NVIDIA presented a lot of news yesterday taking advantage of the GTC 2023 scenarioa very important event that has become a reference in the technological sector at a professional level.

As the list of novelties is very long, and it would be counterproductive to collect them all In a huge article, we are going to focus on the four most important announcements, and we are going to detail them so that you know all their keys.

In general terms, the most interesting novelties that NVIDIA has presented this year are focused above all on the hardware, and are not limited to the traditional model but rather extend to quantum computing. It is precisely with this that we open this article, although we must not forget that the green giant has also confirmed an important boost to AI to be able to take it to any industry, and has strengthened its collaborations with the main giants of the sector.

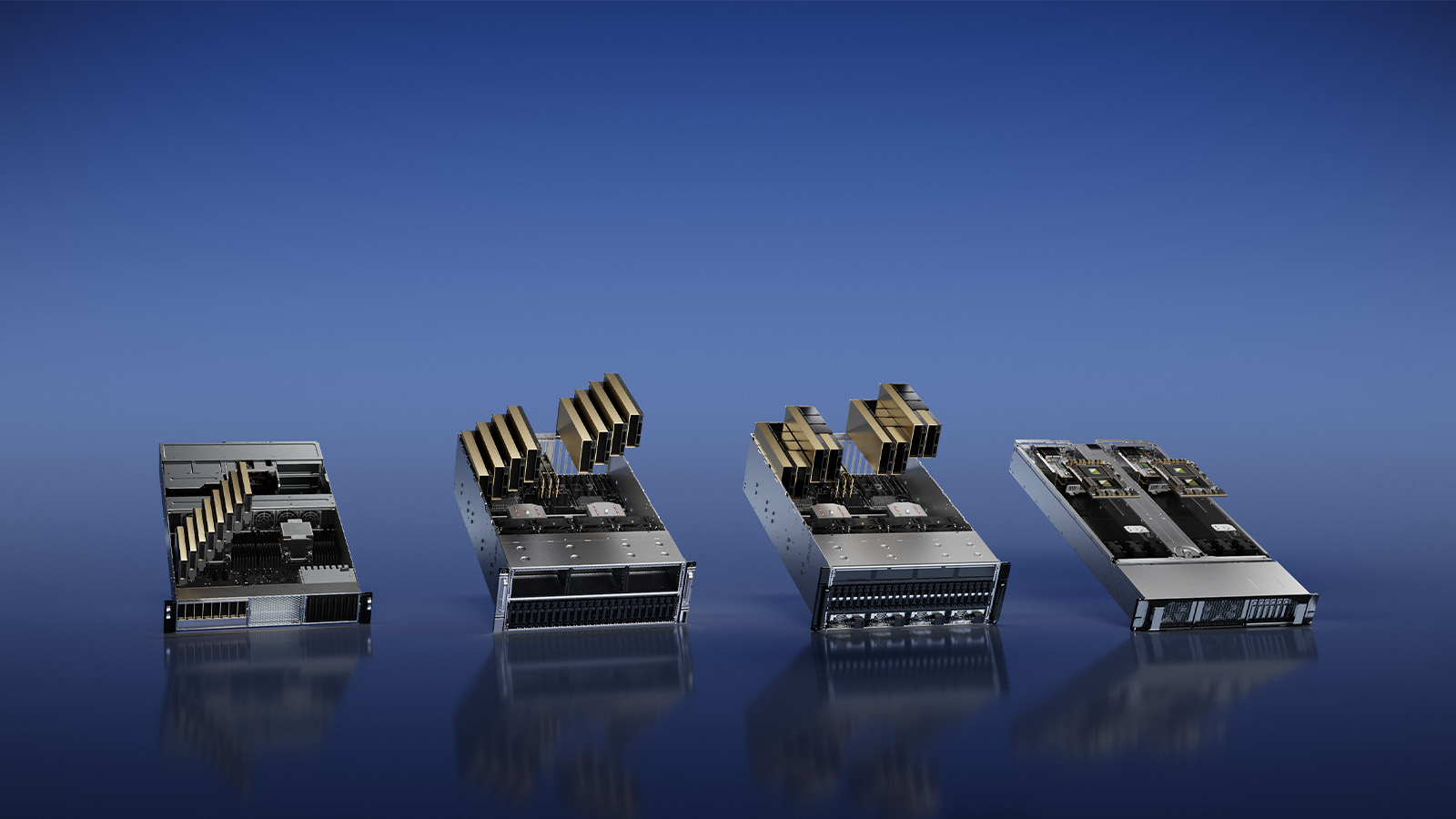

NVIDIA DGX Quantum

Its about first GPU-accelerated quantum computing systemand represents a major inflection point as it shapes a next-generation computing solution powered by NVIDIA’s Grace Hopper superchip and the open source CUDA Quantum programming model, coupled with the world’s most advanced quantum control platform. world, OPX, from Quantum Machines.

With this system it is possible to unite the best of both worlds, classical computing and quantum computing, and this will allow researchers to have all the potential they need to create extraordinary applications, and without having to give up advanced features that are key in quantum computingsuch as calibration, control, quantum error correction, and hybrid algorithms.

At the hardware level, the NVIDIA DGX Quantum has a Grace Hopper super chip, as we said at the beginning, and is connected via a PCIe interface to a Quantum Machines OPX+ system. This allows reduce latency to less than a microsecondand allows near-instant communications between graphics cores and quantum processing units.

Five new GPUs for professional laptops

NVIDIA has also confirmed the release of five new professional laptop GPUs, based on the Ada Lovelace architecture and designed to shape more powerful, efficient and lighter workstations. The RTX 5000 is the most powerful of five, since it has 9,728 shaders, it has 76 RT cores, 304 tensor cores, comes with 16 GB of GDDR6 over a 256-bit bus, and has a configurable TGP of between 80 and 175 watts.

The RTX 4000 is a step behind with its configuration of 7,424 shaders, it has 56 RT cores, it has 232 tensor cores, it has 12 GB of GDDR6 on a 192-bit bus and its TGP can be configured between 60 and 175 watts. The RTX 3500 fits in the upper-middle range with its 5,120 shaders, 40 RT cores and 160 tensor cores, it has 12 GB of GDDR6 on a 192-bit bus and its TGP can be configured between 60 and 140 watts.

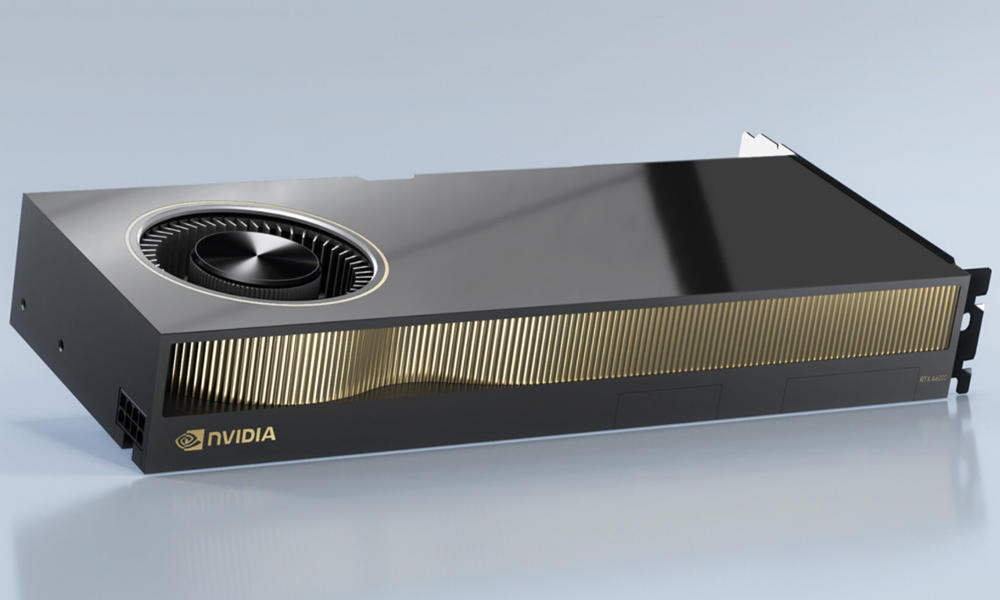

The RTX 3000 is a mid-range model with 4,608 shaders, 36 RT cores, 144 tensor cores, 128-bit bus, 8 GB of GDDR6, and a configurable TGP from 35 to 140 watts. Finally we have the RTX 2000, which has 3,072 shaders, 24 RT cores, 96 tensor cores, 128-bit bus, and 8 GB of GDDR6. Its TGP can be set between 35 and 140 watts. NVIDIA has also announced a RTX 4000 ADA SFF for desktopwhich is nothing more than a small version equipped with 6,144 shaders.

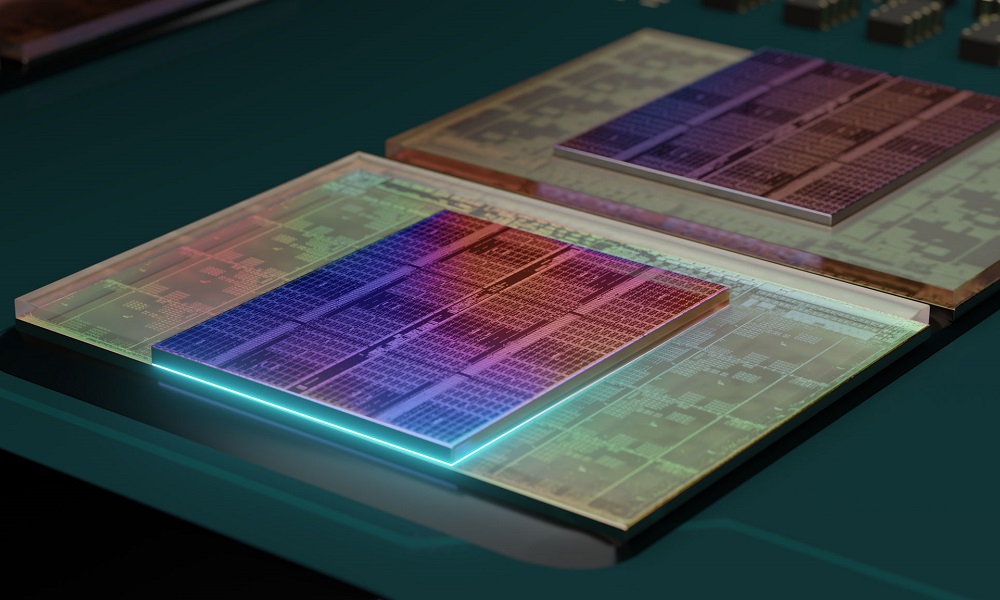

NVIDIA H100 NVL, a dual GPU specialized in Large Language Models (LLM)

The green giant has also surprised us with a Hopper-based dual GPU setup that is specifically designed for work with Large Language Models (LLMs), large language models in a direct translation. It may not sound familiar to some of you and you may have even raised an eyebrow, but if I tell you that Chat-GPT is one of them, you will surely understand the importance of this new hardware.

The acronym NVL refers to NVLink, an interconnection technology that is what allows the two GPUs that make up this accelerator to be joined, and a total of three Gen4 bridges are used. At the specification level, we are facing an impressive solution, since it offers a power of up to 68 TFLOPs in FP64reaches the 134 TFLOPs in FP64 with their tensor cores, and can offer up to 1,979 TFLOPs in FP32 with such cores.

In FP16 the power with the tensor cores is 3,958 TFLOPs, and at INT8 we have a power of 7,916 TFLOPs. The numbers speak for themselves, and yes, they are truly impressive. The NVIDIA H100 NVL has a 6144-bit bus, features 188GB of 5.1GHz HBM3 memory, and offers up to 7.8TB/s. LLM models need large blocks of memory and high bandwidth, so NVIDIA hasn’t cut a thread.