Until now, HPC graphics cards have ruled AI in the professional sector with an iron fist, just as consumer GPUs have dominated gaming and cryptocurrency mining on home PCs. What if an algorithm partially changed that? That is precisely what Deci is offering: the company claims it can shift the traditional paradigm from GPUs to CPUs. Its answer is AutoNAC DeciNets.

GPUs excel at massively parallel operations, provided the software has been designed around their needs. CPUs, on the other hand, can run more complex software and solve a very wide range of problems at impressive speed. Deci has spent years researching and innovating in the field of algorithms, and it now seems to finally have something solid to present.

AutoNAC DeciNets: Efficiency and Accuracy for CPUs in AI

The name EfficientNet may not ring a bell, Google certainly will, and "Google's EfficientNet CNN" may leave you a little puzzled. It is worth understanding how the big G changed the world of so-called convolutional neural networks (CNNs): EfficientNet improves model efficiency by reducing the number of parameters and floating-point operations (FLOPs) needed to reach a given accuracy.
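
EfficientNet's building blocks (MBConv, the mobile inverted bottleneck inherited from MobileNetV2) rely on depthwise separable convolutions, one of the tricks behind its low parameter and FLOP counts. The Python sketch below compares a single standard 3×3 convolution with its depthwise separable equivalent. The layer shape is an arbitrary assumption, and bias, batch norm, channel expansion and squeeze-and-excitation are ignored, so it illustrates the principle rather than EfficientNet's real numbers.

```python
def conv_cost(k, c_in, c_out, h, w):
    """Standard k x k convolution, stride 1, 'same' padding, no bias.
    Returns (parameters, multiply-accumulates)."""
    params = k * k * c_in * c_out
    macs = params * h * w  # every output pixel uses every weight once
    return params, macs


def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Depthwise k x k conv (one filter per input channel) followed by a
    1x1 pointwise conv that mixes channels. Returns (parameters, MACs)."""
    dw_params = k * k * c_in
    pw_params = c_in * c_out
    params = dw_params + pw_params
    macs = params * h * w
    return params, macs


if __name__ == "__main__":
    # Illustrative layer shape (an assumption, not taken from EfficientNet)
    k, c_in, c_out, h, w = 3, 128, 128, 28, 28
    std = conv_cost(k, c_in, c_out, h, w)
    sep = depthwise_separable_cost(k, c_in, c_out, h, w)
    print(f"standard:  {std[0]:,} params, {std[1]:,} MACs")
    print(f"separable: {sep[0]:,} params, {sep[1]:,} MACs")
    print(f"reduction: {std[0] / sep[0]:.1f}x")
```

For this shape the separable version needs about 8.4 times fewer parameters and multiply-accumulates; in general its cost is roughly a fraction 1/c_out + 1/k² of the standard convolution's.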

It is arguably one of the best model families for getting high accuracy out of neural networks on GPUs, CPUs and even TPUs. At this point, it is worth remembering that algorithms largely determine how efficiently the computation runs, so they have to be designed with the hardware that will execute them in mind. Deci knows the potential of current processors, which is why, from its headquarters in Israel, it has spent several years working with Intel Cascade Lake CPUs to produce an improved AutoNAC-generated model that, according to the company, doubles inference performance on these chips, leaving GPUs aside.
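
One way to read "designed with the hardware in mind" is hardware-aware architecture search: candidate networks are scored both by accuracy and by latency measured on the exact chip they will run on. The article does not describe AutoNAC's internals, so the following is only a minimal sketch of the final selection step under a latency budget. The `Candidate` type and `pick_for_hardware` function are hypothetical names, and this is not Deci's method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    top1_accuracy: float  # fraction, e.g. 0.80
    latency_ms: float     # measured on the target device (e.g. one specific CPU)


def pick_for_hardware(candidates, latency_budget_ms):
    """Return the most accurate candidate whose latency on the target
    hardware fits within the budget, or None if nothing fits."""
    feasible = [c for c in candidates if c.latency_ms <= latency_budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c.top1_accuracy)


if __name__ == "__main__":
    # Hypothetical values for illustration only; not measurements of real models.
    cpu_measurements = [
        Candidate("arch_a", 0.78, 4.0),
        Candidate("arch_b", 0.80, 7.5),
        Candidate("arch_c", 0.82, 12.0),
    ]
    print(pick_for_hardware(cpu_measurements, latency_budget_ms=8.0))
```

Because latency is measured per device, the same candidates timed on a GPU could yield a different winner, which is why a model tuned for Cascade Lake CPUs may differ from one tuned for GPUs.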

Instagram, Facebook, Google… They all want the same thing

For a large company, an AI algorithm essentially has to solve two problems: classifying information and recognizing it. That information can arrive as text, video, images or audio, but images are by far the most common, partly because of current hardware limitations.

This even extends to cars: their camera systems split video into individual frames and compare them in record time, so that each static image can be recognized and processed by whichever algorithm is in charge. Deci therefore had a golden opportunity to take an image recognition and classification algorithm to a new level of efficiency on CPUs:

“As deep learning practitioners, our goal is not just to find the most accurate models, but to discover the most resource-efficient models that run smoothly in production – this combination of power and accuracy is the ‘holy grail’ of deep learning,” said Yonatan Geifman, co-founder and CEO of Deci. “AutoNAC creates the best computer vision models to date, and now the new class of DeciNets can be effectively applied and run on CPU AI applications.”

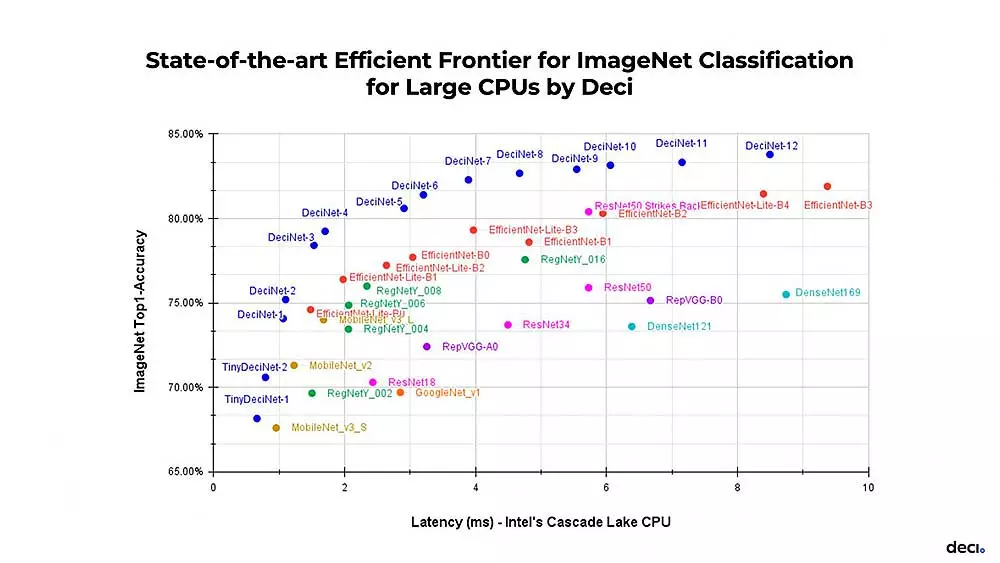

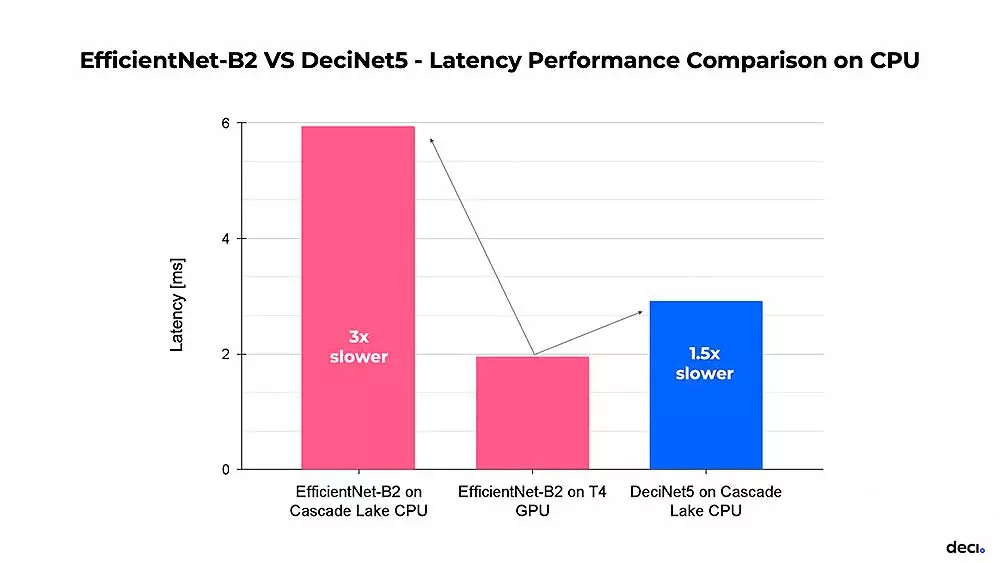

Performance comes very close to that of Google's EfficientNet-B2 running on a GPU: by leveraging Deci's AutoNAC technology and hardware-specific optimization, the gap in inference performance between a GPU and a CPU is halved, without sacrificing model accuracy.
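
Claims such as "the GPU-CPU gap is halved" rest on inference latency measurements. Below is a minimal benchmarking sketch, assuming a plain Python callable stands in for the model; the helper names are hypothetical. Warm-up runs are discarded, the median of many timed runs is reported, and the gap is expressed as a simple CPU/GPU latency ratio, which is only one possible way to define it.

```python
import statistics
import time


def measure_latency_ms(infer, sample, warmup=10, runs=100):
    """Median wall-clock latency of infer(sample), in milliseconds.

    Warm-up calls are discarded so one-off costs (lazy initialization,
    cold caches) do not skew the result."""
    for _ in range(warmup):
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def gpu_cpu_gap(cpu_ms, gpu_ms):
    """How many times slower the CPU is than the GPU for the same model."""
    return cpu_ms / gpu_ms


if __name__ == "__main__":
    # Stand-in workload; replace with a real model's forward pass to benchmark it.
    def fake_model(x):
        return sum(v * v for v in x)

    data = list(range(10_000))
    print(f"median latency: {measure_latency_ms(fake_model, data):.3f} ms")
    # Hypothetical ratios: going from 4x slower to 2x slower halves the gap.
    print(gpu_cpu_gap(cpu_ms=8.0, gpu_ms=2.0), gpu_cpu_gap(cpu_ms=4.0, gpu_ms=2.0))
```

The median is used instead of the mean because occasional scheduler hiccups produce outliers that would otherwise inflate the result.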

Looking at the first chart above, what is clear is that DeciNets achieve higher accuracy than Google's models at every CPU latency point shown, without losing performance. This new DeciNets family could shift part of the work from graphics cards to processors, especially Intel's.
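
The chart's claim can be read as: at every latency budget, the best DeciNet that fits is more accurate than the best EfficientNet that fits. Here is a small Python sketch of that check, assuming each model family is given as a list of (latency in ms, top-1 accuracy) points; the function names are hypothetical and the values in the usage example are placeholders, not numbers read from Deci's charts.

```python
def best_accuracy_within(points, budget_ms):
    """Highest accuracy among (latency_ms, accuracy) points that fit the budget,
    or None if no point fits."""
    fitting = [acc for lat, acc in points if lat <= budget_ms]
    return max(fitting) if fitting else None


def beats_at_every_budget(family_a, family_b, budgets_ms):
    """True if family_a is strictly more accurate than family_b at every
    budget where family_b has at least one model that fits."""
    for budget in budgets_ms:
        a = best_accuracy_within(family_a, budget)
        b = best_accuracy_within(family_b, budget)
        if b is None:
            continue  # family_b has nothing this fast, so nothing to beat
        if a is None or a <= b:
            return False
    return True


if __name__ == "__main__":
    # Placeholder points for illustration only; not read from any published chart.
    family_a = [(3.0, 0.77), (6.0, 0.80)]
    family_b = [(4.0, 0.76), (8.0, 0.79)]
    print(beats_at_every_budget(family_a, family_b, budgets_ms=[4, 6, 8]))
```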