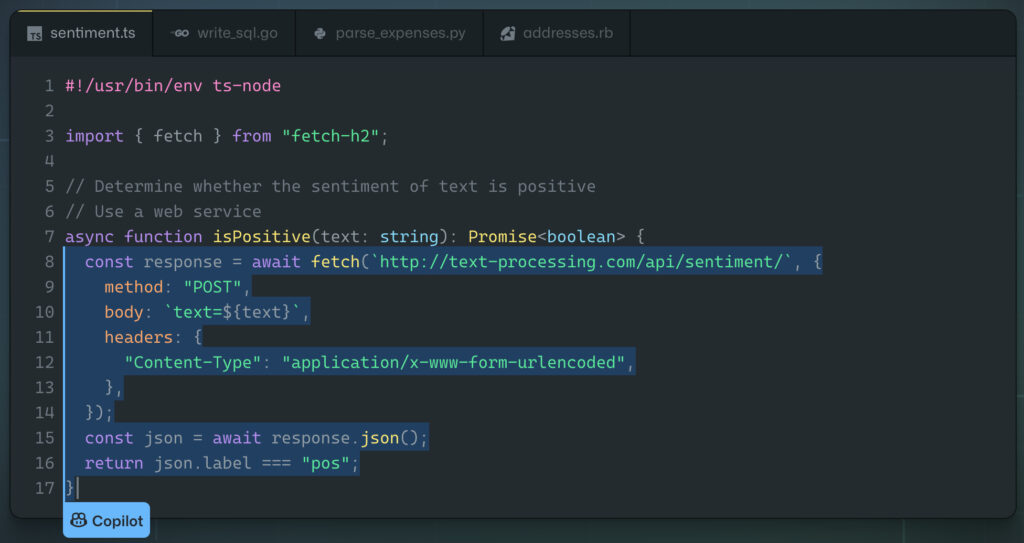

GitHub Copilot aims to make life easier for programmers by automatically suggesting snippets of code or entire functions. Can such an invention really work?

Code that writes itself? It is the dream of many coders who wrestle with the intricacies of different programming languages every day. And that’s what GitHub and OpenAI promise, at least in part, with “pair programming with artificial intelligence”.

Named GitHub Copilot, the feature was announced on June 29 but is not yet available to the general public: for now, you must sign up for the technical preview to get access. There is no official date yet for a large-scale commercial release. The explanations given by GitHub and its CEO, Nat Friedman, nevertheless sketch out a promising technology that could save time for developers, beginners and veterans alike.

What is GitHub Copilot?

GitHub Copilot is the result of a partnership between GitHub, the very popular development platform, and OpenAI, a company specializing in artificial intelligence research and known, among other things, for having created an extremely powerful text generator. Copilot is powered by an AI model derived from that text generator: OpenAI Codex.

“OpenAI Codex is able to mimic the way people code,” says Nat Friedman in a blog post, “and it is considerably better than previous text generation software because it has been trained on very large data sets, including a lot of open source code.” The post speaks of several billion lines of code analyzed.

The promise of Copilot is impressive: it would suggest entire lines of code and even whole functions. In the examples presented by GitHub, Copilot automates certain repetitive tasks, converts comments into lines of code, and offers several alternative solutions when you are stuck on a passage.
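To make the idea of turning a comment into code concrete, here is a minimal, hand-written sketch in Python. It is not actual Copilot output: the function name `days_between` and its body are hypothetical, chosen only to show the kind of completion a developer might hope to receive after typing a descriptive comment.

```python
from datetime import date

# The developer types only this comment:
# Compute the number of whole days between two ISO-formatted dates.

# Hypothetical completion (written by hand for this example,
# not generated by Copilot):
def days_between(start: str, end: str) -> int:
    first = date.fromisoformat(start)
    second = date.fromisoformat(end)
    # abs() makes the result independent of argument order.
    return abs((second - first).days)


# The suggestion still has to be checked before it is trusted.
print(days_between("2021-06-29", "2021-07-29"))  # prints 30
```

Even in a case this small, the developer has to verify the result, for example by confirming that swapping the two dates gives the same answer.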

According to GitHub, Copilot is even good enough to work out what to code from the surrounding context. OpenAI promises that it could “learn from the corrections you make to its suggestions, and adapt to your writing style”. The two companies also plan to offer Copilot for several languages: Python, JavaScript, TypeScript, Ruby, and Go.

Does it really work?

So, does this revolutionary invention really work? For now, it’s hard to say: there has not yet been a full-scale test with a large number of users, since Copilot is only available in technical preview. GitHub is very happy with the results, citing engineers who have tested the feature and judge it “mind blowing”.

It is difficult to take this at face value, however, given that these testimonials were chosen by GitHub. The platform does acknowledge in its FAQ that Copilot “doesn’t write perfect code”: “The code that Copilot offers doesn’t always work, and doesn’t always make sense. Lines of code written by Copilot must always be tested, evaluated, and approved.”

What is the future of such technology?

Potentially, such technology could change a lot of things. If Copilot does come to be used on a large scale, one can imagine shorter production times and more robust code, but that certainly won’t happen right away. As noted above, there is currently no announced date for a large-scale Copilot launch. GitHub has not announced anything definitive on this front; it mentions only a potential commercial release “if the tests with the technical preview are conclusive”.

GitHub does warn users of its new technology, however: like all artificial intelligences, Copilot has biases. They will be all the more dangerous if they are repeated endlessly across millions of lines of open source code. “We have put in place filters in the technical preview that allow you to avoid offensive terms,” the FAQ explains, but “it is not impossible that discriminating results appear”. AI models are trained on huge data sets, and if those data sets contain racist or sexist biases, the models risk learning them and later reproducing them.