Google is improving the accessibility of Android. A new feature, available first on Android 12 and later on other versions, lets you navigate the interface using facial expressions. Here's how to use it.

Android 12 introduces a feature that can be very useful for people with reduced mobility: controlling the interface with facial expressions. This tool lets you navigate on-screen menus, select items, or go back with a raised eyebrow, a glance to the left, or a smile.

The feature, found in the accessibility settings, debuts on Android 12 but should reach earlier versions of the system in a later update. Google hasn't said exactly when or to which versions it will come, though. Since Android 12 arrives on Pixel phones first, Pixel owners will be able to try it before others, but the tool will become more widely available later.

How do you activate facial detection?

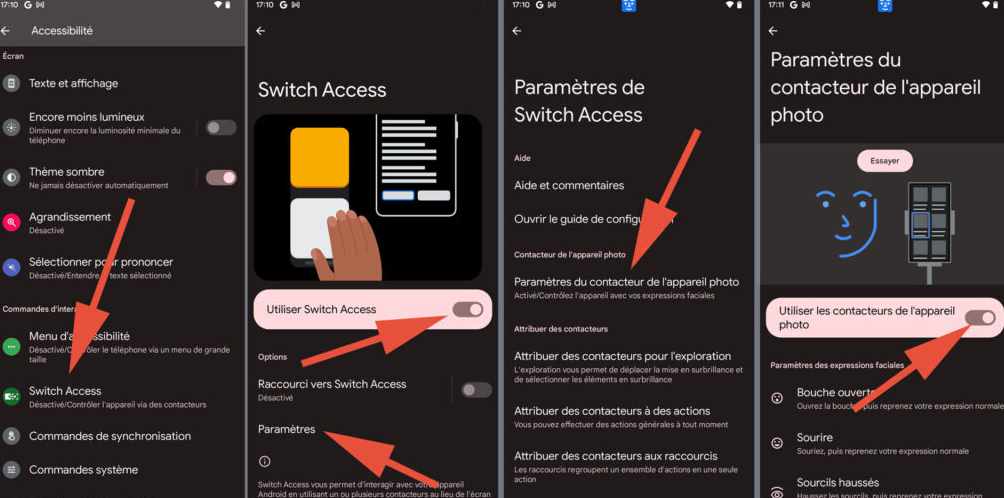

To take advantage of this new feature, you must first enable it in your phone's settings. To do this:

- Open the Settings app

- Go to the Accessibility menu

- Tap Switch Access

- Turn on the Use Switch Access toggle

- Tap Allow in the pop-up window

- Open the Settings menu

- Choose Camera switch settings

- Turn on the Use camera switches toggle

If you followed these steps correctly, a small face in a bubble should appear at the top of the screen. This means your phone has started detecting your movements.

How do you use facial detection?

Once the option is enabled, you can start controlling your phone with facial expressions, using the following default mapping:

- Open your mouth: move to the next item on the screen

- Smile: select an item

- Raise your eyebrows: return to the previous item on the screen

In our tests (on a Pixel 3), the tool proved responsive and effective. Keeping your mouth open for a few seconds lets you move quickly from one element on the screen to the next. A smile simulates a tap on the screen. Navigation is less convenient than with the touchscreen, but the feature is aimed precisely at people for whom the touchscreen is not a practical option.

Going further with facial detection

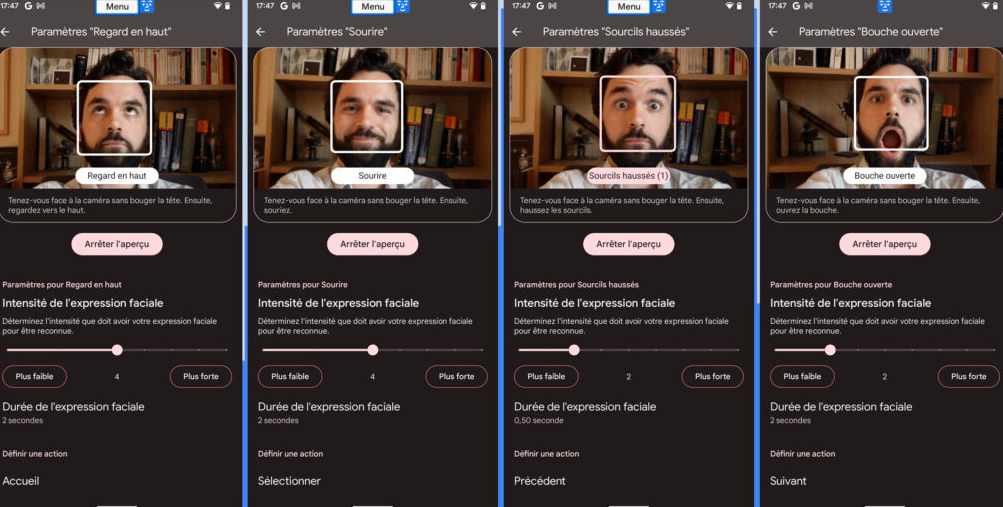

In addition to these basic navigation gestures, Google offers other expressions for navigating the OS. You can configure an action for looking left, up, or right.

To use them, simply select them in the Camera switch menu and assign each one an action. For example, you can map a shortcut back to the home screen to looking up.

Detection is slightly less accurate than with the default gestures, but it still works quite well. On the other hand, these gestures necessarily require you to look away from the phone's screen. This complicates navigation a bit, since the screen is no longer fully in your field of vision.

You can also adjust the "facial expression intensity" so that the phone stops reacting to involuntary movements. This helps you avoid false positives, where the phone registers an unintentional movement as a command.

If navigating with these gestures is too fast for you, you can also adjust the "facial expression duration". This slows down the movement of the selection frame, letting you navigate more slowly and precisely. The default values tend to move the frame a little too fast to select an item on the screen precisely.

Facial detection is performed locally on your phone, so in principle there is no reason to fear Google analyzing all of your facial expressions.