Few debates are more complex, or involve more irreconcilable positions, than the confrontation between security and privacy. Apple has experienced this firsthand since announcing NeuralHash in August of last year. The response was so forceful that, less than a month later, the company was forced to postpone the project, stating that it would meet with experts from all the areas involved in search of an effective solution that would strike the desired balance between security (in this case, that of minors) and privacy.

In case you don’t remember or didn’t read about it at the time, NeuralHash was a proposal to detect CSAM (Child Sexual Abuse Material, pedophilic content) by reviewing the images that users upload from their devices to iCloud. And how was it going to do it? Well, the name itself puts us on the right track: by generating a hash for each file and then comparing it against a database of hashes of images already identified as CSAM. In addition, as Apple explained, the system would be able to tolerate some changes in the images (and, of course, in their corresponding hashes), so that a small modification would not be enough for these images to go unnoticed.
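To make the idea of a hash that survives small modifications more concrete, here is a minimal sketch in Python. It is not Apple's algorithm: NeuralHash itself is not reproduced here, and the sketch swaps in a toy "average hash" over a tiny grayscale grid as a stand-in. The function names (`average_hash`, `hamming_distance`, `matches_known_hash`), the sample pixel grids and the `max_distance` tolerance are all hypothetical, chosen only for illustration.

```python
from typing import List, Set

Image = List[List[int]]  # grayscale pixel values, 0-255


def average_hash(pixels: Image) -> int:
    """Toy perceptual hash (a stand-in, NOT NeuralHash): one bit per pixel,
    set when that pixel is brighter than the image's mean brightness."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_known_hash(candidate: int, known_hashes: Set[int], max_distance: int = 2) -> bool:
    """True if the candidate is within max_distance bits of any known hash.
    max_distance is an illustrative tolerance, not a value from Apple."""
    return any(hamming_distance(candidate, k) <= max_distance for k in known_hashes)


# Hypothetical 4x4 "images" used only to exercise the functions.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [240, 20, 250, 35],
    [245, 30, 235, 40],
]

# A lightly modified copy: slightly brightened, with one pixel altered.
tweaked = [[min(255, p + 5) for p in row] for row in original]
tweaked[0][2] = 180

# A different image (the inverse brightness pattern).
unrelated = [
    [200, 10, 220, 30],
    [210, 15, 230, 25],
    [20, 240, 35, 250],
    [30, 245, 40, 235],
]

database = {average_hash(original)}  # stands in for the database of known hashes

print(matches_known_hash(average_hash(tweaked), database))
print(matches_known_hash(average_hash(unrelated), database))
```

The design point is the comparison step: an exact lookup would miss the tweaked copy, whose hash differs in one bit, whereas a distance threshold still catches it. That same tolerance is also where false positives can come from, since unrelated images may occasionally land within the threshold.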

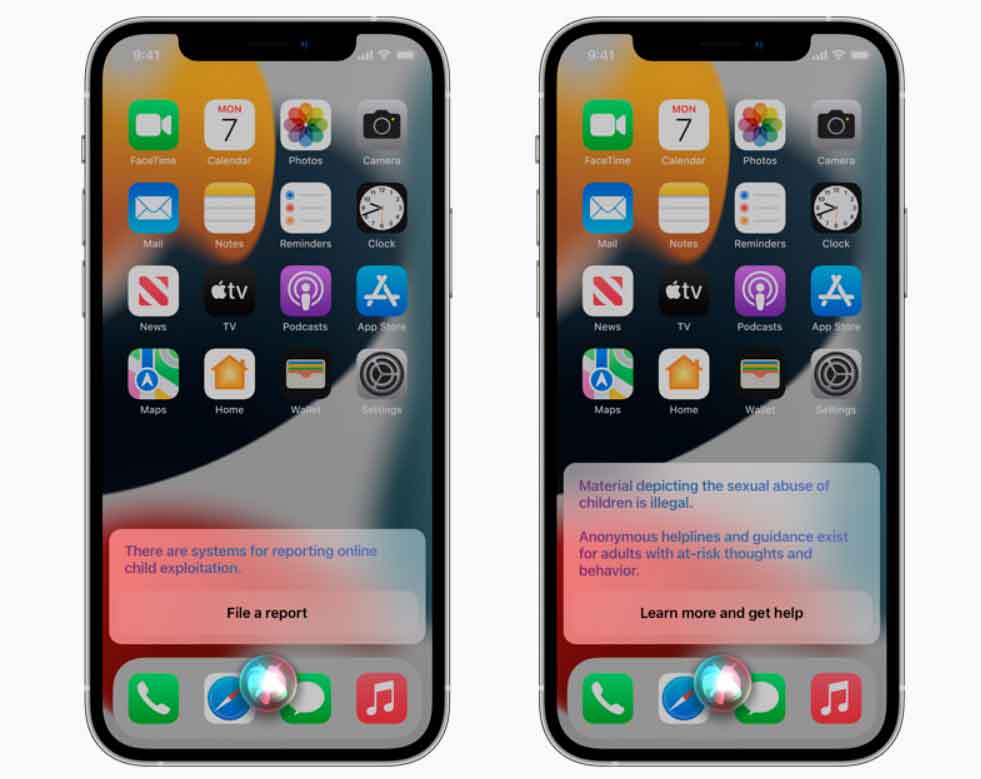

In the event of a positive identification, the system would automatically block that iCloud account and, additionally, inform the corresponding authorities so that they could take the appropriate measures. As for the unlikely case of false positives, which Apple downplayed, the company claimed that users would have a way to report the error, which would trigger a manual review to confirm whether or not it was indeed a mistake.

The response against it was, as we told you at the time, more than forceful. For an Apple that has championed the privacy and security of its users for many years, it was a huge setback, to the point that, despite the announced plans to “rethink NeuralHash”, the project actually ended up sitting at the bottom of a drawer. A drawer that we can assume is very, very deep.

We had not heard about it since then and finally, as we can read in Wired, Apple has decided to throw in the towel and has canceled NeuralHash. This is what the company says about it:

“After extensive expert consultation to gather feedback on the child protection initiatives we proposed last year, we are deepening our investment in the Communication Safety feature we first made available in December 2021,” the company told WIRED in a statement. “We’ve also decided not to move forward with our previously proposed CSAM detection tool for iCloud Photos. Children can be protected without companies reviewing personal data, and we will continue to work with governments, children’s advocates and other companies to help protect young people, preserve their right to privacy and make the Internet a safer place for children and for all of us.”

Apple will therefore focus its efforts against CSAM on the tools it provides to parents and guardians so that they can adequately supervise the content their children receive and use, features that began rolling out at the end of last year and that will continue to evolve.

However, it remains to be seen how this fits into the plans of the European Union, which in the middle of this year began to raise the possibility of establishing systems similar to NeuralHash, with the collaboration of technology companies, to pursue CSAM on the web.