Photo Scanning for Child Abuse

One of the main features will be the ability to detect images that contain child sexual abuse material (CSAM). This will be done with algorithms that can identify these images automatically. It is important to note that the scanning will only apply to images that are shared with iCloud. Apple has also stressed that the system was designed with user privacy in mind. The hashing technology used in this case is called NeuralHash; it analyzes an image and converts it into a unique number.
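NeuralHash itself is a neural-network-based perceptual hash whose internals are not reproduced here. To illustrate the general idea, the sketch below swaps in a much simpler perceptual hash, the classic "average hash". Unlike a cryptographic hash, a perceptual hash is designed so that visually near-identical images (for example, a slightly brightened copy) map to the same number, while different images produce different bits. The 4x4 grayscale grid and the names `average_hash` and `hamming` are illustrative assumptions; a real implementation would first downscale and convert an actual photo.

```python
from typing import List

# Assumption: the image has already been downscaled to a small grayscale grid (0-255).
Image = List[List[int]]


def average_hash(pixels: Image) -> int:
    """Average hash (not NeuralHash): one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


# Usage: a checkerboard, a slightly brighter copy of it, and an unrelated pattern.
original = [[10, 200, 10, 200], [200, 10, 200, 10], [10, 200, 10, 200], [200, 10, 200, 10]]
brightened = [[p + 5 for p in row] for row in original]
other = [[200, 200, 10, 10], [200, 200, 10, 10], [10, 10, 200, 200], [10, 10, 200, 200]]

print(hex(average_hash(original)))                              # 0x5a5a
print(average_hash(original) == average_hash(brightened))      # True
print(hamming(average_hash(original), average_hash(other)))    # 8
```

Because every pixel in the brightened copy shifts by the same amount as the mean, each pixel-versus-mean comparison is unchanged, so both images get the same hash.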

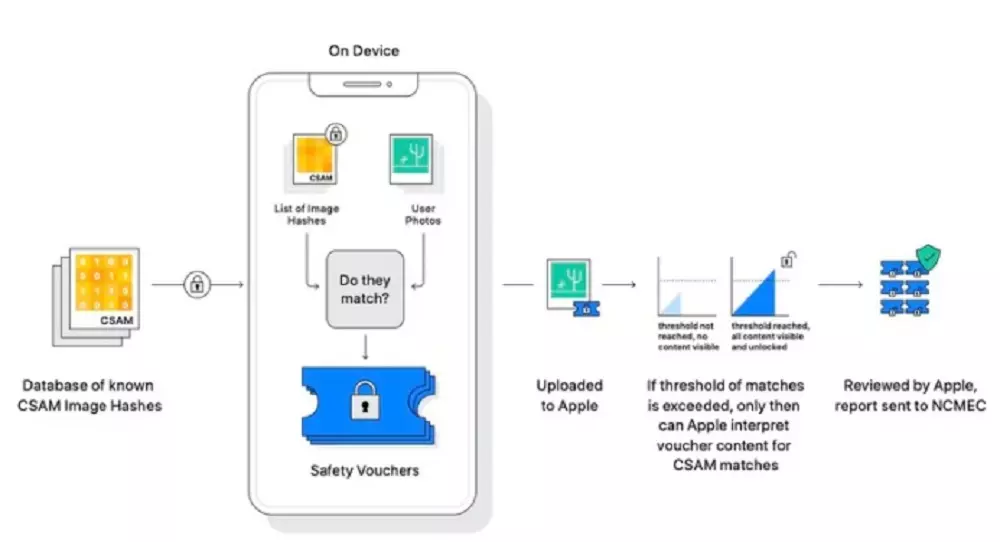

Before an image is uploaded to iCloud, the device will analyze it and compare it against a database of hashes of known illegal content. If the comparison yields a match, a security ticket (Apple calls it a "safety voucher") containing this data will be generated and uploaded to iCloud along with the image. Apple will then review the match manually rather than leaving the decision entirely to the AI. If this manual review also confirms the match, it will be reported to the competent authorities. Although the process sounds simple when described this way, Apple's own technical documents show how complex the system actually is.
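The matching flow described above can be sketched as follows, with the security ticket attached to each upload modeled as a small `Voucher` record. This is a deliberately simplified sketch: it uses a plain set lookup, whereas Apple's technical documents describe cryptographic techniques (private set intersection and threshold secret sharing) so that neither the device nor Apple learns about individual matches below a threshold. The names `check_before_upload`, `Account` and `REVIEW_THRESHOLD`, and the threshold value, are hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical placeholder: Apple describes a match threshold before any human
# review happens, but this particular value is purely illustrative.
REVIEW_THRESHOLD = 3


@dataclass
class Voucher:
    image_id: str
    matched: bool


@dataclass
class Account:
    vouchers: List[Voucher] = field(default_factory=list)

    def match_count(self) -> int:
        return sum(v.matched for v in self.vouchers)

    def needs_human_review(self) -> bool:
        # Only accounts that reach the threshold are passed on for manual review.
        return self.match_count() >= REVIEW_THRESHOLD


def check_before_upload(image_id: str, image_hash: int, known_hashes: Set[int]) -> Voucher:
    # Simplification: a direct set lookup instead of private set intersection.
    return Voucher(image_id=image_id, matched=image_hash in known_hashes)


def upload(account: Account, image_id: str, image_hash: int, known_hashes: Set[int]) -> None:
    voucher = check_before_upload(image_id, image_hash, known_hashes)
    account.vouchers.append(voucher)  # the voucher travels with the image to iCloud


# Usage with made-up hash values.
known = {0x5A5A, 0xCC33}
acct = Account()
upload(acct, "a.jpg", 0x1234, known)
upload(acct, "b.jpg", 0x5A5A, known)
print(acct.match_count(), acct.needs_human_review())  # 1 False
```

A single match does not trigger a review on its own; that is the point of the threshold, which reduces the impact of occasional false positives from the hash.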

Apple has stated that the feature will initially arrive with iOS 15 and iPadOS 15, but in a limited way: it will not be available in every country. The first country will be the USA, and the company believes it may expand the feature to other countries over time. This is logical, since it requires coordination with local authorities and adaptation to local regulations. In Europe, for example, privacy legislation is much more extensive. This can be clearly seen in the security measures the App Store applies to protect users from any type of threat.

Messages will be more secure

Beyond detecting illegal material in the photo library, Apple will also introduce new features in Messages. This is the native communication channel of the company's ecosystem, and minors who use it may come into contact with unwanted people, such as pedophiles. The features will be integrated into the parental controls of the iPhone and iPad. The operating system will analyze the images received through the service on the device, and if a sexually explicit image is detected, it will be automatically blurred.

In addition, when a child tries to view a sensitive image, the parents will be notified. This applies to younger children; older children will only receive a warning before opening an image that contains hurtful or sexually explicit content. These are undoubtedly interesting features for parental control.
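The Messages behavior described in the last two paragraphs boils down to a small decision policy, sketched below, where a flagged image is hidden behind a blur with a warning, as Apple described the feature. The on-device classifier is out of scope: `explicit_score` is a hypothetical stand-in for its output, and `EXPLICIT_SCORE_THRESHOLD` is an invented cut-off. The age cutoff of 12 is an assumption based on Apple's initial announcement, which limited parental notifications to children 12 and under.

```python
from dataclasses import dataclass
from enum import Enum

EXPLICIT_SCORE_THRESHOLD = 0.8  # hypothetical classifier cut-off
PARENT_NOTIFY_MAX_AGE = 12      # assumption: parental notifications only for children 12 and under


class Action(Enum):
    SHOW = "show"
    BLUR_AND_WARN = "blur_and_warn"


@dataclass
class Decision:
    action: Action
    notify_parents_if_viewed: bool


def handle_incoming_image(explicit_score: float, child_age: int, parental_notifications_on: bool) -> Decision:
    """Decide how Messages treats an incoming image on a child's device (illustrative policy only)."""
    if explicit_score < EXPLICIT_SCORE_THRESHOLD:
        return Decision(Action.SHOW, notify_parents_if_viewed=False)
    # Sensitive image: always blur and warn; parents are notified only for younger children.
    notify = parental_notifications_on and child_age <= PARENT_NOTIFY_MAX_AGE
    return Decision(Action.BLUR_AND_WARN, notify_parents_if_viewed=notify)


# Usage
print(handle_incoming_image(0.95, child_age=10, parental_notifications_on=True))
print(handle_incoming_image(0.95, child_age=15, parental_notifications_on=True))
print(handle_incoming_image(0.10, child_age=10, parental_notifications_on=True))
```

Keeping the policy separate from the classifier makes it easy to see that older children get the same warning as younger ones, just without the parental notification.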

The main problem lies in privacy. Many security experts believe this technology could end up being used for other purposes, and that it may eventually become a surveillance system. Although it will initially focus on protecting children, it could also end up being used to scan iPhones in search of political activists.