Exploring the limits of chatbots like ChatGPT is very much the order of the day. The companies that create these artificial intelligence models aim to prevent their illegitimate use, but there are so many ways to “do evil” that, inevitably, from time to time we learn of a new use that, given the limits those responsible try to impose, should not be possible. And yet it turns out to be.

This means that, today, debates such as the one opened by the letter signed by more than 1,000 scientists and technology experts make all the sense in the world. We are living in exciting times with regard to artificial intelligence, but for this story to have a happy ending, it is increasingly necessary to impose regulations and limitations, strictly and with a global scope, that keep what seems sensational today from becoming a nightmare for humanity.

When we talked about the launch of GPT-4 a few weeks ago, we saw that OpenAI devoted a good amount of space in the paper describing the new model to analyzing how its AI identifies questions that it should not answer. Thus, we know that this new version of the most popular and recognized text generation AI of the moment offers many improvements in this regard, not only over its predecessor, GPT-3, but also over the early versions of GPT-4 itself.

Recently, the YouTuber Enderman (yes, a nod to the inhabitants of the End in Minecraft) published a video in which he explains how he used ChatGPT to create Windows 95 activation codes. In the video we can see the process he followed and how, after various adjustments to the initial prompt, he managed to get the chatbot to provide him with valid keys to activate installations of the historic Microsoft operating system.

However, can we consider this a security flaw in ChatGPT? Not really, or at least not completely, and I’ll explain why. Enderman first asked the chatbot to create a Windows 95 installation key, a request that the AI refused. The next thing the YouTuber did, however, was write a prompt based on the algorithm Microsoft used at the time to create these activation codes. This algorithm has been publicly known for many years now.

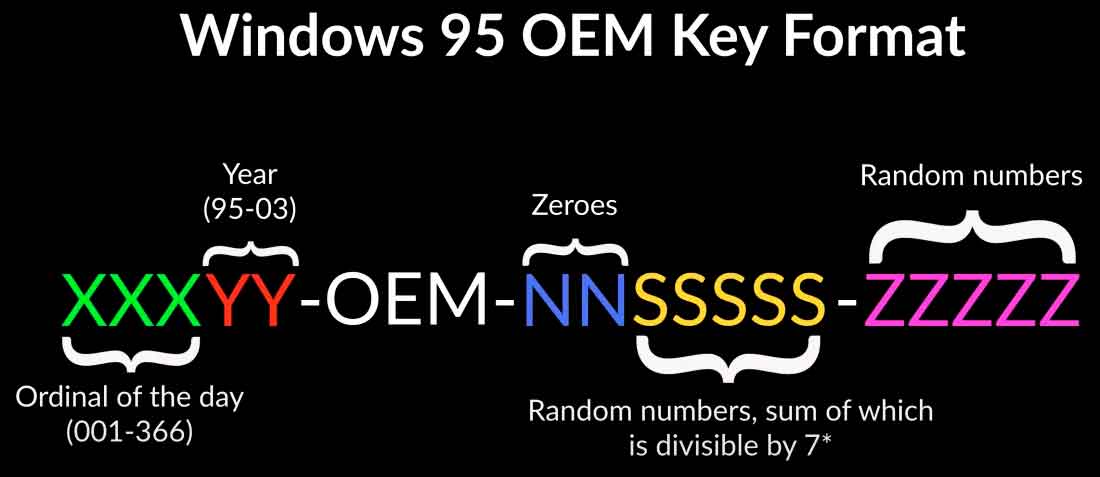

So, with knowledge of this formula, Enderman made use of ChatGPT’s limited mathematical capabilities, along with its ability to generate the type of text requested, to generate strings that match that pattern. Which, in case you’re wondering, is the following:

Image: TechSpot
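To give a sense of why such a request looks like plain arithmetic, here is a minimal Python sketch. It rests on an assumption not spelled out in this article: public write-ups of the old key format commonly describe a seven-digit segment whose digits must add up to a multiple of 7. The function names are hypothetical, and the sketch covers only that one check, not a complete key.

```python
import random
from typing import Optional


def digit_sum_divisible_by_7(segment: str) -> bool:
    """Return True if `segment` is all ASCII digits and their sum is a multiple of 7.

    Assumption: this mirrors the checksum rule commonly described in public
    write-ups; it is not taken from the article itself.
    """
    if not (segment.isascii() and segment.isdigit()):
        return False
    return sum(int(ch) for ch in segment) % 7 == 0


def random_segment(length: int = 7, rng: Optional[random.Random] = None) -> str:
    """Draw random digit strings until one passes the check (rejection sampling)."""
    rng = rng or random.Random()
    while True:
        candidate = "".join(rng.choice("0123456789") for _ in range(length))
        if digit_sum_divisible_by_7(candidate):
            return candidate


if __name__ == "__main__":
    segment = random_segment()
    print(segment, digit_sum_divisible_by_7(segment))
```

Nothing in this code mentions an operating system or a license, which is exactly the point: phrased this way, the task is indistinguishable from a school exercise about digit sums.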

After several adjustments to the initial prompt, the YouTuber managed to get ChatGPT to generate several valid keys for Windows 95, something that has no value in the real world. We are talking about an operating system from almost 30 years ago, which has not had technical support for decades and for which it is difficult to find much use today, apart from nostalgic users running it on emulated systems for software and games designed specifically for Windows 95, and little or nothing else.

What happens here is that the request made to ChatGPT is not identifiable, at least at first glance, as what it actually turns out to be. It is comparable to asking an AI to solve certain mathematical calculations that we will later use maliciously. How can an AI know that helping us solve a bunch of equations might pose a security threat in some way? That is the problem: there is no way.

Perhaps those threatened by this kind of chatbot misuse could make ChatGPT aware of their specific key creation algorithms (although they would have to provide them to OpenAI to do so, which is hardly an appetizing prospect), so that if the chatbot identifies any of them in a prompt, it will refuse to respond. But then again, this is terribly complex in many ways, so it hardly seems feasible.