Under fire over privacy, Apple provides more details on its tool for combating child pornography and the ambitions behind it.

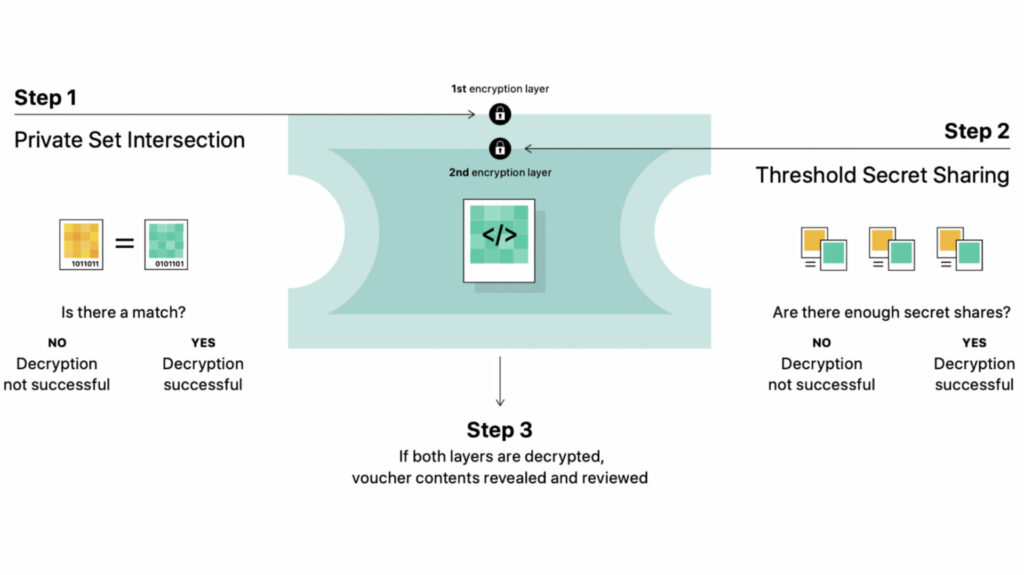

Amid a controversy over its tool for combating child pornography, Apple has spoken out to better explain how the NeuralMatch algorithm at the heart of the dispute works.

What are the safeguards?

In a document published on Monday, August 9, Apple responds to the most serious criticisms leveled at it, including those concerning the risk that such a tool could be put to authoritarian use. “Let’s be clear, this technology is limited to the detection of child pornography content stored in the cloud and we will not accede to any government request to expand it,” the company writes.

Asked about the subject, Apple does not deny the problems this tool could pose, but tries to offer reassurance about its overall impact. For the moment, NeuralMatch is deployed only in the United States, in collaboration with a children’s rights association. Any extension will take place only after a serious analysis of the associated risks in each country, the manufacturer explains. The group did not elaborate on the possibility that countries with stricter laws might try to legally force it to extend the system to their territory, and merely says it will look closely at all these aspects.

Beyond the geopolitical aspects, Apple specifies that other technical safeguards exist. First, users will be warned that such a tool is present on their systems and can still disable iCloud synchronization, which renders the tool inoperative, since only photos uploaded to the cloud are processed.

There is no 100% guarantee

The human review carried out at the end of the process, when a problem is flagged, offers a final safety net. Apple says it does not work directly with the authorities, preferring to deal with associations.

In this field, there is no such thing as zero risk: no measure can guarantee with 100% certainty that this tool will never be misused. Apple believes that by limiting the scope of NeuralMatch and making the mechanism as sophisticated and complex as possible, it will reduce the risk of abuse. The matter is a sensitive one. Although Apple is generally quite firm on privacy protection, it will be necessary to keep a close eye on how this future tool actually works and how it is implemented.