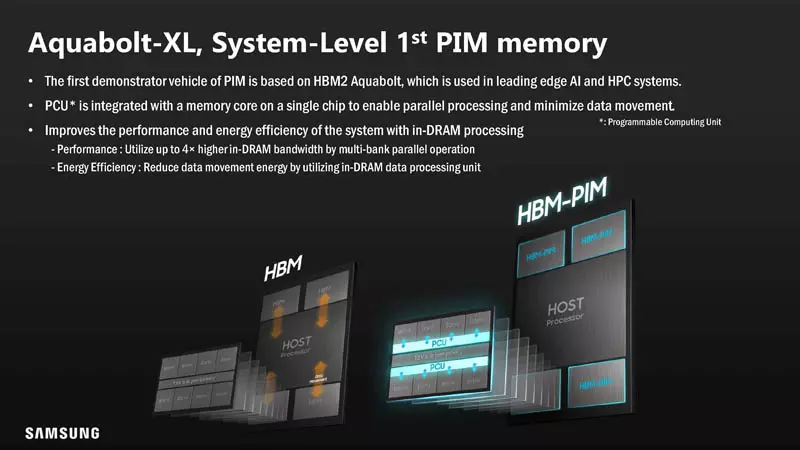

The first thing to understand, at the time of writing, is that HBM-PIM is not a standard approved by JEDEC, the committee of some 300 companies in charge of creating the different memory standards, whether volatile or persistent. For now it is a proposal and design by Samsung. It could become a new type of HBM memory manufactured by third parties or, failing that, an exclusive product of the South Korean manufacturer.

Whether it becomes a standard or not, HBM-PIM will be manufactured for the Alveo AI accelerator from Xilinx, a company that, we should remember, has been acquired by AMD. So it is neither a paper concept nor a laboratory product: this type of HBM memory can be manufactured in large quantities. That said, the Xilinx Alveo is an FPGA-based accelerator card used in data centers, not a mass-market product. We must also bear in mind that HBM-PIM is a variant of HBM memory, which by itself is very expensive and scarce to manufacture, and this limits its use in commercial products such as gaming graphics cards or processors.

The concept of in-memory computing

The programs we run on our PCs rely on a partnership between RAM and CPU, which would be ideal if we could put both on a single chip. Unfortunately, this is not possible, and keeping them apart creates a series of bottlenecks inherent in the architecture of any computer, caused by the latency between system memory and the central processing unit:

- The greater the distance, the longer it takes for data to arrive.

- Energy consumption increases with the distance between the processing unit that executes the program and the storage unit where the program resides. On top of that, the transfer speed (bandwidth) is lower than the processing speed, so the processor ends up waiting for data.

- The usual way to mitigate this problem is to add a cache hierarchy to the CPU, GPU or APU, which keeps copies of data from RAM inside the chip for faster access to the information it needs.

- Other architectures use what is known as scratchpad RAM, a form of embedded RAM that, unlike a cache, is not managed automatically: its contents have to be controlled by the program.

The problem with RAM integrated in the processor is its capacity: it can hold very little data because of physical space limitations, since the vast majority of the chip's transistors are dedicated to processing instructions rather than to storage.
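To make the role of the cache more concrete, here is a minimal sketch of a direct-mapped cache, one of the simplest ways hardware can keep copies of RAM blocks close to the processor. The class name, number of lines and block size are illustrative assumptions, not a model of any particular CPU, GPU or APU. A scratchpad would differ in that the program itself, not this lookup logic, decides what gets copied in.

```python
from typing import List, Optional


class DirectMappedCache:
    """Minimal direct-mapped cache model (illustrative assumption, not real hardware).

    Each line holds one block of RAM; a block can live in exactly one line.
    """

    def __init__(self, num_lines: int, block_size: int) -> None:
        self.num_lines = num_lines
        self.block_size = block_size
        # Tag of the block currently stored in each line (None = empty line).
        self.tags: List[Optional[int]] = [None] * num_lines
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit, False on a miss (which fills the line)."""
        block = address // self.block_size
        index = block % self.num_lines   # which line this block maps to
        tag = block // self.num_lines    # identifies the block within that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        # Miss: copy the block from RAM into the cache, evicting the old one.
        self.tags[index] = tag
        self.misses += 1
        return False


cache = DirectMappedCache(num_lines=8, block_size=16)
for _ in range(2):              # two passes over the same 128 bytes
    for addr in range(128):
        cache.access(addr)
print(cache.hits, cache.misses)
```

With 8 lines of 16 bytes, two passes over 128 consecutive bytes miss only on the first touch of each block (8 misses, 248 hits). Addresses that map to the same line, such as 0 and 128 here, keep evicting each other, which is a small-scale version of the capacity limit described above.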

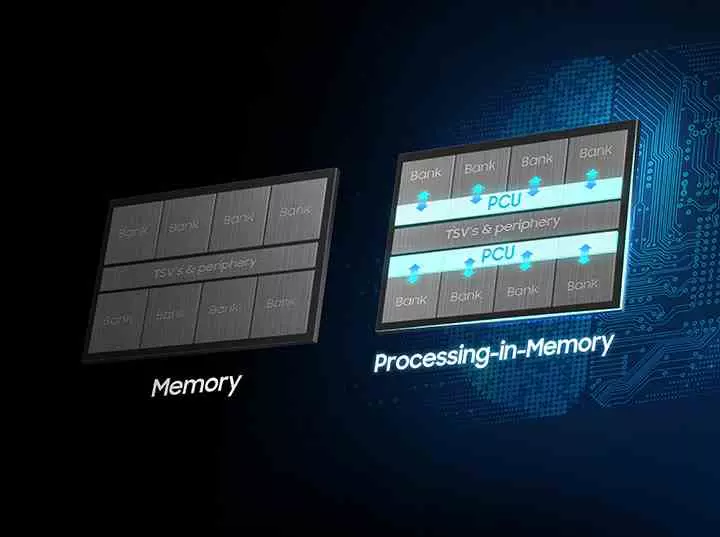

In-memory computing takes the opposite approach to embedded SRAM: rather than adding memory to a processor, it adds logic to RAM, where the bit cells still carry most of the weight. It is therefore not a question of integrating a complex processor, but a domain-specific one, or even hardwired, fixed-function accelerators.

And what are the advantages of this type of memory? When a program runs on any processor, each instruction requires at least one access to the RAM assigned to that CPU or GPU. The idea of in-memory computing is simply to store a program in the PIM memory, so that the CPU or GPU only has to issue a single call instruction and wait for the in-memory processing unit to execute the program and return the final result, leaving the CPU free for other tasks in the meantime.
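The difference between the two execution models can be illustrated with a toy model that only counts how many words cross the memory bus. Everything here (`PimMemory`, `host_add`, the one-word cost of a call) is a hypothetical simplification, not Samsung's programming interface: the host path reads both operands and writes the result element by element, while `call` stands in for the single call instruction and leaves the result in memory.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PimMemory:
    """Hypothetical memory that counts words crossing the bus to the host."""

    arrays: Dict[str, List[float]] = field(default_factory=dict)
    bus_transfers: int = 0

    def host_read(self, name: str, i: int) -> float:
        self.bus_transfers += 1  # one word travels from memory to the host
        return self.arrays[name][i]

    def host_write(self, name: str, i: int, value: float) -> None:
        self.bus_transfers += 1  # one word travels from the host to memory
        self.arrays[name][i] = value

    def call(self, op: str, a: str, b: str, out: str) -> None:
        """Model of a single call instruction: the loop runs inside memory."""
        self.bus_transfers += 1  # only the command itself crosses the bus
        ops = {"add": lambda x, y: x + y, "mul": lambda x, y: x * y}
        fn = ops[op]
        self.arrays[out] = [fn(x, y) for x, y in zip(self.arrays[a], self.arrays[b])]


def host_add(mem: PimMemory, a: str, b: str, out: str) -> None:
    """Conventional path: every operand and every result crosses the bus."""
    n = len(mem.arrays[a])
    mem.arrays.setdefault(out, [0.0] * n)
    for i in range(n):
        mem.host_write(out, i, mem.host_read(a, i) + mem.host_read(b, i))


n = 1024
host = PimMemory({"a": [1.0] * n, "b": [2.0] * n})
host_add(host, "a", "b", "c")
pim = PimMemory({"a": [1.0] * n, "b": [2.0] * n})
pim.call("add", "a", "b", "c")
print(host.bus_transfers, pim.bus_transfers)
```

For 1,024 elements the host path generates 3,072 bus transfers while the in-memory path generates one. The absolute numbers mean little in such a simplified model; the point is that host traffic grows with the size of the data, whereas the call does not.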

The processor in the Samsung HBM-PIM

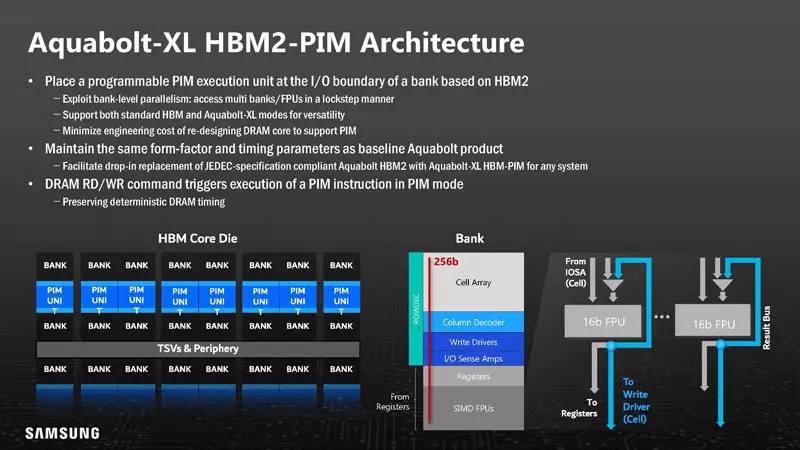

A small CPU has been integrated into each of the chips in an HBM-PIM stack. This reduces storage capacity, since transistors that would otherwise go to memory cells are assigned instead to the logic gates that make up the integrated processor, which, as we mentioned earlier, is a very simple one.

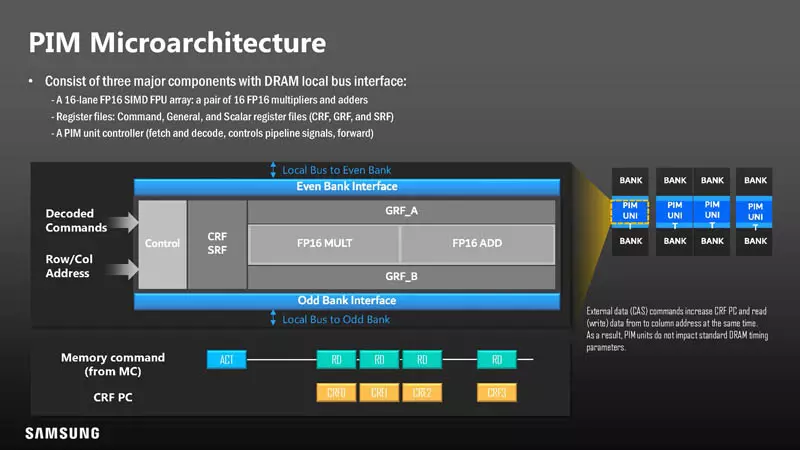

- It does not use any known ISA, but its own, with only nine instructions in total.

- It has two groups of 16 floating-point units, each with 16-bit precision: the first group performs additions and the second performs multiplications.

- Its execution unit is of the SIMD type, so we are dealing with a vector processor.

- Its arithmetic capabilities are: A + B, A * B, (A + B) * C, and (A * C) + B.

- Energy consumption per operation is 70% lower than if the CPU performed the same task, which reflects the relationship between energy consumption and distance to the data.

- Samsung has named this small processor the PCU (Programmable Computing Unit).

- Each processor can work either with only the memory chip it belongs to or with the entire stack. The units in an HBM-PIM can also work together to accelerate the algorithms or programs that require it.
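The operations listed above can be sketched as 16-lane vector functions, with each result rounded to IEEE 754 half precision through the standard `struct` module's `'e'` format. This is an illustrative model built only from the article's description (16 lanes, FP16, A + B, A * B, (A + B) * C and (A * C) + B); the function names are hypothetical and do not correspond to the PCU's actual instruction set.

```python
import struct
from typing import List

LANES = 16  # assumed SIMD width, from the "16 floating-point units" per group


def fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def _check(*vecs: List[float]) -> None:
    """Every operand must fill exactly one 16-lane vector."""
    for v in vecs:
        if len(v) != LANES:
            raise ValueError(f"expected {LANES} lanes, got {len(v)}")


def vadd(a: List[float], b: List[float]) -> List[float]:
    """A + B, lane by lane, rounded to FP16."""
    _check(a, b)
    return [fp16(x + y) for x, y in zip(a, b)]


def vmul(a: List[float], b: List[float]) -> List[float]:
    """A * B, lane by lane, rounded to FP16."""
    _check(a, b)
    return [fp16(x * y) for x, y in zip(a, b)]


def vadd_mul(a: List[float], b: List[float], c: List[float]) -> List[float]:
    """(A + B) * C, rounding the intermediate sum to FP16."""
    return vmul(vadd(a, b), c)


def vmul_add(a: List[float], b: List[float], c: List[float]) -> List[float]:
    """(A * C) + B, rounding the intermediate product to FP16."""
    return vadd(vmul(a, c), b)


ones, twos, halves = [1.0] * LANES, [2.0] * LANES, [0.5] * LANES
print(vadd_mul(ones, twos, halves)[0], vmul_add(ones, twos, halves)[0])
print(fp16(0.1))  # FP16 cannot represent 0.1 exactly
```

Because the combined operations are built from two rounded steps, they model a separate add and multiply rather than a fused operation; the article does not say whether the real PCU rounds the intermediate value.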

As its simplicity suggests, it is not suited to running complex programs. Samsung instead promotes it as a unit for accelerating machine learning algorithms, but it cannot handle complex models either, since it is a vector processor rather than a tensor processor. Its capabilities in this field are therefore very limited and focused on tasks that do not require much computing power, such as speech recognition, text and audio translation, and so on. Let's not forget that its computing capacity is 1.2 TFLOPS.
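Why a vector unit can still help with machine learning: many inference workloads reduce to matrix-vector products, and those can be built entirely from 16-wide multiply-accumulate steps. The following sketch assumes the 16-lane width from the list above and ignores FP16 rounding for clarity; `gemv_16_lane` is a hypothetical helper, not part of any Samsung software.

```python
from typing import List

LANES = 16  # assumed SIMD width, taken from the article's 16-unit groups


def gemv_16_lane(w: List[List[float]], x: List[float]) -> List[float]:
    """Compute y = W @ x using only 16-wide vector multiply-accumulate steps."""
    n = len(x)
    y: List[float] = []
    for row in w:
        if len(row) != n:
            raise ValueError("every row must have len(x) elements")
        acc = [0.0] * LANES  # one partial sum per lane
        for start in range(0, n, LANES):
            # Slices are copies, so padding them does not modify the inputs.
            chunk_w = row[start:start + LANES]
            chunk_x = x[start:start + LANES]
            pad = LANES - len(chunk_w)
            chunk_w += [0.0] * pad  # zero-pad the last chunk to a full vector
            chunk_x += [0.0] * pad
            # One vector multiply-add per chunk: acc = (w * x) + acc
            acc = [a * b + c for a, b, c in zip(chunk_w, chunk_x, acc)]
        y.append(sum(acc))  # horizontal reduction across the 16 lanes
    return y


print(gemv_16_lane([[1.0] * 20, [2.0] * 20], [1.0] * 20))
```

A tensor unit would instead process whole matrix tiles per instruction, which is where the gap in capability mentioned above comes from.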

Are we going to see the HBM-PIM on our PCs?

The applications Samsung cites as examples of HBM-PIM's advantages are already accelerated, and at a higher speed, by other components in our PCs. What is more, the high cost of manufacturing this type of memory already rules out its use in a home computer. And if you are a programmer specializing in artificial intelligence, your computer almost certainly has hardware with far more processing capacity than Samsung's HBM-PIM.

The truth is that focusing on AI seems like a poor choice by the South Korean giant's marketing department. Yes, we are aware that it is the fashionable technology on everyone's lips, but we think HBM-PIM has other markets where it can make better use of its capabilities.

What are these applications? One example is accelerating searches in the large databases that hundreds of companies use every day, and believe us, that is a huge market worth millions of dollars a year. In any case, we do not expect to see it in home computers or in scientific computing, although the still-unfinished HBM3 may inherit some of the ideas behind HBM-PIM.