Artificial intelligence workloads involve processing large amounts of data, and the more data there is to process, the more computing power is needed. This is where dividing the work becomes important, and for that we need a large number of cores. Which processors meet that requirement? There are two candidates: those designed for HEDT (high-end desktop) systems or workstations, and those designed for servers.

Which of the two is better? HEDT CPUs such as the AMD Threadripper, or Intel equivalents such as the workstation Xeon. The reason is that server processors, which are designed to be always on, tend to run at lower clock speeds and boost less aggressively. In addition, their supporting hardware is more expensive and often does not come in a shape and size suited to a desktop.

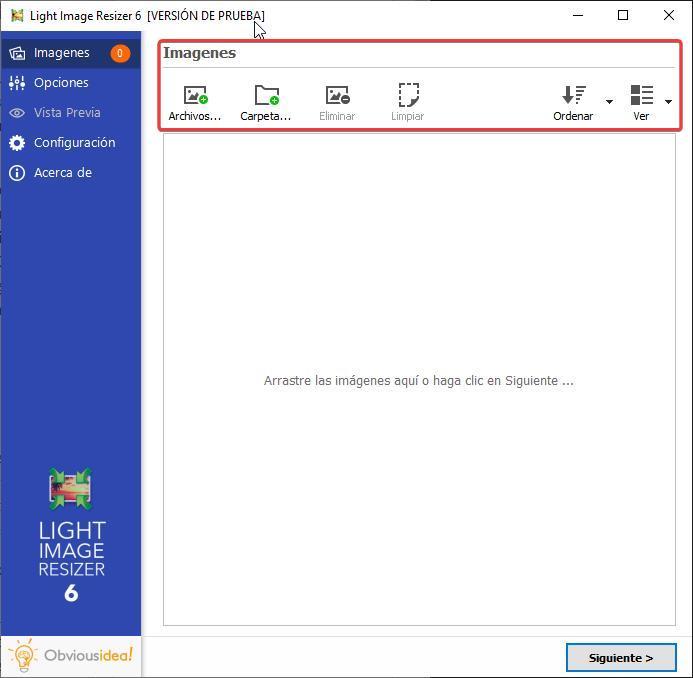

Are CPUs good for AI?

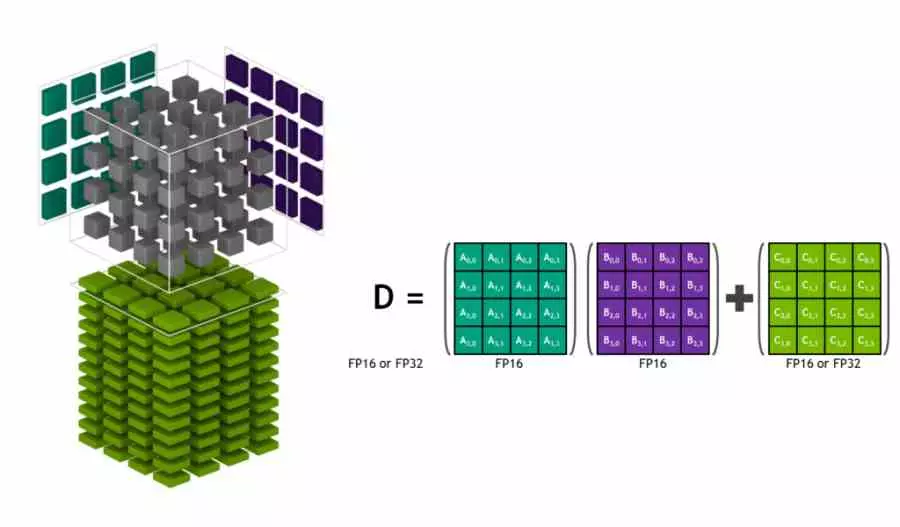

At first glance, no, at least if we rank the different types of processor by how well they execute artificial intelligence algorithms. There is a nuance, however: nowadays CPUs are typically used to execute the scalar parts of the code. What does this mean? Scalar operations are arithmetic operations that work on a single number at a time, while those that work on several numbers at once are sent to coprocessors such as the powerful SIMD units of GPUs or the systolic arrays (Tensor units) recently added to them.
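To make the scalar versus SIMD distinction concrete, here is a minimal conceptual sketch in plain Python. It does not use real SIMD hardware: it only counts how many steps each style would need to add two lists. The lane width `LANES = 4` and both function names are assumptions chosen for illustration.

```python
LANES = 4  # hypothetical SIMD width, for illustration only


def add_scalar(xs, ys):
    """Scalar style: each step adds exactly one pair of numbers."""
    out = []
    steps = 0
    for x, y in zip(xs, ys):
        out.append(x + y)
        steps += 1
    return out, steps


def add_simd_style(xs, ys, lanes=LANES):
    """SIMD style: each step adds up to `lanes` pairs of numbers at once."""
    out = []
    steps = 0
    for i in range(0, len(xs), lanes):
        # On real hardware this whole chunk would be a single instruction.
        out.extend(x + y for x, y in zip(xs[i:i + lanes], ys[i:i + lanes]))
        steps += 1
    return out, steps
```

Both functions return the same sums, but for eight elements the scalar version takes eight steps while the four-lane version takes two. That gap is why the multi-number work is handed to wide units when they are available.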

CPUs built specifically for AI don’t really exist, but in recent years we’ve seen new instructions and execution units that make CPUs much better at executing such algorithms. For example, it is common to work with low-precision data, with values that are 16, 8 or even 4 bits wide. Instructions for these formats used to be uncommon on CPUs, but they have been added in response to demand, especially in SIMD units.
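As an illustration of what low precision means in practice, the following sketch maps floating-point values to 8-bit integers and back. It assumes a simple symmetric scheme with a single scale factor per list, which is one common approach among several; the function names are hypothetical and not taken from any particular library.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    # Round to the nearest step and clamp to the signed 8-bit range used here.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the 8-bit integers."""
    return [q * scale for q in quantized]
```

Each value now fits in one byte instead of four, so the same SIMD register or memory transfer carries four times as many numbers as with 32-bit floats, at the cost of a small rounding error.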

Tensor Units or Systolic Arrays in CPU

Many deep learning problems are solved with so-called Tensor units or systolic arrays, whose inner workings we will not go into in this article. What we will point out is that neural processors, the Tensor units of NVIDIA GPUs and Intel’s new AMX units are all systolic arrays. AMX debuted in the Sapphire Rapids generation of Xeon processors, so those are the Intel processors best prepared to execute artificial intelligence algorithms, as long as the applications have been optimized to use these instructions.

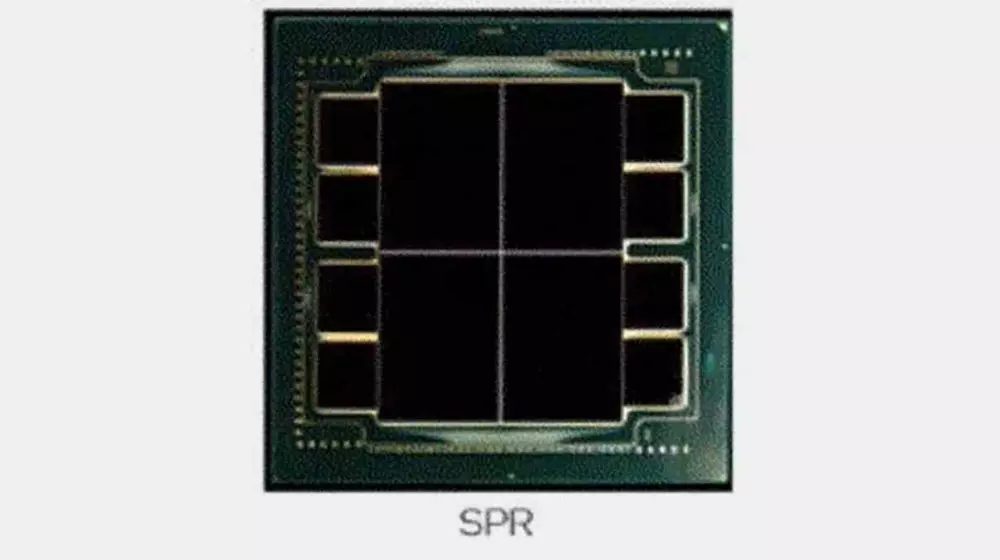

However, because these instructions move large volumes of data, they require a lot of bandwidth, and AMD and Intel have each tackled this problem in their own way. AMD has enlarged the last-level cache (LLC) with V-Cache to improve performance. Intel, on the other hand, pairs some of its Xeon models with HBM memory to get the most out of the AMX unit.

Whichever processor you choose, we recommend pairing it with RAM that offers good bandwidth. This is another reason we recommend an HEDT-type processor: their motherboards usually offer four and up to eight memory channels, which means two to four times the memory bandwidth of a dual-channel desktop PC.
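The bandwidth comparison can be checked with simple arithmetic. The sketch below estimates theoretical peak bandwidth as channels × transfers per second × bytes per transfer. It assumes 64-bit memory channels and the same memory speed on every platform; the 4800 MT/s figure in the usage note is only an illustrative value, and real-world throughput will be lower than these peaks.

```python
def peak_bandwidth_gbs(channels, mega_transfers_per_s, bus_width_bits=64):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 1e9 bytes)."""
    bytes_per_transfer = bus_width_bits // 8
    transfers_per_s = mega_transfers_per_s * 1e6
    return channels * transfers_per_s * bytes_per_transfer / 1e9
```

With memory running at 4800 MT/s, `peak_bandwidth_gbs(2, 4800)` gives about 76.8 GB/s for a dual-channel desktop, while four and eight channels give about 153.6 GB/s and 307.2 GB/s, that is, two and four times as much.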

Isn’t a GPU better for AI?

Yes, it is, but nothing beats a powerful workstation processor and a powerful graphics card working together. Let’s not forget that for some time now, especially in NVIDIA’s case, graphics processors have been evolving with optimizations for executing artificial intelligence algorithms based on different types of machine learning. However, there is strength in unity, and since a system is only as powerful as its weakest part, choosing a desktop CPU means a cut not only in processing performance but also in memory.

In the midst of a shortage of graphics cards, we have to look for alternative solutions, and Intel and AMD in the x86 world are not the only ones aware of this. The ARM world is also homing in on AI-oriented CPU designs with high core counts and high bandwidth. Examples include processors from Nuvia (now part of Qualcomm) and Ampere, as well as the Fujitsu A64FX, which mark a new paradigm in the face of new needs.

So, if you are looking to buy a PC to run your artificial intelligence algorithms and you can’t find high-end graphics cards available, an HEDT or workstation CPU is the best option, even more so when it has specialized units for the job.