Apple wants to fight the sharing of child pornography images with a new kind of tool that it says protects privacy. To win over skeptics, the company has detailed how its system works.

Apple will soon deploy a new tool dedicated to fighting child sexual abuse on all its devices. Nicknamed NeuralMatch, it will analyze photos uploaded to iCloud (Apple’s online storage service) to detect child pornography and, where necessary, alert the authorities.

Although data protection specialists have criticized the tool, Apple says on its website that it is “designed with user privacy in mind.” To reassure critics of its approach, the company also offers some explanation of how NeuralMatch works technically.

How does NeuralMatch work?

To detect illegal photos, Apple will compare an image’s digital signature with those listed in a database provided by the National Center for Missing and Exploited Children (NCMEC), an American organization dedicated to protecting children.

When a photo is about to be uploaded to iCloud, the owner’s iPhone or iPad will compare its signature with the database stored on the device. If the signature matches an image listed in the database, the device generates a kind of encrypted “alert ticket” (Apple calls it a “safety voucher”). Once the number of accumulated tickets crosses a certain threshold, Apple is alerted and can then review the photos, block the offending account if warranted, and notify NCMEC.
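
Apple’s technical summary attributes this threshold behavior to a technique called threshold secret sharing: each ticket carries one piece of a decryption key, and the pieces are useless until enough of them exist. As a minimal sketch of the general idea (not Apple’s implementation), here is Shamir’s secret sharing over a prime field. The modulus, the threshold of 3 and the integer key are illustrative assumptions.

```python
import secrets

# Field modulus: the Mersenne prime 2**127 - 1 (an illustrative choice, not Apple's).
PRIME = 2**127 - 1


def make_shares(secret: int, threshold: int, count: int) -> list[tuple[int, int]]:
    """Split `secret` into `count` shares so that any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must lie in [0, PRIME)")
    if not 1 <= threshold <= count:
        raise ValueError("need 1 <= threshold <= count")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        # Evaluate the polynomial at x with Horner's rule, modulo PRIME.
        y = 0
        for c in reversed(coeffs):
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the shares at x = 0 to get the constant term back."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical example: one share travels with each "alert ticket", threshold = 3.
account_key = 123456789
tickets = make_shares(account_key, threshold=3, count=10)
print(recover_secret(tickets[:3]) == account_key)  # True
print(recover_secret(tickets[:2]) == account_key)  # False (except with negligible probability)
```

With two shares, interpolation yields an essentially random field element, which is the property that keeps ticket contents unreadable below the threshold.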

To limit false positives and prevent any malicious manipulation, Apple explains, the NCMEC database is stored on each device in encrypted form. Detection therefore takes place on the device itself rather than in Apple’s cloud, and only when a photo is uploaded to iCloud. If sync is turned off, Apple performs no scanning.
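
The flow described in this section (compare on the device, count matches, alert past a threshold, skip everything when sync is off) can be sketched as follows. This is a simplified illustration under stated assumptions: `image_signature`, `Device` and `handle_photo` are hypothetical names, SHA-256 stands in for Apple’s perceptual hash, and the device here counts its own matches, whereas Apple says its real protocol (private set intersection) hides the match result even from the device.

```python
import hashlib
from dataclasses import dataclass


def image_signature(image_bytes: bytes) -> str:
    # Placeholder: SHA-256 only matches byte-identical files; the real system
    # uses a perceptual hash that tolerates small edits.
    return hashlib.sha256(image_bytes).hexdigest()


@dataclass
class Device:
    known_signatures: set[str]  # hypothetical on-device copy of the database
    threshold: int              # hypothetical number of tickets before an alert
    sync_enabled: bool = True   # iCloud sync on or off
    tickets: int = 0            # "alert tickets" accumulated so far

    def handle_photo(self, image_bytes: bytes) -> bool:
        """Process a photo headed for iCloud; return True once the threshold is reached."""
        if not self.sync_enabled:
            # No iCloud upload, so no comparison at all.
            return False
        if image_signature(image_bytes) in self.known_signatures:
            # Stand-in for generating an encrypted ticket (here, just a counter).
            self.tickets += 1
        return self.tickets >= self.threshold


database = {image_signature(b"known-image-1"), image_signature(b"known-image-2")}
phone = Device(known_signatures=database, threshold=2)
print(phone.handle_photo(b"holiday-photo"))  # False
print(phone.handle_photo(b"known-image-1"))  # False (1 ticket)
print(phone.handle_photo(b"known-image-2"))  # True (threshold reached)
```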

Safeguards under criticism

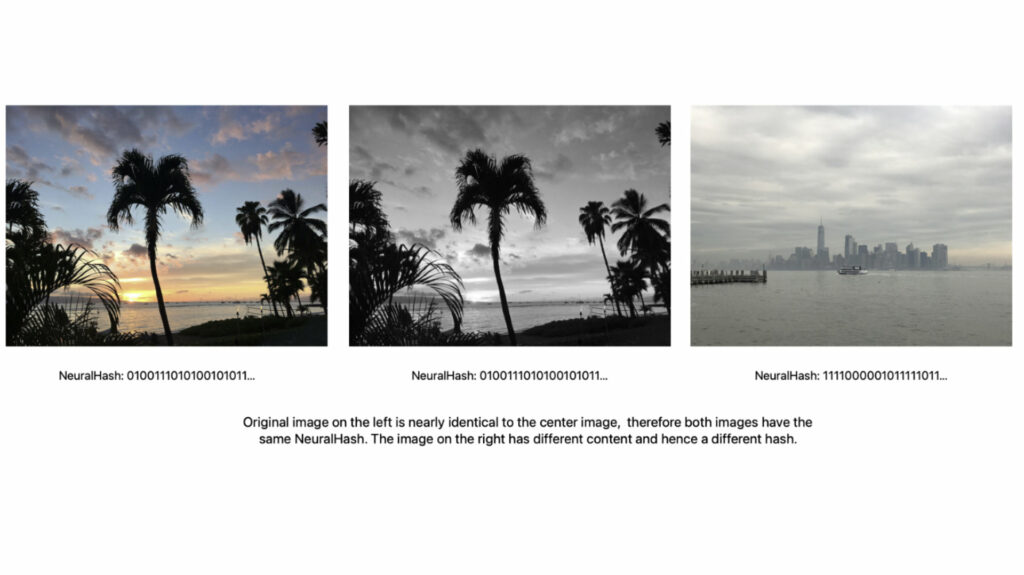

Rather than scanning the raw content of an image, the phone will “analyze an image and convert it to a unique number specific to that image.” Turning the image into a number lets the phone compare it quickly against the database without revealing much about what the image contains. “Apple does not learn anything about images that do not match the database,” the company explains in its technical documentation. NeuralMatch is robust enough to recognize two versions of a photo that differ only slightly (cropping, loss of quality) and assign them the same signature.
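
Why a slightly altered photo can keep the same signature is easiest to see with a perceptual hash. Apple’s system uses a neural-network-based hash; the sketch below swaps in a much simpler classic technique, the average hash, applied to a hypothetical 8×8 grayscale image. Each of the 64 bits records whether a pixel is brighter than the image’s mean, so a uniform darkening or faint noise leaves the bits unchanged, while a cryptographic hash such as SHA-256 changes completely. (This toy hash is not robust to cropping; that takes a more elaborate design.)

```python
import hashlib


def average_hash(pixels: list[list[int]]) -> int:
    """64-bit average hash of an 8x8 grayscale image (values 0-255)."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        # One bit per pixel: 1 if brighter than the mean, else 0.
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; perceptual hashes are compared this way."""
    return bin(a ^ b).count("1")


def sha256_of(pixels: list[list[int]]) -> str:
    """Cryptographic hash of the raw pixel bytes, for comparison."""
    return hashlib.sha256(bytes(p for row in pixels for p in row)).hexdigest()


# Hypothetical test image: a simple gradient with no pixel equal to the mean.
original = [[10 * r + 20 * c + 5 for c in range(8)] for r in range(8)]
darker = [[p - 5 for p in row] for row in original]  # uniform drop in brightness
noisy = [[p + (1 if (r + c) % 2 else -1) for c, p in enumerate(row)]
         for r, row in enumerate(original)]  # faint checkerboard noise

print(hamming(average_hash(original), average_hash(darker)))  # 0
print(hamming(average_hash(original), average_hash(noisy)))   # 0
print(sha256_of(original) == sha256_of(darker))               # False
```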

Finally, Apple states that as long as the “alert ticket” threshold is not crossed, it cannot access the tickets’ contents. Apple does not specify how many matches it takes for an account to be flagged, but claims the system carries only a “one in a trillion” risk of producing false positives. In the event of an error, users will be able to appeal so that their account is not suspended.
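
A threshold is what makes such a low false-positive figure plausible. Apple does not publish its threshold or per-image error rate, so every number below is hypothetical. Assuming each photo independently produces a false match with probability p, the number of false tickets in an account of n photos follows a binomial distribution, and the chance of reaching threshold t is the tail probability P(X ≥ t). The function `prob_at_least` is an illustrative name.

```python
from math import comb, exp, log, log1p


def prob_at_least(t: int, n: int, p: float) -> float:
    """P(X >= t) for X ~ Binomial(n, p), assuming independent false matches."""
    if t <= 0:
        return 1.0
    if t > n:
        return 0.0
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    # First tail term, computed in log space to avoid overflow/underflow.
    term = exp(log(comb(n, t)) + t * log(p) + (n - t) * log1p(-p))
    total = 0.0
    for k in range(t, n + 1):
        total += term
        # Ratio between consecutive binomial terms: P(k+1) / P(k).
        term *= (n - k) / (k + 1) * p / (1 - p)
    return total


# Hypothetical account: 10,000 photos, one-in-a-million false match rate per photo.
for threshold in (1, 5, 10):
    print(threshold, prob_at_least(threshold, 10_000, 1e-6))
```

With these made-up figures, flagging on a single match would catch roughly 1 in 100 innocent accounts of that size, while requiring 10 matches pushes the probability below 1e-26, far under one in a trillion.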

Signature-based detection of child pornography images is not exactly new: Apple was already running similar checks on photos stored in iCloud. NeuralMatch nevertheless worries data protection specialists because it runs locally on the phone, opening the door to potential abuse. The Electronic Frontier Foundation (EFF), a well-known nonprofit dedicated to defending rights on the Internet, calls the tool a “backdoor” that authoritarian governments could use to detect, among other things, content hostile to those in power.