The concept of interlaced video is closely tied to cathode ray tube (CRT) screens, which is why it rarely comes up when we talk about contemporary hardware. Although the first LCD screens supported interlaced resolutions, it did not take long to drop them, because digital video signals had enough bandwidth to make this video mode unnecessary.

What is interlaced video?

For a long time the video standards par excellence were NTSC and PAL. We will focus mainly on the former because, much as it pains us, the advances that made home computing possible did not take place in Europe. To take advantage of the television's ability to act as a real-time information terminal, the first terminals for the minicomputers of the time were built around a modified television without a radio frequency receiver.

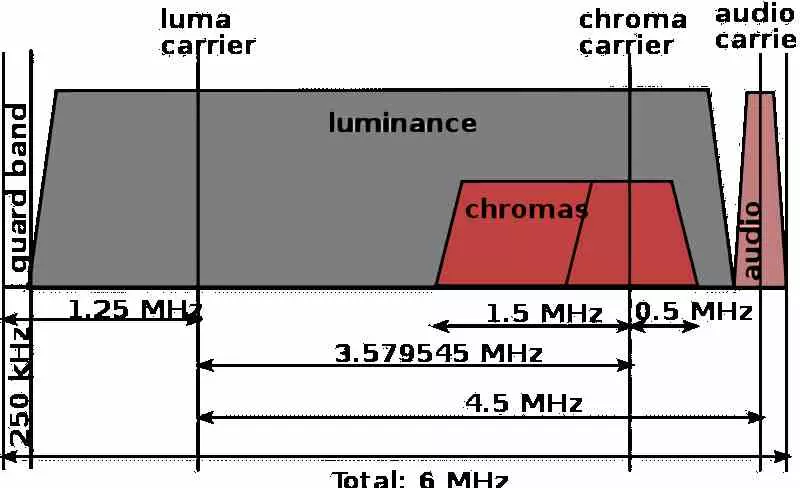

So in the late 60s and early 70s the first computer screens began to appear. However, it soon became clear that there was a bottleneck: the bandwidth of the video signal limited the refresh rate that could be reached. The answer was interlaced video, in which the video system draws the odd and even lines in separate passes, called fields. Although each field carries only half the vertical resolution, the number of images drawn per second is doubled, all without increasing the bandwidth of the video signal.

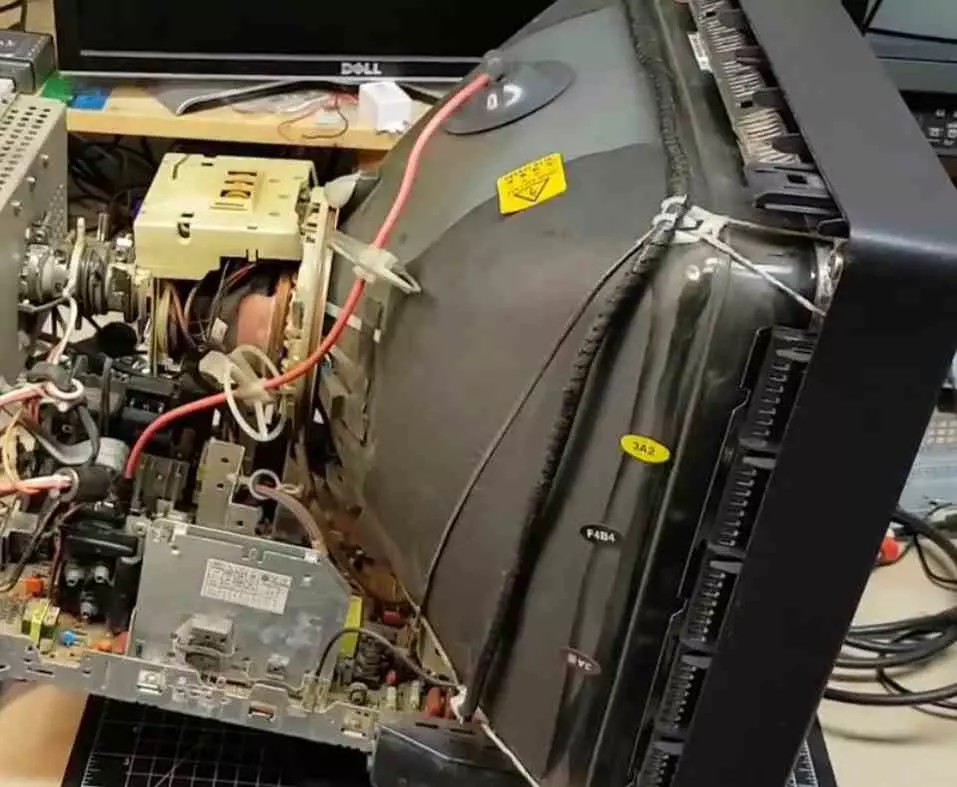
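
The odd/even split described above can be sketched in a few lines of Python. This is only an illustration: the function name `split_into_fields` and the toy 8-line frame are assumptions made for the example, not part of any video standard.

```python
def split_into_fields(frame):
    """Split a progressive frame (a list of scan lines) into two fields.

    Lines are numbered from 1, so the odd field holds lines 1, 3, 5, ...
    and the even field holds lines 2, 4, 6, ...
    """
    odd_field = frame[0::2]   # lines 1, 3, 5, ... (list indices 0, 2, 4, ...)
    even_field = frame[1::2]  # lines 2, 4, 6, ... (list indices 1, 3, 5, ...)
    return odd_field, even_field


# A toy 8-line frame; a real frame would hold pixel data for each line.
frame = [f"line {n}" for n in range(1, 9)]
odd_field, even_field = split_into_fields(frame)
print(odd_field)   # ['line 1', 'line 3', 'line 5', 'line 7']
print(even_field)  # ['line 2', 'line 4', 'line 6', 'line 8']
```

Each field carries half of the lines, so sending one field per refresh costs half the data of a full frame.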

The operation of a CRT display

Think of a CRT monitor as a kind of printer. When a sheet is printed, the head moves from left to right to print a line; when it finishes, the paper advances and the head returns to the left. A CRT screen is similar: the electron beam sweeps the screen from left to right and from top to bottom. When the frame has been drawn, the beam returns to its initial position to draw the next one.

This happens so quickly that our eyes perceive all those lines as a single image. If we could observe our surroundings at a much higher frequency, we would not see an image but only the scan line the electron beam has just passed over at each moment. The CRT thus exploits a limitation of our visual system and our brain to create the illusion of a complete picture.

NTSC and PAL standards

A standard is a set of parameters and specifications that every manufacturer adhering to it must comply with. In the case of NTSC and PAL, manufacturers had to adapt their screens to the following timings:

- The refresh rate: nominally 60 Hz for NTSC in interlaced mode (60 fields per second) and 30 Hz in progressive mode (30 complete frames per second). In PAL, 50 and 25 Hz respectively.

- The number of scan lines: 525 per complete frame, or 262.5 per field in interlaced mode, for NTSC. In PAL they were 625 and 312.5. In interlaced mode the last half line was not displayed.

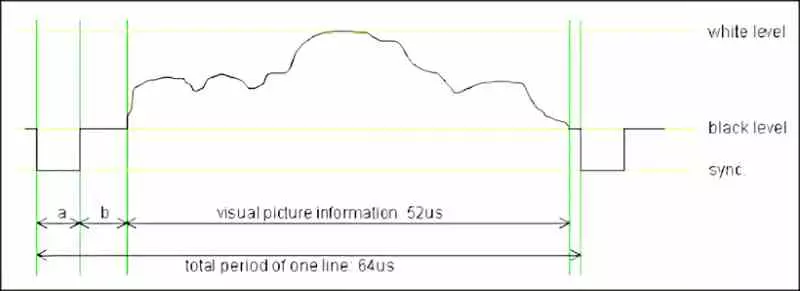

- The time the electron beam took to cross a line was the same in both modes, but it differs between the two standards. In NTSC a complete line lasts about 63.5 microseconds, of which the first 10.9 microseconds are used for repositioning the beam. In the European standard, the figures are 64 microseconds and 12 microseconds respectively.
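
The figures in this list are tied together by simple arithmetic, which the following sketch works through for PAL. The helper name `derive_timings` and its parameter names are made up for this example; the input values are the PAL figures quoted above.

```python
def derive_timings(line_us, blanking_us, lines_per_frame):
    """Derive scan rates from a line period given in microseconds."""
    line_rate_hz = 1_000_000 / line_us              # lines drawn per second
    frame_rate_hz = line_rate_hz / lines_per_frame  # complete frames per second
    return {
        "active_us": line_us - blanking_us,  # visible part of each line
        "line_rate_hz": line_rate_hz,
        "frame_rate_hz": frame_rate_hz,
        "field_rate_hz": frame_rate_hz * 2,  # two fields per interlaced frame
    }


# PAL: 64 us per line, 12 us of repositioning, 625 lines per frame.
pal = derive_timings(line_us=64.0, blanking_us=12.0, lines_per_frame=625)
print(pal)
# {'active_us': 52.0, 'line_rate_hz': 15625.0, 'frame_rate_hz': 25.0, 'field_rate_hz': 50.0}
```

With the PAL figures this gives 15,625 lines per second, which works out to 25 complete frames or 50 fields per second, matching the refresh rates in the list. The same function can be fed the NTSC figures.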

Graphics hardware that drives CRT screens has to take these timings into account, and it usually integrates a series of binary counters that keep track of them to know when to send the video signal and when not to. This is completely different from how LCD screens operate.
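
As a rough idea of how such counters work, here is a toy software model. It is a sketch under simplifying assumptions: the class `VideoTimingCounters`, its parameters, and the tiny tick and line counts are all invented for the example and do not correspond to any real chip or standard.

```python
class VideoTimingCounters:
    """Toy model of the horizontal and vertical counters in a video chip."""

    def __init__(self, ticks_per_line, blank_ticks, lines_per_field, blank_lines):
        self.ticks_per_line = ticks_per_line    # clock ticks in one full line
        self.blank_ticks = blank_ticks          # ticks spent repositioning per line
        self.lines_per_field = lines_per_field  # lines in one full field
        self.blank_lines = blank_lines          # lines spent returning to the top
        self.h = 0  # horizontal counter: position within the current line
        self.v = 0  # vertical counter: current line within the field

    def video_active(self):
        """True when the beam is in the visible area and pixels may be sent."""
        return self.h >= self.blank_ticks and self.v >= self.blank_lines

    def tick(self):
        """Advance one clock tick, wrapping the counters as hardware would."""
        self.h += 1
        if self.h == self.ticks_per_line:
            self.h = 0
            self.v += 1
            if self.v == self.lines_per_field:
                self.v = 0


# Made-up miniature timings: 8 ticks per line (2 blank), 4 lines (1 blank).
counters = VideoTimingCounters(ticks_per_line=8, blank_ticks=2,
                               lines_per_field=4, blank_lines=1)
active_ticks = 0
for _ in range(8 * 4):  # step through exactly one field
    if counters.video_active():
        active_ticks += 1
    counters.tick()
print(active_ticks)  # 18: 6 visible ticks on each of 3 visible lines
```

The video signal is only sent while `video_active()` is true; the rest of the time the hardware waits for the beam to reposition.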

Interlaced video on LCD and OLED displays

We have to start from an important point: in an LCD screen there is no electron beam that generates the image. Instead, the image is created by lighting the hundreds of thousands, and nowadays tens of millions, of points that make up the screen. So the periods in which the electron beam repositions itself for the next scan line or the next frame would, in principle, not need to exist.

However, because of the way the television signal was transmitted, and because there had to be a transition period from one type of television to the other, LCD controllers were designed to display the scan lines one by one, as CRTs did. To do this, a delay corresponding to the electron beam repositioning intervals is added.

In any case, today's video signals are fast enough that there is no need to rely on tricks like interlacing. They allow images to be displayed at a high refresh rate without sacrificing vertical resolution.

The problem of deinterlacing

If you connect an old console, or a VHS or DVD player, that outputs an interlaced analog video signal to an LCD or OLED screen, you will see a huge drop in image quality compared to how it looked on a CRT. And no, we are not referring only to color reproduction and contrast level, but to a problem called deinterlacing.

Deinterlacing is not a standardized process but a family of algorithms that reconstruct a progressive image from an interlaced one. They often end up producing video with heavy flickering, noticeably lower image quality, and a series of artifacts that spoil the overall experience.
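
To give an idea of what these algorithms do, the sketch below shows two of the simplest approaches, commonly known as weave and bob. It is a minimal illustration, not what any particular television or scaler implements. Each scan line is assumed to be a list of brightness values, and the sample data is invented.

```python
def weave(odd_field, even_field):
    """Interleave two fields into one frame.

    Works well for static images, but moving objects show comb-like
    artifacts because the two fields were captured at different times.
    """
    frame = []
    for odd_line, even_line in zip(odd_field, even_field):
        frame.append(odd_line)
        frame.append(even_line)
    return frame


def bob(field):
    """Stretch a single field to full height by filling in the missing lines.

    Each missing line is the average of the lines above and below it;
    the last line is simply repeated. No combing, but half the detail.
    """
    frame = []
    for i, line in enumerate(field):
        frame.append(line)
        if i + 1 < len(field):
            below = field[i + 1]
            frame.append([(a + b) / 2 for a, b in zip(line, below)])
        else:
            frame.append(list(line))
    return frame


odd_field = [[0, 0], [100, 100]]     # lines 1 and 3 of a 4-line frame
even_field = [[50, 50], [150, 150]]  # lines 2 and 4
print(weave(odd_field, even_field))  # [[0, 0], [50, 50], [100, 100], [150, 150]]
print(bob(odd_field))                # [[0, 0], [50.0, 50.0], [100, 100], [100, 100]]
```

Neither approach is free of trade-offs, which is why more elaborate methods try to detect motion and choose between the two region by region.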

Today there are intermediate devices designed to solve this problem, as well as deep learning algorithms that can reconstruct the old interlaced video signal correctly on an LCD screen. As for the PC, interlaced mode disappeared when graphics cards dropped VGA and DVI, which is only natural: CRT monitors are no longer sold and our video signals are no longer limited by bandwidth.