Price aside, every variant of High Bandwidth Memory (HBM) comes close to being the ideal type of memory: it takes up little space, consumes little energy per bit transferred, and has much lower latency than DDR5 and GDDR memory. The low latency is thanks to the use of an interposer on which both the processor and the HBM stacks have to be mounted.

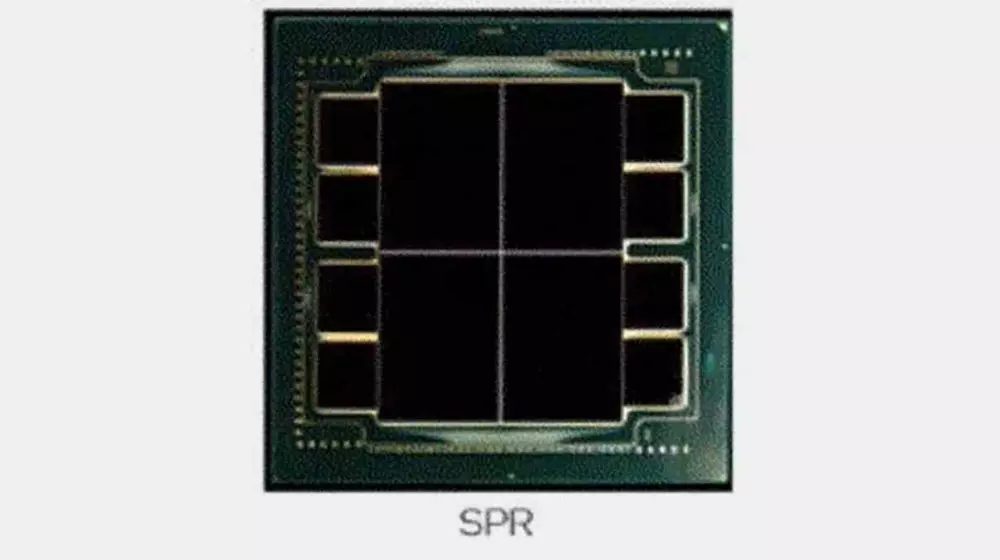

Intel Xeon Sapphire Rapids with HBM2E memory

The interposer makes a CPU or GPU with this type of memory much more expensive to build, and in the case of a CPU it also greatly limits expandability, since the on-package RAM cannot be upgraded. So far we have not seen HBM outside of GPUs for high-performance computing such as AMD Instinct and NVIDIA Tesla. However, Intel has Xeon Sapphire Rapids processors with this type of memory in the pipeline, and we have finally been able to see the first image of one of them.

The first thing to remember is that the server CPUs Intel will launch in 2022 are not built from a single monolithic die but from several dies that communicate with each other through a common interposer. The reason is that the larger a chip is, the higher the share of defective units that come out of manufacturing, and there is also a hard limit on how large a single chip can be. By dividing the circuitry into smaller chips, Intel can build composite processors that go beyond the size limit of a single chip while getting more good chips per wafer.
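The yield argument can be illustrated with a simple Poisson defect model, in which the fraction of defect-free dies falls exponentially with die area. The sketch below is only an illustration: the defect density and die areas are hypothetical values chosen for the example, not figures from Intel, and `poisson_yield` is a name made up here.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under a simple Poisson defect model."""
    area_cm2 = area_mm2 / 100.0  # 100 mm^2 in one cm^2
    return math.exp(-defects_per_cm2 * area_cm2)


# Hypothetical values, for illustration only (not Intel data).
DEFECTS_PER_CM2 = 0.1
MONOLITHIC_AREA_MM2 = 800.0
TILE_COUNT = 4
TILE_AREA_MM2 = MONOLITHIC_AREA_MM2 / TILE_COUNT

monolithic_yield = poisson_yield(MONOLITHIC_AREA_MM2, DEFECTS_PER_CM2)
tile_yield = poisson_yield(TILE_AREA_MM2, DEFECTS_PER_CM2)

print(f"monolithic die yield: {monolithic_yield:.1%}")
print(f"single tile yield:    {tile_yield:.1%}")
```

Because defective tiles can be discarded individually before packaging, the share of usable silicon follows the per-tile yield rather than the yield of the full monolithic area. The model ignores the cost and yield of the packaging step itself.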

The use of HBM2E memory in Sapphire Rapids, however, is due to the inclusion of AMX (Advanced Matrix Extensions) units among the execution units of each core. As their name indicates, these units are designed for matrix calculations, which are typical of some artificial intelligence algorithms. Units of this type work on very large data sets, which is why they need memory with higher bandwidth than DDR, as is the case with HBM2E.

As you can see, Intel has built a Xeon Sapphire Rapids composed of 8 tiles, something we did not expect given that the standard model has 4. Each tile has a 2048-bit HBM2E interface, which lets it communicate with two HBM2E packages, each with a 1024-bit interface and each housing a stack of 4 or 8 DRAM dies. The bandwidth this provides? 3.68 TB/s in total, although for a capacity of only 128 GB.
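These figures can be cross-checked with a little arithmetic. The sketch below takes the numbers quoted in this article (8 tiles, 2048 bits per tile, two stacks per tile, 3.68 TB/s, 128 GB) and derives the per-pin data rate and per-stack capacity they would imply. It assumes decimal units (1 TB = 10^12 bytes) and that every interface runs at the same rate; the variable names are illustrative only.

```python
# Figures quoted in the article.
TILES = 8
BITS_PER_TILE = 2048
STACKS_PER_TILE = 2
TOTAL_BANDWIDTH_TB_S = 3.68
TOTAL_CAPACITY_GB = 128

# Aggregate bus width and number of HBM2E stacks.
total_bus_bits = TILES * BITS_PER_TILE
total_stacks = TILES * STACKS_PER_TILE

# Assumption: decimal units, so 1 TB/s = 1e12 bytes/s = 8e12 bits/s.
total_bits_per_s = TOTAL_BANDWIDTH_TB_S * 1e12 * 8
per_pin_gbit_s = total_bits_per_s / total_bus_bits / 1e9

# Assumption: bandwidth and capacity are spread evenly across stacks.
per_stack_bandwidth_gb_s = TOTAL_BANDWIDTH_TB_S * 1000 / total_stacks
per_stack_capacity_gb = TOTAL_CAPACITY_GB / total_stacks

print(f"total bus width:     {total_bus_bits} bits")
print(f"HBM2E stacks:        {total_stacks}")
print(f"implied per-pin rate: {per_pin_gbit_s:.2f} Gbit/s")
print(f"per-stack bandwidth: {per_stack_bandwidth_gb_s:.0f} GB/s")
print(f"per-stack capacity:  {per_stack_capacity_gb:.0f} GB")
```

Under these assumptions the quoted totals work out to roughly 1.8 Gbit/s per pin and 8 GB per stack.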

In addition, the rumored 56-core count for the highest-end version would rise to 80 cores. Combined with a higher clock frequency and, above all, a larger IPC jump, this could level the balance in servers for the first time since the launch of AMD EPYC, although the red team reportedly plans to raise its own core count to 96. Will it be enough?