Cinematic mode is one of the new modes in the iPhone Camera app. We tested it on the iPhone 13 and iPhone 13 Pro: here’s how it works.

Presented with great fanfare, Cinematic mode is the most visible consumer-facing camera addition of the iPhone 13 (our review) and iPhone 13 Pro (our review). The former can use it with its main camera, whereas the latter can also use it with its 77mm telephoto lens (3x zoom). Put simply, this is a Camera app mode that promises a “cinematic” look. Behind that term lies, above all, the ability to shift focus dynamically between different planes, and therefore to blur whatever is not the subject of the shot, as a professional camera would.

But how does Cinematic mode work? We were able to test it extensively on the iPhone 13 and iPhone 13 Pro, and we spoke with Vitor Silva, Senior Product Manager for the iPhone, and Johnnie Manzari, a designer on the interface team, to understand its inner workings.

How the Cinematic mode works on the iPhone 13 and 13 Pro

“It’s a mode that really symbolizes the way we work together at Apple,” says Vitor Silva, “because Cinematic mode is a combination of hardware, software, interface, the team that worked on the A15 processor, the team in charge of machine learning, etc.” In short, a truly global project built around the iPhone’s capabilities. And it quickly becomes clear what Vitor Silva means.

Calculating depth

Cinematic mode is first and foremost a process that happens inside the iPhone. The Camera app takes a stereoscopic measurement by comparing the images from two of its lenses, which have different focal lengths and sit slightly apart, so each sees the scene from a slightly different angle (the TrueDepth camera on the front calculates depth differently). To put it simply, it’s as if, by “seeing” far and near at the same time, the iPhone could measure the distance between two points, and therefore the depth between them. This measurement produces a “disparity map” (a depth map), which gives the smartphone raw information: which elements of the shot are at what distance from the lens. All of this happens in real time, several times per second, so that the information is constantly updated.
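The stereoscopic measurement described above corresponds to classic two-camera triangulation. As a minimal sketch, assuming an idealized, rectified pair of pinhole cameras (Apple has not published its pipeline, and the focal length and lens spacing below are purely hypothetical), the depth Z of a point follows from its disparity d in pixels, the focal length f in pixels and the distance B between the two lenses as Z = f·B/d:

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in metres of a point seen by two rectified pinhole cameras: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity would mean a point at infinity (or a failed match).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_map(disparity_map: list[list[float]], focal_px: float, baseline_m: float) -> list[list[float]]:
    """Convert a whole disparity map (rows of pixel disparities) into a depth map."""
    return [[depth_from_disparity(d, focal_px, baseline_m) for d in row] for row in disparity_map]


# Hypothetical camera pair: 1500 px focal length, lenses 12 mm apart.
depths = depth_map([[18.0, 9.0, 6.0]], focal_px=1500.0, baseline_m=0.012)
print(depths)  # [[1.0, 2.0, 3.0]]
```

The inverse relationship is why nearby objects shift much more between the two views than distant ones, and why depth estimates get coarser the farther away a subject is.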

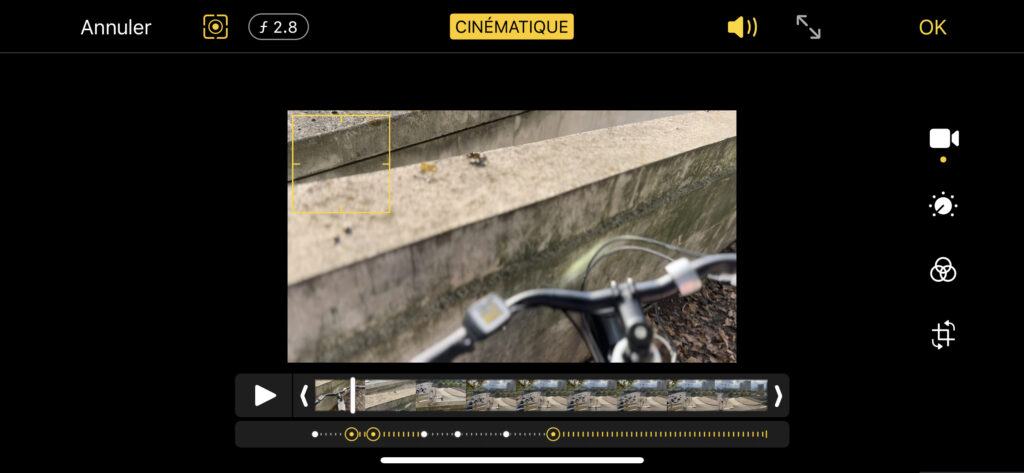

This technical step is only the first part of the job, because at this stage the iPhone can do just one thing: see in depth and identify several planes. The example below shows clearly how this works:

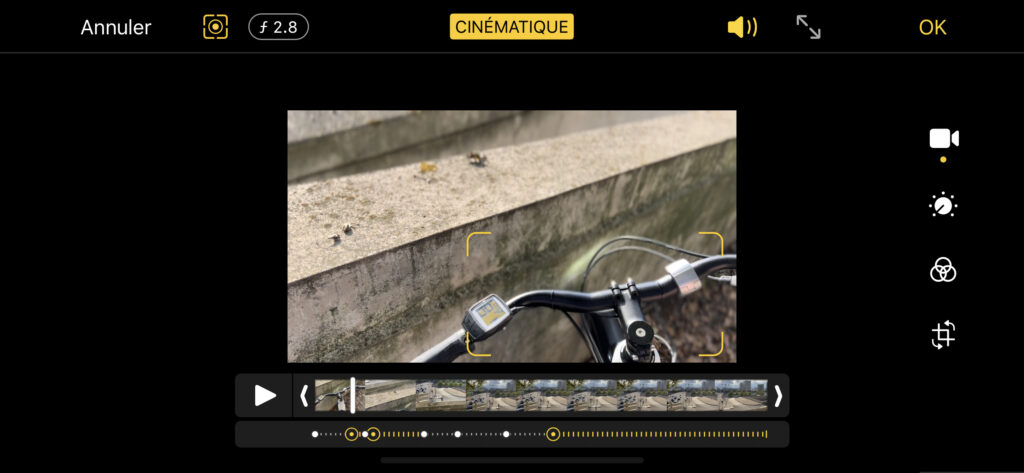

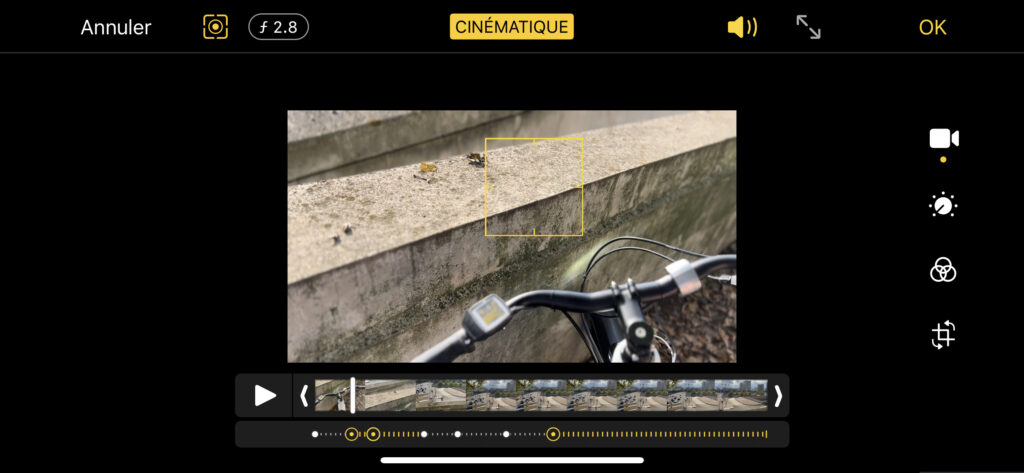

In this example, the iPhone 13 Pro has detected three planes at three different distances: the bike, the first wall and the second wall. Having a notion of distance is useful, but it is not everything. This is where machine learning comes in.
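To illustrate what “spotting planes” in a depth map could mean, here is a deliberately naive sketch: depth samples are sorted, and a new plane starts wherever two neighbouring values are further apart than a threshold. The threshold and the sample depths are invented for the example, and nothing here is claimed to be how the iPhone actually segments a scene.

```python
def group_into_planes(depths_m: list[float], min_gap_m: float = 0.5) -> list[list[float]]:
    """Naively split depth samples into planes, nearest plane first.

    Samples are sorted; a new plane starts whenever the jump from the
    previous sample exceeds min_gap_m (a hypothetical threshold).
    """
    planes: list[list[float]] = []
    for d in sorted(depths_m):
        if planes and d - planes[-1][-1] <= min_gap_m:
            planes[-1].append(d)
        else:
            planes.append([d])
    return planes


# Invented depth samples loosely mimicking the bike / first wall / second wall scene.
samples = [1.1, 1.0, 3.2, 3.0, 6.1, 5.9, 1.2]
planes = group_into_planes(samples)
print(len(planes))                                  # 3
print([round(sum(p) / len(p), 2) for p in planes])  # [1.1, 3.1, 6.0]
```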

Understanding what is happening on screen

To make sure Cinematic mode can be used without any knowledge of video production, Apple set itself the challenge of offering a suitable “automatic” mode. Essentially, the mode has to decide on its own which part of the frame to focus on. If a person enters the frame, it will favor that newcomer. If the planes change rapidly (as in our example above), it will focus on each plane in turn. If a person is speaking, the focus will be on them.

How does it decide? Vitor Silva tells us that Apple built an algorithm training process fed by “hundreds of films” and refined through “discussions with directors”. The goal: “to try to understand what makes the cinematography of a scene”. Like an apprentice director, the algorithm was trained to recognize a change of shot, a transition, someone entering the frame, a reverse shot in a dialogue, and so on. The results of this training were built into the Camera app, and the Apple A15 Bionic processor applies them in real time to what it sees. Of course, this personification is only for the sake of explanation: for computer vision, everything is just numbers, vectors and shapes.
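According to Apple, the focus behaviours listed above are learned rather than hand-coded. Purely to make them concrete, the sketch below swaps in an explicit rule table instead of a trained model: a speaking person wins, then someone who has just entered the frame, otherwise the current target is kept, with the nearest subject as a fallback. The `Subject` fields and this priority order are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Subject:
    name: str
    depth_m: float
    is_speaking: bool = False
    just_entered: bool = False


def _nearest(group: Sequence[Subject]) -> str:
    """Name of the subject closest to the camera."""
    return min(group, key=lambda s: s.depth_m).name


def choose_focus(subjects: Sequence[Subject], current: Optional[str] = None) -> Optional[str]:
    """Hand-written stand-in for a learned focus policy (hypothetical priorities)."""
    if not subjects:
        return current
    speakers = [s for s in subjects if s.is_speaking]
    if speakers:
        return _nearest(speakers)
    newcomers = [s for s in subjects if s.just_entered]
    if newcomers:
        return _nearest(newcomers)
    if current in {s.name for s in subjects}:
        return current  # nothing new happened: hold focus
    return _nearest(subjects)


scene = [Subject("bike", 1.1), Subject("woman", 3.0, is_speaking=True)]
print(choose_focus(scene, current="bike"))  # woman
```

A learned model replaces these brittle if-statements with judgments trained on examples, which is what lets it handle situations no one thought to write a rule for.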

Working on the look of a shot

Once you have the depth and some idea of how a shot is composed in cinema, you still have to process the image. This is where the A15 Bionic’s Neural Engine comes in: it must interpret the complexity of the scene being filmed and apply a realistic bokeh effect. This “blur” effect is quite an art: it is not enough to crudely blur part of the scene; the result has to mimic the way a real optical lens behaves (which probably explains why the mode is limited to 1080p at 30 frames per second: any higher setting would have been too resource-intensive).
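One common way for synthetic bokeh to imitate a real lens is to size each pixel’s blur from its depth using the thin-lens circle-of-confusion formula, c = A·|S₂ − S₁| / S₂ · f / (S₁ − f), where A is the aperture diameter, f the focal length, S₁ the focus distance and S₂ the subject distance. The sketch below applies that formula to a single row of pixels with a simple box blur. The lens values (a 50 mm lens at f/2) and the sensor sampling of 100 px per mm are illustrative assumptions, not iPhone figures, and Apple has not said its renderer works this way.

```python
def circle_of_confusion_mm(subject_m: float, focus_m: float, focal_mm: float, f_number: float) -> float:
    """Thin-lens blur-disc diameter on the sensor, in millimetres."""
    f = focal_mm / 1000.0    # focal length in metres
    aperture = f / f_number  # aperture diameter A in metres
    if focus_m <= f or subject_m <= 0:
        raise ValueError("focus must lie beyond the focal length; subject distance must be positive")
    c = aperture * abs(subject_m - focus_m) / subject_m * f / (focus_m - f)
    return c * 1000.0


def depth_blur_row(pixels: list[float], depths_m: list[float], focus_m: float,
                   focal_mm: float = 50.0, f_number: float = 2.0,
                   px_per_mm: float = 100.0) -> list[float]:
    """Box-blur each pixel over a radius proportional to its circle of confusion."""
    out = []
    n = len(pixels)
    for i, z in enumerate(depths_m):
        coc_px = circle_of_confusion_mm(z, focus_m, focal_mm, f_number) * px_per_mm
        radius = round(coc_px / 2)
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))
    return out


row = [0.0, 0.0, 100.0, 0.0, 0.0]
print(depth_blur_row(row, [2.0] * 5, focus_m=2.0))  # [0.0, 0.0, 100.0, 0.0, 0.0] (in focus)
print(depth_blur_row(row, [4.0] * 5, focus_m=2.0))  # [20.0, 20.0, 20.0, 20.0, 20.0] (background)
```

A per-pixel “gather” blur like this one ignores occlusion: sharp subject pixels can bleed into the blurred background next to them, and thin foreground objects such as leaves are hard to separate cleanly, which hints at why complex shapes are so difficult.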

In the capture below, we can see that Cinematic mode figured out that the patch of ground between the shifter and the handlebars belonged not to the bike but to the farthest plane.

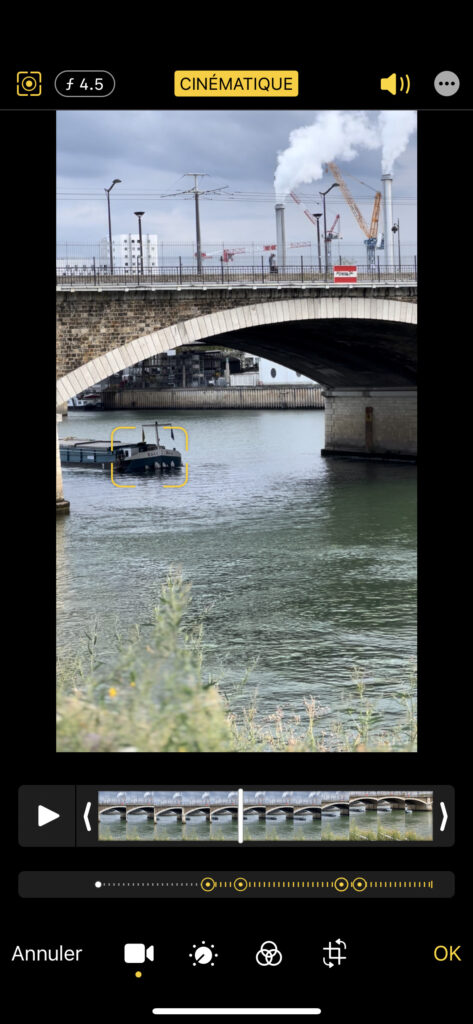

In the capture below, by contrast, we see that when the focus is on the boat arriving on the Seine, the plants in the foreground are not properly blurred at all. Here, the Cinematic process went well right up to the “post-production” stage, a sign that this is one of the most difficult tasks. By way of comparison, remember how long it took for the iPhone’s Portrait mode to become truly convincing. Apple is setting itself the same task here, but with humans, animals, objects, plants, etc., all in real time and on moving images. It is understandable that there are a few hiccups.

Cinematic mode interface and postproduction

Apple wants people to actually use Cinematic mode, so the company sought to design a simple interface for what is, as we have seen, a complex process. There was no question of requiring users to pull focus like the film professionals who were consulted during the feature’s development, Johnnie Manzari says. “We wanted to make it possible for Cinematic mode users to test new things with the iPhone camera,” Manzari continues. That is precisely why all the work done live can also be edited in post-production, directly in the iPhone’s Photos app.

Each tap on the screen adds a “point” to the sequence, corresponding to a change of focus. The user can also very simply select a person or an object on screen to keep the focus on it, wherever it is in the frame. The feature is exemplary in its accessibility.
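The tap-to-refocus editing can be thought of as a list of time-stamped focus keyframes. Here is a minimal sketch using a hypothetical `FocusTrack` class (not an Apple API): each tap inserts a keyframe in time order, and the focus target at any moment is the most recent keyframe at or before it. A target is just a name here; in the real feature it would stand for a tracked subject whose position is followed from frame to frame.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class FocusTrack:
    """Hypothetical model of a clip's focus edits as time-stamped keyframes."""
    default_target: str = "auto"
    times: list[float] = field(default_factory=list)
    targets: list[str] = field(default_factory=list)

    def add_point(self, t: float, target: str) -> None:
        """Record a tap at time t (seconds), keeping keyframes sorted by time."""
        i = bisect_right(self.times, t)
        self.times.insert(i, t)
        self.targets.insert(i, target)

    def target_at(self, t: float) -> str:
        """Return the focus target in effect at time t."""
        i = bisect_right(self.times, t)
        return self.targets[i - 1] if i else self.default_target


track = FocusTrack()
track.add_point(5.0, "second wall")
track.add_point(2.0, "bike")  # taps need not be made in chronological order
print([track.target_at(t) for t in (0.0, 2.0, 4.9, 6.0)])
# ['auto', 'bike', 'bike', 'second wall']
```

Keeping the keyframes sorted makes each lookup a binary search, so scrubbing through a long clip stays cheap.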

Cinematic mode, a general public tool

Let’s face it: Cinematic mode will undoubtedly have to evolve if Apple wants it to become a real hit. Today, our tests show that it does brilliantly with people (the Portrait mode experience paying off?) but much less well with complex shapes (fences, plants, etc.). A professional director using the iPhone will most likely prefer ProRes and manual lens control in a camera app that offers a manual mode, or even one that can view several of an iPhone Pro’s lenses at the same time. Filmic DoubleTake, for example, can do this.

But making this mode available on the iPhone 13 as well reveals Apple’s intention: Cinematic mode is a mainstream tool, mainly for having fun creating in a different way. It may even spark a few vocations, before users move on to software offering more precise control.