Artificial intelligence seems unstoppable. It is increasingly involved in projects of very different kinds across a multitude of sectors, and things have only just begun. This past Wednesday, Nvidia and Microsoft announced a new collaboration to build a cloud-based supercomputer focused on AI.

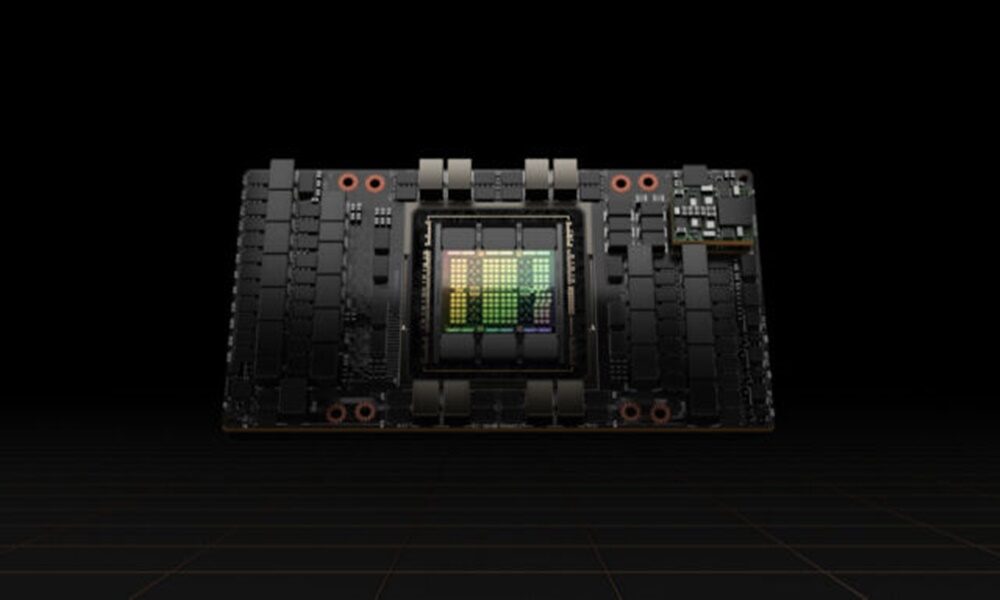

It will run on tens of thousands of high-end Nvidia GPUs. The goal is to make it the most powerful AI supercomputer in the world, or at least the largest ever built. According to Nvidia, its flagship AI chip is up to 4.5 times faster than its predecessor, which is vital to the power of this supercomputer.

It will include thousands of units of what is arguably the world's most powerful GPU, the Hopper H100, which Nvidia released last October. It will also use the A100, Nvidia's second most powerful GPU, along with Nvidia's Quantum-2 InfiniBand networking platform, which can transfer data between servers at 400 gigabits per second, linking them together into a powerful cluster.

And what role will Microsoft play? The tech giant will contribute its Azure cloud infrastructure and its ND- and NC-series virtual machines, while Nvidia's AI Enterprise platform will tie everything together. DeepSpeed, Microsoft's deep learning optimization software, will also play a key role in this AI megaproject: Microsoft will optimize its DeepSpeed library to reduce computing power and memory usage during AI training workloads.

No release date yet

"As part of the collaboration, Nvidia will use Azure's scalable virtual machine instances to further research and accelerate advances in generative AI, a rapidly emerging area of AI in which foundational models like Megatron-Turing NLG 530B are the basis for unsupervised, self-learning algorithms to create new text, code, digital images, video, or audio," Nvidia said in a statement.

In recent years, generative AI models such as DALL-E and Stable Diffusion have reached an unprecedented scale, all of them capable of synthesizing novel images on demand. Since then, similar models have appeared that can even create videos, synthesize voices, and transcribe speech, among many other uses.

Once Nvidia and Microsoft's cloud supercomputer becomes available, customers will be able to deploy thousands of GPUs in a single cluster to train very large language models. Through Microsoft Azure, they will be able to use different scalable virtual machine instances for their projects.

At the moment, both the cost of this project and its launch date are unknown.