AMD has confirmed a new collaboration with Microsoft that is already bearing fruit: the Redmond giant will use AMD Instinct MI200 accelerators to power the deep learning work on its Microsoft Azure platform.

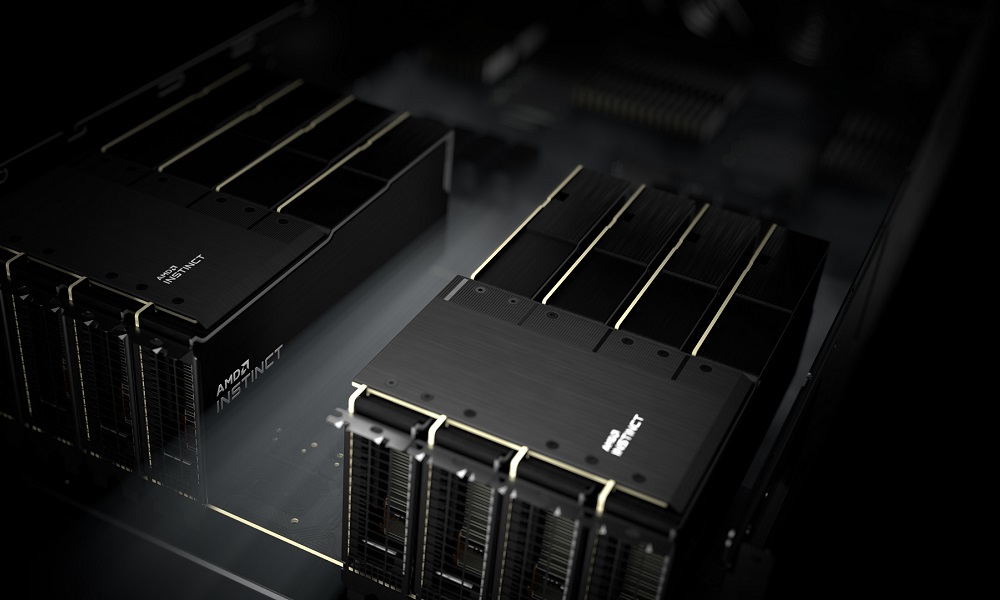

AMD’s Instinct MI200 accelerators use the new CDNA2 architecture and a multi-chip module (MCM) design, which combines two GPU dies into a single “super GPU” with up to 220 compute units (equivalent to 14,080 shaders) and up to 880 Matrix Cores. Put simply, without going into complicated technical detail, Matrix Cores are AMD’s answer to NVIDIA’s Tensor Cores. The most powerful version of the MI200 integrates 128 GB of HBM2E memory.
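The headline figures follow from simple per-die arithmetic. The sketch below reproduces them under stated assumptions not given in the article: two dies of 110 compute units each, 64 shaders (stream processors) and 4 Matrix Cores per compute unit, and 64 GB of HBM2E per die. The constant and function names are hypothetical, chosen only for illustration.

```python
# Assumed per-die and per-CU figures for the top MI200 part (illustrative only)
DIES = 2                   # two GPU dies in one multi-chip module
CUS_PER_DIE = 110          # assumption: 110 compute units per die
SHADERS_PER_CU = 64        # assumption: 64 shaders per compute unit
MATRIX_CORES_PER_CU = 4    # assumption: 4 Matrix Cores per compute unit
HBM_GB_PER_DIE = 64        # assumption: 64 GB of HBM2E attached to each die


def mi200_totals(dies=DIES, cus_per_die=CUS_PER_DIE):
    """Hypothetical helper: scale per-CU and per-die figures up to the whole package."""
    cus = dies * cus_per_die
    return {
        "compute_units": cus,
        "shaders": cus * SHADERS_PER_CU,
        "matrix_cores": cus * MATRIX_CORES_PER_CU,
        "hbm2e_gb": dies * HBM_GB_PER_DIE,
    }


totals = mi200_totals()
for name, value in totals.items():
    print(f"{name}: {value:,}")
# compute_units: 220
# shaders: 14,080
# matrix_cores: 880
# hbm2e_gb: 128
```

Under these assumptions, 220 compute units × 64 shaders gives the quoted 14,080 shaders, and 220 × 4 gives the 880 Matrix Cores.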

Microsoft has also announced that it is working closely with the PyTorch Core team and the AMD Data Center Software team to optimize performance and the developer experience for customers running PyTorch on Microsoft Azure, while ensuring that developers’ PyTorch projects take advantage of the performance and features of next-generation AMD Instinct accelerators.

Brad McCredie, Corporate Vice President of Data Centers and Accelerated Computing at AMD, commented:

“AMD Instinct MI200 accelerators provide customers with cutting-edge performance in AI and HPC and will power many of the world’s fastest supercomputing systems that push the limits of science. Our work with Microsoft to enable large-scale AI training and inference in the cloud highlights not only the extensive technology collaboration between the two companies, but also the performance capabilities of AMD Instinct MI200 accelerators and how they will help customers keep pace with the growing demands of AI workloads.”

For his part, Eric Boyd, Corporate Vice President of Azure AI at Microsoft, said:

“We are proud to continue our long-term commitment to innovation and our partnership with AMD, and to make Azure the first public cloud to deploy AMD Instinct MI200 accelerator clusters for large-scale AI training. We have started testing the MI200 with our own AI workloads and are seeing great performance, and we look forward to continuing our collaboration with AMD to provide customers with more performance, choice and flexibility for their AI needs.”