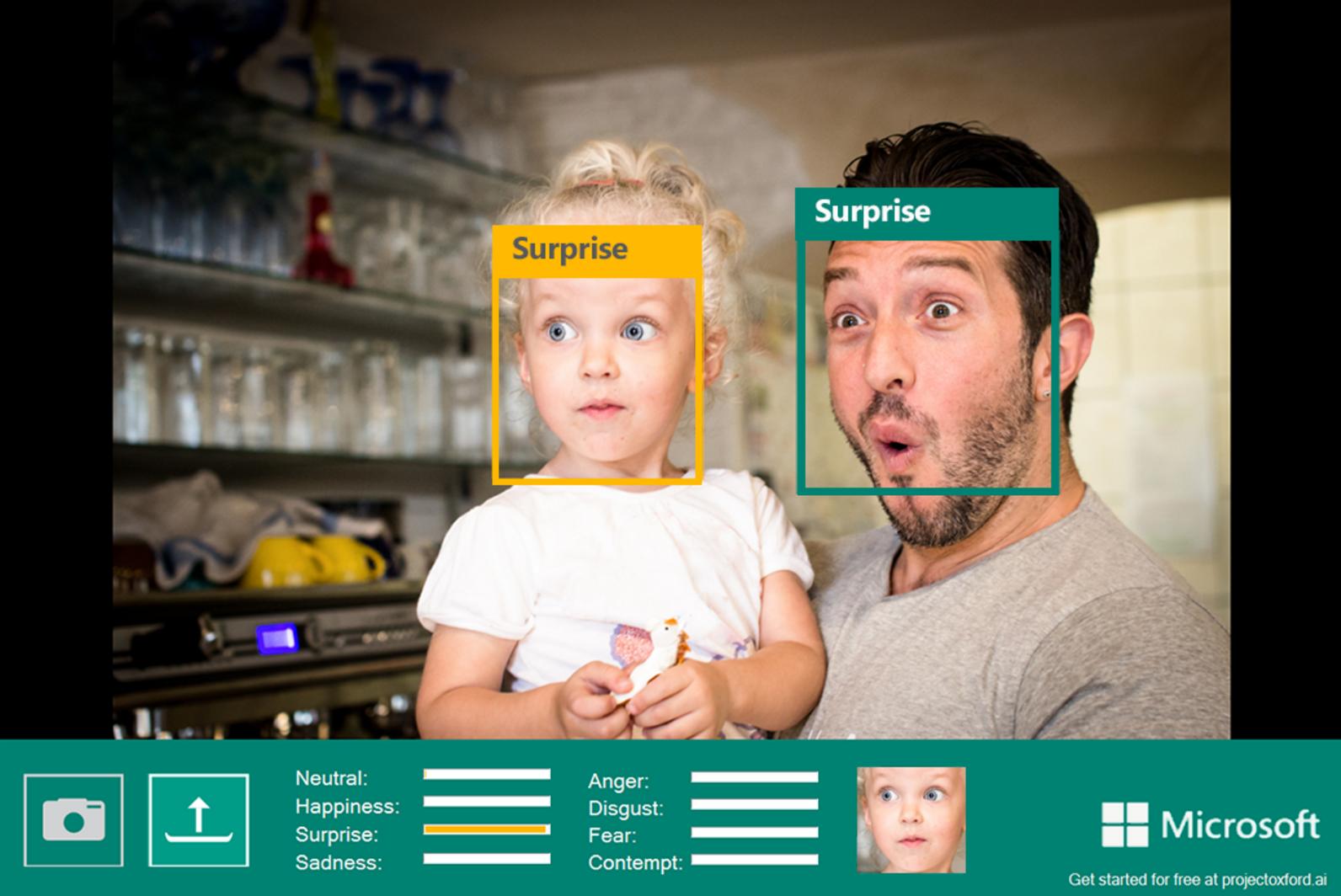

Microsoft currently offers several facial recognition tools based on artificial intelligence (AI). However, the company is gradually withdrawing public access to some of these features in an effort to curb their use.

The most prominent of these, a tool that promises to detect emotions from photos and videos, has been heavily criticized by experts, many of whom argue that external displays of emotion cannot be equated with internal feelings.

Critics also pointed out that, because expressions of emotion differ from population to population, the results could be inconsistent and lead to harmful outcomes.

The decision to phase out these tools is part of a major overhaul of Microsoft's AI ethics policies. The company's standards now emphasize its responsibility to find out who uses its services.

Under the new policy, Microsoft will limit access to some facial recognition features, such as those in Azure Face, and in more extreme cases will remove them completely. Access will remain open for systems that automatically blur faces.

In addition to removing public access to these Azure Face tools, Microsoft will stop the software from detecting various attributes, such as gender, age, smile, beard, hair and makeup.

The phase-out began last Tuesday (the 21st), although customers who already use the functionality will not lose access until June 30, 2023.

Experts inside and outside the company “highlighted the lack of scientific consensus on the definition of ‘emotions’” and “the challenges in how inferences generalize across use cases, regions and demographics,” wrote Natasha Crampton, Microsoft's chief responsible AI officer, in explaining the reasons for the decision.

Beyond emotion recognition, Microsoft will also restrict a feature called Custom Neural Voice, which uses AI to create synthetic voices based on recordings of real people.

The feature has great educational potential, but it can also be used to imitate voices inappropriately and mislead people.

Microsoft internal use

Even after withdrawing public access, Microsoft will continue to use the technology internally where it serves an essential function. The Seeing AI app, which describes the world to people with visual impairments, will keep its access.

Via: The Verge