The software development industry has been going through a period of apparent decline for the last ten years or so. Due to circumstances and trends that I will explain below, the industry seems to have become addicted to easy solutions, which are not necessarily the best in terms of code quality and maintainability.

Many users have noticed in recent times how software has been taking up more and more processor, RAM, graphics and disk resources. However, on many occasions this greater use of resources, which in some cases is even exponential, does not translate into a proportional or justified improvement. This is not limited to video games. It can also be seen by comparing, for example, local audio players, an area in which certain “new inventions”, despite simplifying the interfaces of the past, end up hogging more resources than older apps with more features.

The conclusion that can be drawn is that software has tended to “occupy” more resources to do the same thing, instead of consuming more in order to offer significant improvements to the user. This is not always the case, and in this article I will mention a clear exception, but it is evident that in recent times a good part of the software we use has become worse. It is no longer strange to see applications and video games officially launched in a clearly unfinished state, and sometimes even tons of patches fail to fix the situation.

Very powerful hardware

The context I am covering in this article is complex and has many facets, but there is no doubt that the power hardware has gained over the years is one of the main reasons why software is apparently increasingly neglected.

To understand this point you only have to ask yourself one question: what is old hardware? More than one reader has probably been wondering where to draw the line, and the fact is that a sixth-generation Intel Core i7 is still a very competitive processor that can handle practically everything in a home environment, even running heavy video games with ease if it is paired with a suitable graphics card.

Taking it down a notch and making some sacrifices, even an old Intel Core 2 Quad can be more useful than its age suggests, and not just for office work and other basic tasks, but also for things like programming on projects that are not especially large, to mention one example.

Another factor is a PC market that, in general terms, was somewhat weak during the second decade of the 21st century. The fact that many people decided to replace their PC with a mobile phone stands out here, but that does not change the fact that x86 PCs themselves have been very powerful for a long time.

What’s more, even a fourth-generation Intel Core i7, paired with a recent low-end graphics card, is capable of running a behemoth like Cyberpunk 2077 with reasonably satisfactory results, even if not at high graphics settings. In any case, for anyone who is not too demanding, a recent video game can look good even with the graphics settings at medium.

Applications hog more resources without bringing great things to users

Applications hog more and more resources (processor, RAM, graphics and even disk space), and sometimes we get an unpleasant surprise, as is the case with Atomic Heart, which has gone from requiring 22 gigabytes of disk space to 90.

The fact that software tends to take up more resources is normal, we could even say natural. But in the last decade one aspect has irritated many people: the feeling that software has greatly increased its hardware requirements without providing an experience or an improvement that justifies the increase.

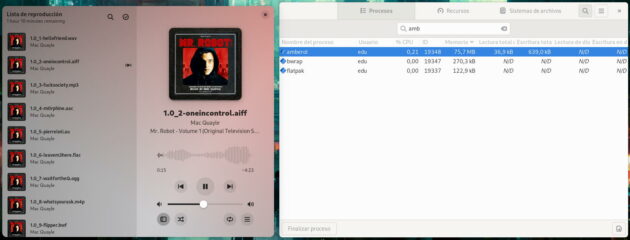

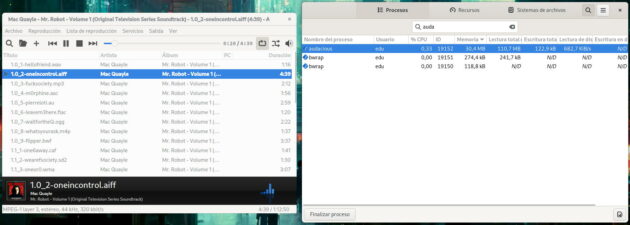

It is not necessary to turn to video games to see an example of increased resource consumption that brings little in return, since this can also be seen by comparing other types of applications, such as local audio players. Here we have Audacious, a veteran of the segment currently built with C++ and Qt, and Amberol, which is built with Rust and GTK. Even though Amberol is a simpler application than Audacious, it ends up consuming more memory.
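For readers who want to repeat this kind of comparison on Linux, here is a minimal sketch in Python. It assumes the kernel exposes the usual `VmRSS` field in `/proc/<pid>/status`, and the function names are hypothetical. Resident memory is only one of several ways to measure consumption, so the figures should be taken as a rough guide.

```python
import re


def parse_vm_rss_kib(status_text: str):
    """Extract the VmRSS value (in KiB) from the text of /proc/<pid>/status.

    Returns None when the field is missing (for example, kernel threads).
    """
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB$", status_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def resident_memory_kib(pid: int):
    """Read the resident memory of a running process (Linux only)."""
    with open(f"/proc/{pid}/status", encoding="utf-8") as status_file:
        return parse_vm_rss_kib(status_file.read())
```

Calling `resident_memory_kib` with the PID of each player while both play the same track gives two figures that can be compared directly.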

Another factor that may have contributed to the increase in hardware resources needed to run software is the overuse of high-level technologies. Languages like Python and JavaScript have gained a lot of ground over the last decade and are used for many things, including server-side technologies and graphical applications that are installed locally.

At this point, we cannot forget about the web apps built with the Electron framework, which have a legion of detractors, mainly among Linux users. An Electron application is basically a self-contained website running inside its own browser, which adds quite a bit to the resources required to run it properly. Added to this is the traditionally poor performance of web apps.

Little work on polishing and optimizing software, and slow retraining

Because the PC video game market works primarily through digital distribution, many developers have been encouraged to release games in an unfinished state, although on more than one occasion it has been pressure from investors and other parties unrelated to development that has forced the premature release.

Another point that must be taken into account is that, far from what is perceived from the outside, the software development sector is actually quite conservative and averse to change. This is evident in the video game industry, where you can see that an outdated API like DirectX 11 still holds its own against newer ones like DirectX 12 and Vulkan.

One of the reasons why DirectX 11 is more relevant than Vulkan and DirectX 12 is that the latter two require learning, while the old API is already widely known. Basically, DirectX 11 works, so why change it? This mentality also affects professional software such as Blender, where development of Vulkan support stalled in favor of an OpenGL that, after all, worked and continues to work, although the 3D creation and rendering suite later resumed work on Vulkan.

From the “because it works” point of view, the existence of Vulkan and DirectX 12 seems to make no sense, but these APIs work at a lower level and therefore take better advantage of the hardware. The consequence of sticking with DirectX 11 and OpenGL is an increase in hardware requirements in order to compensate for their limitations. It is true that DirectX 12 is beginning to take hold in the video game industry, but the transition is proving quite slow considering that the API was released in the middle of the last decade.

Because much of the software development industry is slow to update its knowledge, a perverse dynamic has been created in which consumers are encouraged to buy more and more powerful hardware to run software built on obsolete technologies, instead of developers getting their act together and using technologies that take better advantage of the hardware.

Chrome, a justified case of increased resource consumption (at least initially)

With this article I do not want to criticize resource consumption in itself, but rather certain trends that have consolidated around it, especially the fact that the extra consumption brings no great improvements or anything else that justifies it. However, not all cases are negative, and Chrome (or rather Chromium, the base technology, although I’ll focus on Chrome here for simplicity) was at least initially a positive case.

Chrome appeared fourteen or fifteen years ago like a hurricane, driven by the powerful machinery of Google. The browser, which is essentially Chromium with some Google additions and closed source code (it is proprietary), stood out for its use of multithreading, which translated into a notable increase in the hardware resources it consumed compared to its competitors.

Using multithreading enabled Chrome to take better advantage of the multicore processors that were already common at that time. As a result, Google’s browser clearly consumed more resources than Firefox, but in exchange it offered much better performance and responsiveness, things that were even more noticeable on a quad-core processor, and not necessarily a recent one. What’s more, in 2016, before the arrival of Quantum, Chromium-based browsers performed much better than Firefox even on an old Intel Core 2 Quad.
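The trade-off described here, more resources in exchange for work being spread across several workers, can be sketched in Python as follows. This is only an illustration under stated assumptions: `render_tab` and `render_all` are hypothetical names standing in for per-tab work, not Chrome's actual code. Also, in the standard CPython build threads do not execute CPU-bound Python code in parallel, so the sketch shows how the work is divided rather than a real speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def render_tab(tab_id: int) -> str:
    """Hypothetical stand-in for the work a browser does for one tab."""
    checksum = sum(i * i for i in range(10_000)) % 97
    return f"tab {tab_id} rendered ({checksum})"


def render_all(tab_ids, workers=None):
    """Spread the tabs over a pool of worker threads.

    Each worker has its own stack and in-flight state, which is where
    the extra memory comes from. Results keep the order of tab_ids.
    """
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_tab, tab_ids))
```

With a single worker the tabs are processed one after another. Raising `workers` keeps more work in flight at once, at the cost of more memory held at the same time.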

Although Chrome was harshly criticized for the large amount of resources it consumed and continues to consume, the reality is that most users felt the trade-off was worth it. Mozilla had no choice but to get its act together and implement multithreading in Firefox to try to stem the loss of users, something it has not managed to do, judging by how the web browser market has evolved since the arrival of Quantum.

Conclusion

The trends in the software development industry over the last decade have ended up artificially increasing the hardware resources needed to use software, sometimes just for it to work properly, other times because it takes up too much disk space, which on devices like the Steam Deck can force the use of a microSD card as additional storage.

However, not everything is pessimistic. Rust, a programming language that is making a lot of noise, has appeared in recent years and has been claiming an important place in software development. Rust was born within Mozilla and is now developed independently. Its enthusiasts are pushing hard to introduce it into the Linux kernel, and it is focused on type safety, performance and concurrency.

The point here is not so much that Rust spreads for its own sake, but rather that it serves as a reference for recovering certain ways of working that are being lost in favor of choosing the easiest path and delegating everything to the capabilities of the hardware.

Cover image: Pixabay