ChatGPT has become a true phenomenon and has generated enormous interest among the giants of the technology sector. Microsoft has undoubtedly shown the most interest, but we must be clear that this is only the tip of the iceberg, and that the adoption of increasingly advanced AI models will only grow in the coming years.

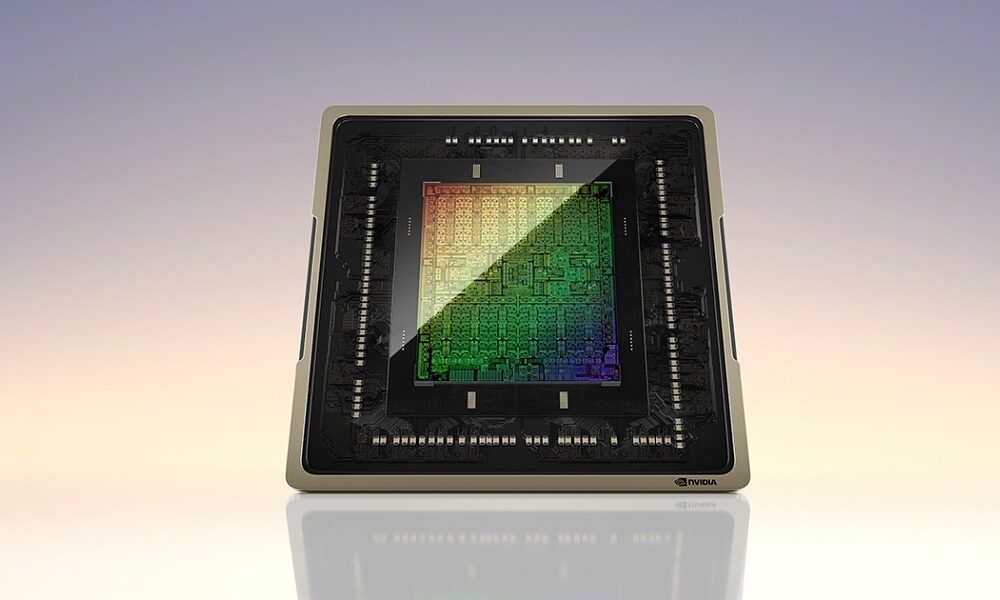

This reality leads us to a very important issue: the hardware on which these advanced artificial intelligence models run. Today, most of them rely on dedicated NVIDIA hardware, specifically on GPUs from that manufacturer. In fact, ChatGPT was trained on a total of 10,000 NVIDIA graphics accelerators. I haven’t been able to find out which exact models were used, but my guess is that they were NVIDIA's data center accelerators (the line formerly branded Tesla) based on the Ampere architecture, which comes with 3rd generation Tensor cores specialized in AI, inference and deep learning.

What I just said may end up becoming a major problem, because it means that the world of artificial intelligence relies heavily on NVIDIA GPUs, due not only to their performance but also to their software support. CUDA continues to play a key role in many programming models today because of its ease of use and its parallelization capabilities, and many deep learning libraries and frameworks, such as TensorFlow and PyTorch, are not only CUDA-compatible but also optimized for NVIDIA GPUs.

With Google and Microsoft betting on ChatGPT-style AI, and with artificial intelligence emerging and establishing itself in more and more sectors, it is clear that demand for NVIDIA graphics accelerators (GPUs) will only grow in the coming years, and this could end up triggering a crisis in the GPU industry if demand ends up vastly outstripping supply.

Some may believe that we are exaggerating, but there are estimates that reinforce this idea. For example, it is estimated that Google would need a total of 512,820 NVIDIA A100 HGX servers to fully integrate ChatGPT into its well-known search engine. In total, that equates to more than 4.102 million A100 GPUs, and would cost Google more than $100 billion.
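To see how those figures fit together, here is a minimal sketch of the arithmetic. It assumes 8 A100 GPUs per HGX server, a value the estimate does not state but which is consistent with the GPU total quoted above, and it treats $100 billion as a lower bound on the total cost. The function and constant names are illustrative only.

```python
# Figures quoted in the estimate above.
SERVERS_NEEDED = 512_820
ESTIMATED_TOTAL_COST_USD = 100_000_000_000  # "more than $100 billion", used as a lower bound

# Assumption (not stated in the article): 8 A100 GPUs per HGX server.
GPUS_PER_SERVER = 8


def total_gpus(servers: int, gpus_per_server: int) -> int:
    """Total number of GPUs across all servers."""
    return servers * gpus_per_server


def implied_cost_per_server(total_cost_usd: float, servers: int) -> float:
    """Average cost per server implied by the total estimate."""
    return total_cost_usd / servers


if __name__ == "__main__":
    gpus = total_gpus(SERVERS_NEEDED, GPUS_PER_SERVER)
    per_server = implied_cost_per_server(ESTIMATED_TOTAL_COST_USD, SERVERS_NEEDED)
    print(f"Total GPUs: {gpus:,}")
    print(f"Implied cost per server: ${per_server:,.0f}")
```

Under that assumption the total comes to 4,102,560 GPUs, and the $100 billion figure implies roughly $195,000 per server, which presumably covers more than the GPUs themselves.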

And that is just one giant, so think about how demand for GPUs could skyrocket if there is mass adoption of AI and of solutions like ChatGPT. Perhaps the arrival of future graphics architectures will reduce the total number of GPUs needed, but in the end it seems clear that demand for this type of solution will keep growing.