CPUs and APUs for PCs built from heterogeneous cores, that is, cores of different complexity and size, are already a reality. But how do these heterogeneous cores differ in nature and performance? Many people ask themselves this when they read about the different architectures appearing on the market. And why, after more than a decade of using a single type of core, are CPUs now making the leap to big and little cores?

Why use different types of cores?

There are several reasons. The best known is the one behind the now-classic big.LITTLE design of smartphone CPUs, where two clusters of cores with different performance and power consumption are switched in and out depending on the workload on the smartphone at any given moment. The goal was to extend the battery life of these devices.
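The switched mode of the original big.LITTLE can be pictured as a small policy that watches utilisation and moves the work to one cluster or the other. The sketch below is a toy model, not the actual kernel logic: the function name `cluster_switch` and the `up`/`down` thresholds are hypothetical, and real governors take many more inputs into account.

```python
def cluster_switch(loads, up=0.8, down=0.3, start="LITTLE"):
    """Toy big.LITTLE cluster-switching policy with hysteresis.

    loads: sequence of utilisation samples in [0.0, 1.0].
    up/down: hypothetical thresholds, chosen only for illustration.
    Returns the active cluster after each sample (only one is active at a time).
    """
    active = start
    history = []
    for load in loads:
        if active == "LITTLE" and load > up:
            active = "big"      # demand is high: migrate to the fast cluster
        elif active == "big" and load < down:
            active = "LITTLE"   # demand has dropped: save battery
        history.append(active)
    return history


print(cluster_switch([0.1, 0.5, 0.9, 0.6, 0.4, 0.2, 0.85]))
# ['LITTLE', 'LITTLE', 'big', 'big', 'big', 'LITTLE', 'big']
```

Using two thresholds instead of one keeps the system from bouncing between clusters when the load hovers around a single cutoff.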

Today the concept has evolved, and both types of cores can be used simultaneously instead of switching between them. The combined design is therefore no longer about saving energy but about achieving the highest possible performance. This is where two different ways of understanding performance come in, depending on how the heterogeneous cores are used.

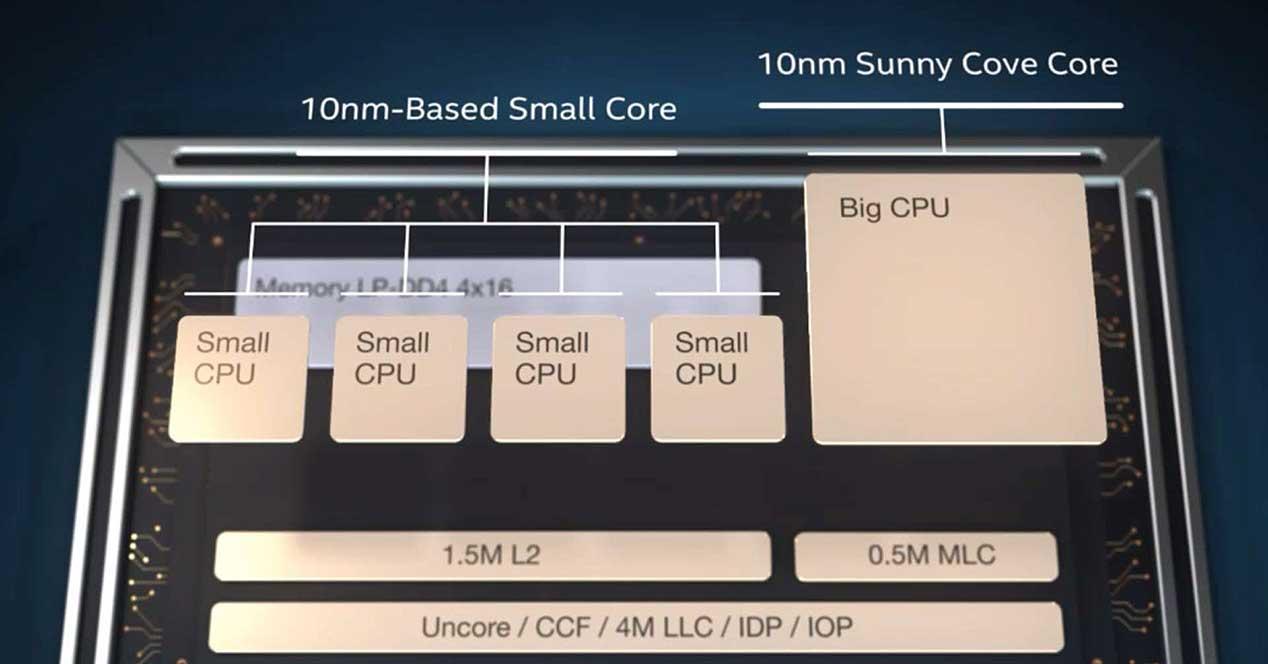

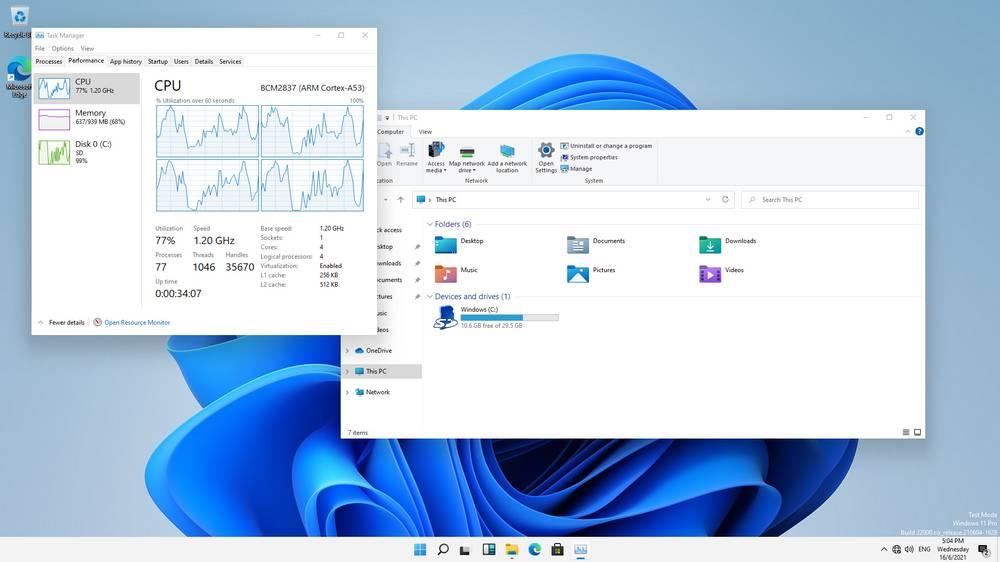

The most widely used approach, because it is the easiest to implement, assigns the threads with the lightest workload to the least powerful cores. This task falls to the operating system, the piece of software in charge of managing hardware resources, including the CPU. This is how Intel's Lakefield works, as will future Intel architectures such as Alder Lake, and it is also how ARM cores with DynamiQ operate.
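A minimal sketch of this OS-side policy is shown below. It assumes each thread comes with a single demand estimate between 0 and 1; the classes `Thread` and `Core`, the function `schedule` and the `heavy_threshold` parameter are all hypothetical, and real operating system schedulers are far more sophisticated.

```python
from dataclasses import dataclass, field


@dataclass
class Thread:
    name: str
    load: float  # hypothetical demand estimate in [0.0, 1.0]


@dataclass
class Core:
    name: str
    kind: str  # "big" or "little"
    queue: list = field(default_factory=list)

    def load(self) -> float:
        return sum(t.load for t in self.queue)


def schedule(threads, cores, heavy_threshold=0.5):
    """Send heavy threads to big cores and light threads to little cores.

    Within each class, a thread goes to the currently least-loaded core.
    """
    big = [c for c in cores if c.kind == "big"]
    little = [c for c in cores if c.kind == "little"]
    for t in sorted(threads, key=lambda t: t.load, reverse=True):
        pool = big if t.load >= heavy_threshold else little
        pool = pool or big or little  # fall back if one class is absent
        target = min(pool, key=lambda c: c.load())
        target.queue.append(t)
    return cores


cores = [Core("P0", "big"), Core("E0", "little"), Core("E1", "little")]
threads = [Thread("game", 0.9), Thread("browser", 0.6),
           Thread("chat", 0.1), Thread("updater", 0.05)]
for core in schedule(threads, cores):
    print(core.name, [t.name for t in core.queue])
# P0 ['game', 'browser']
# E0 ['chat']
# E1 ['updater']
```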

In all these cases, the organization relies on two types of cores that share the same register set and instruction set but differ in their specifications. So how do these heterogeneous cores differ? Let's see.

Big cores vs. small cores today

Let's start with the obvious: the first difference between the two types of cores is size. Big cores have a more complex structure than little cores and are therefore made up of a greater number of transistors, which makes them larger than little cores, whose structure is much simpler. As a result, more little cores than big cores fit in the same chip area.
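The area trade-off can be made concrete with some simple arithmetic. The sketch assumes, purely for illustration, that a big core occupies four times the area of a little one; the function name `possible_layouts` and all area values are hypothetical, in arbitrary units.

```python
def possible_layouts(area_budget, big_area, little_area):
    """List every (big, little) core mix that fits in a fixed area budget.

    Areas are in arbitrary integer units; the values used below are
    hypothetical and only illustrate the trade-off.
    """
    layouts = []
    for n_big in range(area_budget // big_area + 1):
        remaining = area_budget - n_big * big_area
        layouts.append((n_big, remaining // little_area))
    return layouts


# Assume a big core takes 4x the area of a little core.
print(possible_layouts(area_budget=24, big_area=8, little_area=2))
# [(0, 12), (1, 8), (2, 4), (3, 0)]
```

Every big core given up frees room for several little ones.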

The first question that comes to mind is: what performance advantage does combining the two types of cores bring? Keep in mind that today's PCs run several applications at the same time, each of them executing several threads. Adding more cores, even less powerful ones, therefore increases total performance.
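A rough model shows why more, weaker cores can win when there are many threads. It assumes each runnable thread fully occupies one core with no time-slicing, that a big core is twice as fast as a little one, and that "3 big" and "2 big + 4 little" occupy the same die area (true if a big core is four times the size of a little one). The function `throughput` and its relative speeds are hypothetical.

```python
def throughput(n_big, n_little, runnable_threads, big_perf=2.0, little_perf=1.0):
    """Aggregate throughput when each runnable thread occupies one core.

    Threads are placed on the fastest free cores first; extra threads wait.
    big_perf/little_perf are hypothetical relative speeds.
    """
    speeds = sorted([big_perf] * n_big + [little_perf] * n_little, reverse=True)
    return sum(speeds[:runnable_threads])


for threads in (2, 6):
    print(threads, "threads:",
          "3 big ->", throughput(3, 0, threads),
          "| 2 big + 4 little ->", throughput(2, 4, threads))
# 2 threads: 3 big -> 4.0 | 2 big + 4 little -> 4.0
# 6 threads: 3 big -> 6.0 | 2 big + 4 little -> 8.0
```

With few threads the two designs tie, because the big cores do all the work; with many threads the extra little cores add throughput that the all-big design leaves on the table.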

In reality, the smaller cores are simply one more way to lighten the load on the larger, more complex cores by taking work off their hands. Additional cores can even be used to handle the most common interrupts from the various peripherals, so that the rest of the cores do not have to keep stopping what they are doing to attend to them.
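On Linux, one way to steer an interrupt toward particular cores is to write a hexadecimal CPU bitmask to `/proc/irq/<N>/smp_affinity` (as root), grouped in comma-separated 32-bit words on larger systems. The sketch below only builds the mask string; the helper name `smp_affinity_mask` is hypothetical, and the assumption that CPUs 6 and 7 are little cores is made up for the example.

```python
def smp_affinity_mask(cpus, nr_cpus):
    """Build a CPU bitmask in comma-separated hex, one 32-bit word per group,
    as used by /proc/irq/<N>/smp_affinity."""
    mask = 0
    for cpu in cpus:
        if not 0 <= cpu < nr_cpus:
            raise ValueError(f"CPU {cpu} out of range")
        mask |= 1 << cpu  # set the bit for this CPU
    n_words = (nr_cpus + 31) // 32
    # Most significant 32-bit word first, as the kernel prints it.
    words = [(mask >> (32 * i)) & 0xFFFFFFFF for i in reversed(range(n_words))]
    return ",".join(f"{w:08x}" for w in words)


# Hypothetical topology: 8 logical CPUs, where CPUs 6 and 7 are little cores.
print(smp_affinity_mask([6, 7], nr_cpus=8))
# 000000c0
```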

The architectures of the future rely on heterogeneous configurations

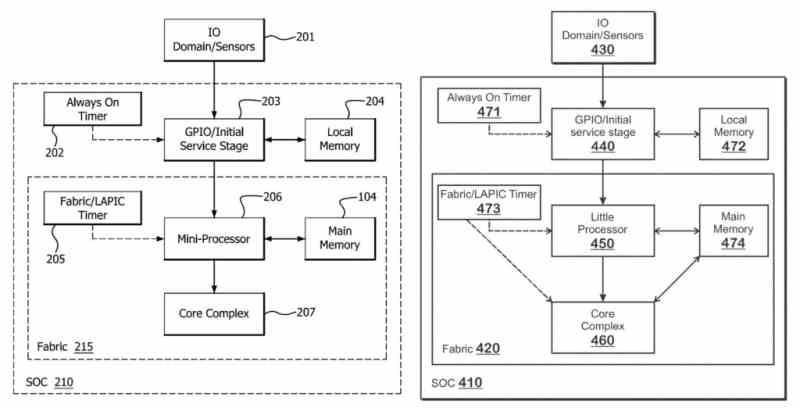

The other way of implementing big and little cores is more complex and differs from the previous one: it consists of dividing the registers and instructions of the ISA between two classes of cores. The reason is that not all instructions consume the same amount of energy, and the simplest instructions always consume more when they run on the more complex cores. So the idea is not to send each thread to its corresponding core, but to share the execution of a single thread between two or more cores in an interleaved way.
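The following toy model illustrates the idea of interleaving one thread across two cores by instruction class. Everything in it is an assumption made for illustration: the opcode split (`SIMPLE_OPS`, `COMPLEX_OPS`), the per-instruction energy costs, and the helper names. It also ignores the cost of handing execution from one core to the other, which is exactly what the coordination hardware described next would have to manage.

```python
# Hypothetical split of a toy ISA between the two core classes.
SIMPLE_OPS = {"mov", "add", "sub", "and", "or"}   # executed on the little core
COMPLEX_OPS = {"mul", "div", "sqrt"}              # executed on the big core

# Hypothetical energy per instruction, in arbitrary units. A simple op
# costs more on the big core, as the text argues.
ENERGY = {("little", "simple"): 1.0,
          ("big", "simple"): 3.0,
          ("big", "complex"): 6.0}


def op_class(op):
    if op in SIMPLE_OPS:
        return "simple"
    if op in COMPLEX_OPS:
        return "complex"
    raise ValueError(f"unknown opcode: {op}")


def run_split(program):
    """Interleave one thread's instructions across a little and a big core.

    Returns the per-instruction core trace, total energy, and the number
    of handoffs between cores (whose cost this toy model ignores).
    """
    trace, energy, handoffs = [], 0.0, 0
    for op in program:
        cls = op_class(op)
        core = "little" if cls == "simple" else "big"
        if trace and trace[-1] != core:
            handoffs += 1
        trace.append(core)
        energy += ENERGY[(core, cls)]
    return trace, energy, handoffs


def run_big_only(program):
    """Same thread executed entirely on the big core."""
    return sum(ENERGY[("big", op_class(op))] for op in program)


program = ["mov", "add", "mul", "add", "sqrt", "mov"]
trace, energy, handoffs = run_split(program)
print(trace)  # ['little', 'little', 'big', 'little', 'big', 'little']
print(energy, handoffs, run_big_only(program))  # 16.0 4 24.0
```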

Its implementation is therefore much more complex than the current model, since the different cores in charge of the same thread need the hardware required to coordinate with each other while executing the program's code. The advantage of this paradigm is that, in principle, it does not need the operating system to manage the distribution of the threads the CPU has to execute. In this case, however, as mentioned above, the split between the heterogeneous core types depends on how the instruction set is distributed between them.

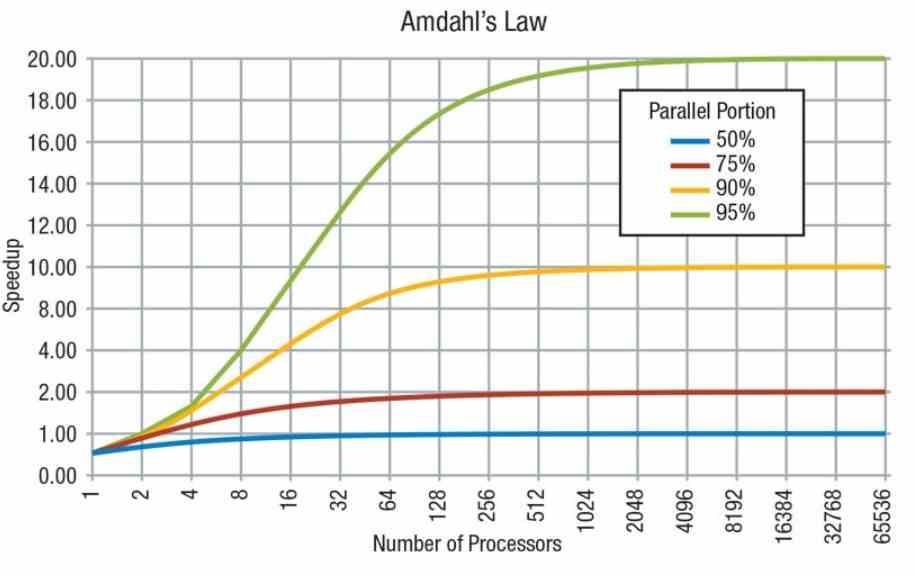

This method is related to the so-called Amdahl's Law and to the way programs scale in performance. On the one hand, programs have sequential parts that cannot be distributed among several cores because they cannot run in parallel; on the other, they have parts that can. In the first case, performance depends not on the number of cores but on the power of each core, while in the second it depends on the number of cores.
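Amdahl's Law gives the speedup of a program whose fraction `p` is parallel as 1 / ((1 - p) + p / n) on `n` identical cores. To show how this favours mixing core sizes, the sketch also implements a model the article does not cite: Hill and Marty's "Amdahl's Law in the Multicore Era", which measures area in base core equivalents (BCEs) and assumes a core built from `r` BCEs performs like sqrt(r). The function names are ours; the sqrt performance rule is that model's assumption, not a measured fact.

```python
import math


def amdahl(p, n):
    """Classic Amdahl speedup: fraction p is parallel, n identical cores."""
    return 1.0 / ((1.0 - p) + p / n)


def hill_marty_symmetric(f, n, r, perf=math.sqrt):
    """n/r identical cores, each built from r base core equivalents (BCEs)."""
    return 1.0 / ((1.0 - f) / perf(r) + f * r / (perf(r) * n))


def hill_marty_asymmetric(f, n, r, perf=math.sqrt):
    """One big core of r BCEs plus n - r little cores of 1 BCE each.

    The sequential part runs on the big core alone; the parallel part
    uses the big core and all the little cores together.
    """
    return 1.0 / ((1.0 - f) / perf(r) + f / (perf(r) + n - r))


print(round(amdahl(0.9, 8), 2))                     # 4.71
print(round(hill_marty_symmetric(0.9, 16, 1), 2))   # 6.4  (16 little cores)
print(round(hill_marty_symmetric(0.9, 16, 4), 2))   # 6.15 (4 big cores)
print(round(hill_marty_asymmetric(0.9, 16, 4), 2))  # 8.75 (1 big + 12 little)
```

Under these assumptions, the mixed design beats both uniform designs of the same total area: the big core speeds up the sequential part, while the many little cores carry the parallel part.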

Traditionally, the most complex instructions in a CPU are implemented as a succession of simpler instructions in order to make much better use of the hardware. New manufacturing nodes, however, will allow more complex instructions to be wired directly into the more complex cores instead of being composed of multiple simpler instructions. This will also increase the overall performance of programs, since these instructions will take far fewer clock cycles to execute.
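Multiplication is a classic example of a complex operation that can be built from simpler ones. The sketch implements the sequential shift-and-add algorithm and counts its steps; it assumes one step per clock cycle, and `HARDWIRED_MUL_LATENCY` is a hypothetical figure for a dedicated multiplier, not the latency of any specific CPU.

```python
HARDWIRED_MUL_LATENCY = 3  # hypothetical cycle count for a dedicated multiplier


def shift_add_multiply(a, b, width=32):
    """Multiply two unsigned width-bit integers with shifts and adds only.

    This is the classic sequential algorithm: one step per multiplier bit,
    so a width-bit multiply always takes `width` steps.
    """
    mask = (1 << width) - 1
    a, b = a & mask, b & mask
    product, steps = 0, 0
    for _ in range(width):
        if b & 1:      # current multiplier bit is set: add shifted multiplicand
            product += a
        a <<= 1        # shift the multiplicand left
        b >>= 1        # move on to the next multiplier bit
        steps += 1
    return product, steps


product, steps = shift_add_multiply(1234, 5678)
print(product, steps, HARDWIRED_MUL_LATENCY)  # 7006652 32 3
```

Under these assumptions, the composed version needs 32 steps where the hardwired unit needs only a few cycles, which is the kind of gain the paragraph describes.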