Intel’s upcoming Xeon “Sapphire Rapids” processors feature a memory interface topology that closely resembles that of the first-generation AMD EPYC “Naples”: a modular design with multiple dies in a single processor package. In 2017, the Xeon “Skylake-SP” processors were built on a monolithic die, but that era is long gone, and multi-tile chips now look like the future of server processors.

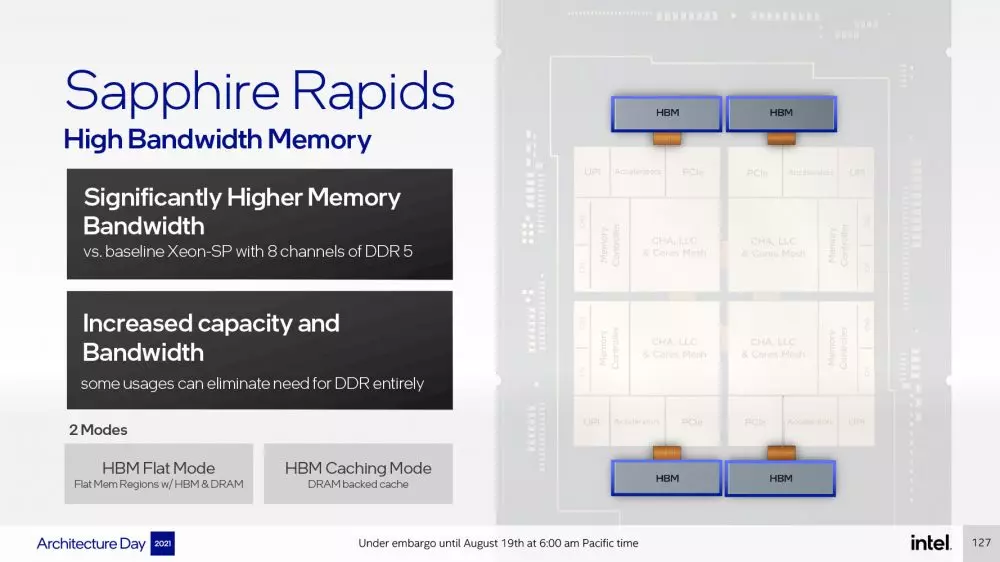

Intel Sapphire Rapids memory topology, with HBM + DDR5

At the time of Skylake-SP, Intel touted its 6-channel DDR4 memory interface, which sat on one monolithic die even though it was spread across multiple memory controller tiles, as an advantage over EPYC “Naples”. AMD’s first Zen-based enterprise processor was a multi-chip module of four 14 nm, 8-core “Zeppelin” dies, each with a 2-channel DDR4 memory interface, adding up to the processor’s 8-channel memory I/O. Much like Sapphire Rapids, a CPU core on any of the four dies had access to memory and I/O controlled by any other die, as all four were networked over Infinity Fabric in a configuration that essentially looked like “4P on a stick.”

With Sapphire Rapids, Intel is taking a suspiciously similar approach: it has four compute tiles (dies) instead of a monolithic die, which Intel says helps with scalability in both directions. Each of the four compute tiles has a 2-channel DDR5 or 1024-bit HBM memory interface, which adds up to the processor’s total of eight DDR5 channels.
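The channel arithmetic above (four tiles, two DDR5 channels each) can be sketched in a few lines. This is only an illustrative calculation: the `ComputeTile` class and helper names are made up for this example, and the DDR5-4800 transfer rate and 64-bit channel width are assumptions, since the article does not state a memory speed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeTile:
    """Hypothetical model of one compute tile (name invented for this sketch)."""
    ddr5_channels: int = 2  # per-tile DDR5 channels, as described in the article


def total_channels(tiles):
    """Sum the DDR5 channels contributed by every tile."""
    return sum(tile.ddr5_channels for tile in tiles)


def peak_bandwidth_gbs(channels, transfer_rate_mts, bus_width_bits=64):
    """Theoretical peak bandwidth in GB/s.

    channels * (mega-transfers per second) * (bytes per transfer).
    ASSUMPTION: 64 data bits per channel; ECC bits are ignored.
    """
    return channels * transfer_rate_mts * 1e6 * (bus_width_bits // 8) / 1e9


tiles = [ComputeTile() for _ in range(4)]
channels = total_channels(tiles)

# ASSUMPTION: DDR5-4800; the article does not give a memory speed.
print(f"{channels} channels, {peak_bandwidth_gbs(channels, 4800):.1f} GB/s peak")
```

The point of the sketch is only that the socket-level channel count is the sum of the per-tile interfaces; real sustained bandwidth will be lower than the theoretical peak.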

Intel says that the CPU cores on each tile have equal access to memory, last-level cache, and I/O controlled by any die. Communication between tiles is handled by EMIB physical media (wiring with a 55-micron bump pitch). UPI 2.0 provides the interconnect between sockets, and each of the four compute tiles has 24 UPI 2.0 links operating at 16 GT/s.

Intel hasn’t detailed how memory is presented to the operating system, or what the NUMA hierarchy looks like. Even so, much of Intel’s engineering effort seems to be focused on making this disjointed memory I/O work as if “Sapphire Rapids” were a monolithic die. The company claims consistently low latency and high cross-sectional bandwidth across the SoC.
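Since the NUMA layout has not been disclosed, the only way to know how a given system presents its memory is to inspect it. As a minimal sketch, assuming a Linux system that exposes the standard sysfs node directory, the following lists each NUMA node and the CPUs attached to it. The function name `list_numa_nodes` is invented for this example, and nothing here is specific to Sapphire Rapids.

```python
import re
from pathlib import Path


def list_numa_nodes(sysfs_root="/sys/devices/system/node"):
    """Return {node_id: cpulist string} for every NUMA node found under sysfs.

    Returns an empty dict when the directory does not exist (for example on
    a non-Linux system).
    """
    root = Path(sysfs_root)
    nodes = {}
    if not root.is_dir():
        return nodes
    for entry in sorted(root.iterdir()):
        match = re.fullmatch(r"node(\d+)", entry.name)
        cpulist = entry / "cpulist"
        if match and cpulist.is_file():
            nodes[int(match.group(1))] = cpulist.read_text().strip()
    return nodes


if __name__ == "__main__":
    for node_id, cpus in sorted(list_numa_nodes().items()):
        # A node with memory but no CPUs reports an empty cpulist.
        print(f"node{node_id}: CPUs {cpus or '(none, memory-only node)'}")
```

On a machine that behaves like a monolithic die, this would typically report a single node per socket; more nodes per socket would indicate that the tiles, or separate memory pools, are exposed to the operating system.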

Another exciting aspect of the Xeon “Sapphire Rapids” processors is their support for HBM, which could be a game changer for the processor in the HPC and high-density compute markets. Specific Xeon “Sapphire Rapids” models could therefore come with HBM on the processor package.

This memory can be used as a victim cache for the on-die caches of the compute tiles, greatly improving the memory subsystem; it can work exclusively as standalone main memory; or it can work as non-tiered main memory alongside the DDR5 DRAM, with flat memory regions. Intel refers to these as the software-visible HBM + DDR5 and software-transparent HBM + DDR5 modes.
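In the cache mode described above, HBM holds lines that have been evicted from the on-die caches, an arrangement commonly called a victim cache. The toy model below illustrates the general idea only: a small LRU cache stands in for the on-die cache, a larger one for HBM, and a dictionary for DDR5. All class names, sizes, and the LRU policy are assumptions made for illustration, not a description of Intel’s implementation, and the model is read-only (no dirty-line write-back).

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used line."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()

    def insert(self, addr, data):
        """Insert a line; return the evicted (addr, data) pair, or None."""
        self.lines[addr] = data
        self.lines.move_to_end(addr)
        if len(self.lines) > self.capacity:
            return self.lines.popitem(last=False)
        return None


class VictimCachedMemory:
    """On-die cache backed by a victim cache, backed by DRAM (a dict)."""

    def __init__(self, on_die_lines, victim_lines, dram):
        self.on_die = LRUCache(on_die_lines)
        self.victim = LRUCache(victim_lines)
        self.dram = dram
        self.hits = {"on_die": 0, "victim": 0, "dram": 0}

    def read(self, addr):
        if addr in self.on_die.lines:
            self.on_die.lines.move_to_end(addr)
            self.hits["on_die"] += 1
            return self.on_die.lines[addr]
        if addr in self.victim.lines:
            # Victim hit: the line moves back up into the on-die cache.
            data = self.victim.lines.pop(addr)
            self.hits["victim"] += 1
        else:
            data = self.dram[addr]
            self.hits["dram"] += 1
        evicted = self.on_die.insert(addr, data)
        if evicted is not None:
            # Lines pushed out of the on-die cache land in the victim cache;
            # lines pushed out of the victim cache are simply dropped.
            self.victim.insert(*evicted)
        return data


memory = VictimCachedMemory(2, 4, {a: a * 10 for a in range(8)})
for addr in (0, 1, 2, 0, 0):
    memory.read(addr)
print(memory.hits)  # {'on_die': 1, 'victim': 1, 'dram': 3}
```

In this run, the second read of address 0 is served from the victim cache rather than from DRAM, which is the benefit a victim cache is meant to provide.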