The latest rumors suggest that the next generations of graphics cards will grow in both size and power consumption. That is a problem, and it seems a rather ingenious solution has been found. NVIDIA has reportedly developed PrefixRL, a system that makes it possible to shrink circuits and, as a result, lower the power consumption of graphics cards.

It is rumored that the most powerful models in the NVIDIA RTX 40 Series could consume close to 600 W. That is excessive, especially given that transistor sizes keep shrinking. Manufacturers of graphics cards (and processors) are aware of the problem, and solutions are being sought.

NVIDIA will make its future graphics cards more efficient

Designing the GPU circuitry of a graphics card is not easy at all. The goal is always to improve the design to increase performance while keeping it as small as possible. A smaller circuit is faster and cheaper to manufacture.

To help engineers, NVIDIA has developed an AI model named PrefixRL. This system is presented as an alternative to traditional tools for integrated circuit design.

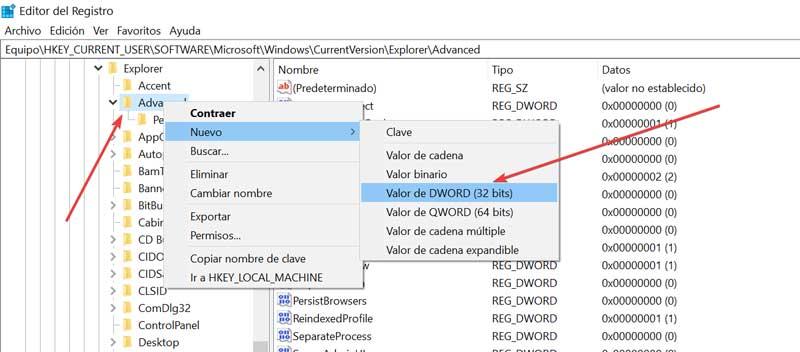

Engineers currently use EDA (electronic design automation) tools offered by vendors such as Cadence, Synopsys, or Siemens, among others. Each vendor uses a different AI system for silicon placement and routing. PrefixRL appears to be a much more powerful solution that allows a higher level of integration.

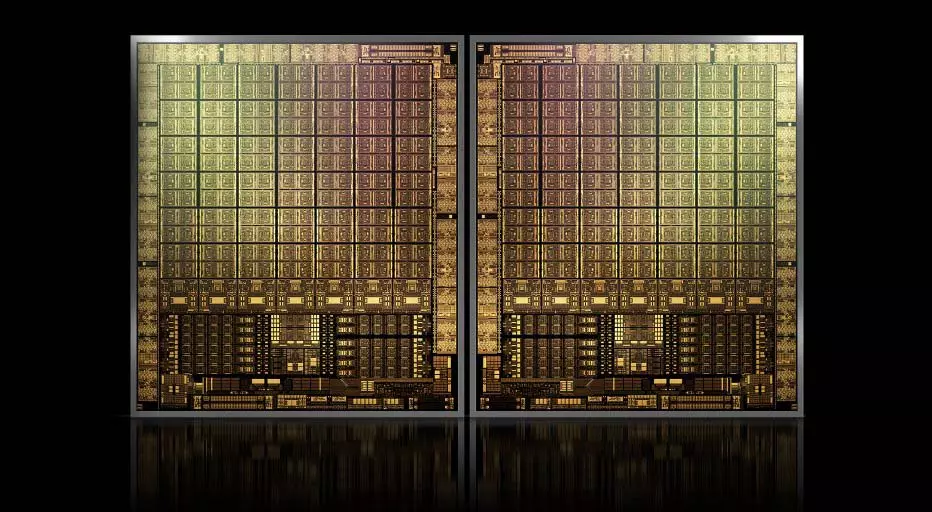

The idea is to get the same functionality as circuits produced by EDA tools, but with a smaller die area. According to NVIDIA, Hopper H100 GPUs use 13,000 instances of arithmetic circuits designed by PrefixRL. A PrefixRL-based design would be 25% smaller than one produced by EDA tools, always with equal or better latency.
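To make the area-versus-latency trade-off concrete, here is a minimal Python sketch of a textbook Kogge-Stone adder, one well-known member of the parallel prefix circuit family that the name PrefixRL alludes to. It is not NVIDIA's design or output. The function name `kogge_stone_add` and the node and level counters are illustrative assumptions, used only as rough proxies for circuit area and latency.

```python
def kogge_stone_add(a: int, b: int, width: int = 64):
    """Add two unsigned integers with a Kogge-Stone prefix network.

    Returns (sum modulo 2**width, prefix-node count, logic levels).
    Node count is a crude stand-in for area, levels for latency.
    """
    mask = (1 << width) - 1
    a &= mask
    b &= mask

    # Per-bit generate (both inputs are 1) and propagate (exactly one is 1).
    g = [(a >> i) & (b >> i) & 1 for i in range(width)]
    p = [((a >> i) ^ (b >> i)) & 1 for i in range(width)]

    G, P = g[:], p[:]
    nodes = 0
    levels = 0
    dist = 1
    while dist < width:
        new_G, new_P = G[:], P[:]
        for i in range(dist, width):
            # Merge this bit's group with the group ending dist bits lower.
            new_G[i] = G[i] | (P[i] & G[i - dist])
            new_P[i] = P[i] & P[i - dist]
            nodes += 1
        G, P = new_G, new_P
        dist *= 2
        levels += 1

    # The carry into bit i is the group generate of bits 0..i-1.
    total = 0
    for i in range(width):
        carry_in = G[i - 1] if i > 0 else 0
        total |= (p[i] ^ carry_in) << i
    return total, nodes, levels


if __name__ == "__main__":
    total, nodes, levels = kogge_stone_add(123456789, 987654321)
    print(total, nodes, levels)
```

For 64 bits this particular network uses 321 prefix nodes arranged in 6 levels, whereas a simple serial carry chain would need only 63 nodes but 63 levels. The design problem is to find structures that do well on both counts.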

The system is quite complex and requires a large amount of hardware. It has been estimated that designing a 64-bit adder circuit requires 256 processor cores and about 32,000 hours. Obviously, the more cores available, the shorter the design time.
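As a rough illustration of why more cores shorten the design time, the Python sketch below applies Amdahl's law to the figures quoted above. It assumes, purely for the sake of the example, that the 32,000 hours are a total compute budget that can be divided among workers. The article does not say how the hours are counted, and `wall_clock_hours` and `parallel_fraction` are hypothetical names.

```python
def wall_clock_hours(total_hours: float, workers: int,
                     parallel_fraction: float = 1.0) -> float:
    """Estimate elapsed time with Amdahl's law.

    total_hours: assumed total compute budget (an assumption, see above).
    workers: number of cores working in parallel.
    parallel_fraction: share of the work that can be parallelized (0 to 1).
    """
    if workers < 1:
        raise ValueError("workers must be at least 1")
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial_part = total_hours * (1.0 - parallel_fraction)
    parallel_part = total_hours * parallel_fraction / workers
    return serial_part + parallel_part


if __name__ == "__main__":
    for cores in (256, 512, 1024):
        # Ideal scaling: every hour of work can be spread across cores.
        print(cores, wall_clock_hours(32_000, cores))
```

Under perfect scaling, doubling the cores halves the elapsed time. Any serial portion of the work (a `parallel_fraction` below 1) puts a floor under how much extra cores can help.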

How will it affect gaming graphics cards?

NVIDIA has not provided any data on this, but it is possible that the technology has already been used for the RTX 40 Series. Rumors point to high power consumption, although there is no evidence of this. A hypothetical RTX 4090 may well consume a lot, while the mid-range and entry-level cards have power consumption similar to current graphics cards.

Presumably, as this circuit design technology improves, the level of integration will improve too. In the long run, it could reduce power consumption and increase performance. It also helps reduce manufacturing times and, therefore, the manufacturing costs of chips for graphics cards.

NVIDIA will possibly wait for specialized events such as Hot Chips to share more information about this technology and how it works. Without a doubt, this is a very interesting solution that could improve the graphics cards of the future.