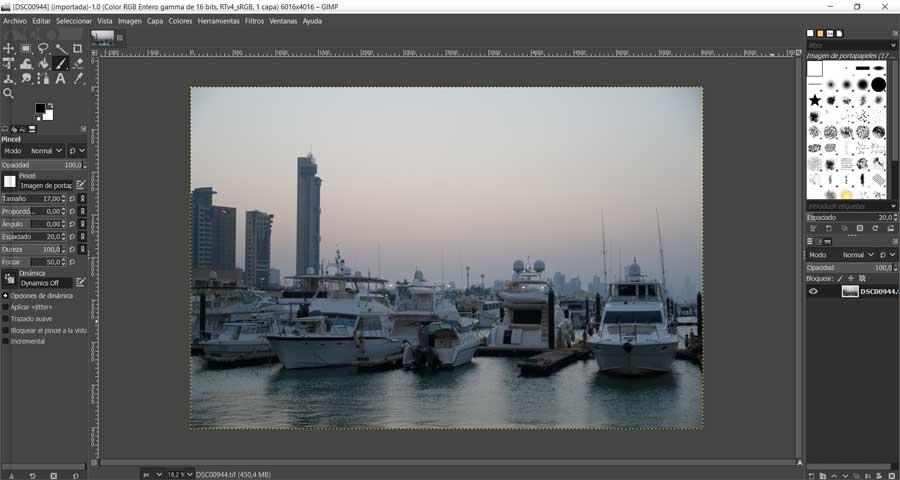

If you’ve ever wondered why PCs no longer come with two or more processors, that is, several processor chips mounted on the same motherboard, you’re in luck, because we’re going to answer exactly that. And if you’ve never asked yourself, think about it now: aren’t two better than one?

The answer depends on the angle you look at it from. The first reason is economic: for most users, a single chip acting as the system’s CPU is enough. A second socket would therefore be an unnecessary cost for motherboard manufacturers, since very few people would use it. That’s why we don’t see dual-socket motherboards for consumers. The technical reasons are somewhat more complex.

Why don’t we see two processors in a PC?

The reality is that we have been using more than two processors for a long time, since every CPU chip contains several cores and each of them is a complete processor in its own right, with its own control unit, execution units and caches. All of this is topped off with a last-level cache shared by the cores, which helps avoid conflicts when they access data in memory, and with common access to RAM, because today the memory controller is located inside the processor itself.

Next, we need to understand that the code of any program a processor runs falls into two categories:

- Sequential code, which cannot be executed in parallel and runs on a single core, so communication between cores does not matter.

- Parallel code, which can be split across two or more cores. Here, the speed of communication between those cores is very important.
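As a minimal illustration of the two categories (our own example, not from the original article), the running total below is sequential because every step needs the previous result, while squaring each element is parallel because no item depends on another. The function names are hypothetical, and `ThreadPoolExecutor` is used only to keep the sketch portable; for CPU-bound work in CPython, `ProcessPoolExecutor` (same `map` interface) is what actually spreads the work over several cores.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import accumulate


def running_total(values):
    # Sequential: each partial sum depends on the one before it,
    # so the steps cannot be spread across cores.
    return list(accumulate(values))


def square(x):
    # Independent per-item work: no result depends on another item.
    return x * x


def parallel_squares(values, workers=2):
    # Parallel: items are handed out to several workers. The partial
    # results still have to be gathered back, and that communication
    # is where the distance between cores starts to matter.
    # Swap in ProcessPoolExecutor for real multi-core CPU work in CPython.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(square, values))


if __name__ == "__main__":
    data = [1, 2, 3, 4]
    print(running_total(data))     # [1, 3, 6, 10]
    print(parallel_squares(data))  # [1, 4, 9, 16]
```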

If the cores working together are physically far apart, the communication between them can become a performance bottleneck, as we’ll see next.

Disaggregated processors and latency

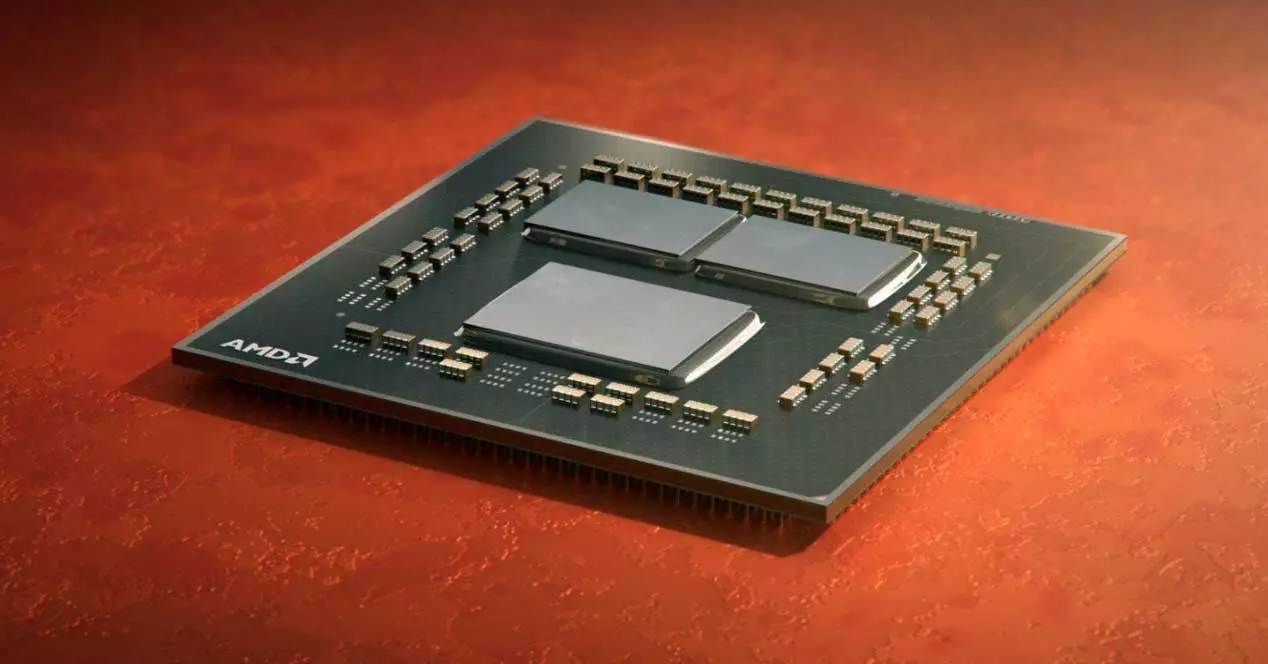

One of the biggest problems when running code in parallel is latency. Today most chips are monolithic, that is, made in a single piece. The exception is AMD, which has been disaggregating its desktop Ryzen processors for some time, especially the Ryzen 9. These use two separate chips called CCDs (core complex dies), each containing complete cores with their cache levels.

Taking a Ryzen 9 5950X as an example, we find the following:

- The latency between a core and its immediate neighbor is 6.2 nanoseconds.

- Because the cores in a CCD are connected by a ring, where data advances from one stop to the next each clock cycle instead of reaching every core at once, the latency to the other cores in the same CCD rises to as much as 19.2 nanoseconds.

- If a core on one CCD has to communicate with a core on the other CCD, latency climbs to 83.6 nanoseconds.
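To put the three figures side by side, here is a rough lookup model. It is only a sketch under stated assumptions: 16 cores numbered 0 to 15, with 0 to 7 on the first CCD and 8 to 15 on the second, and consecutive numbers (wrapping around) being ring neighbors. `approx_latency_ns` is a hypothetical helper that returns the worst-case 19.2 ns for every non-adjacent pair on the same CCD.

```python
# Latency figures quoted in the article for a Ryzen 9 5950X, in nanoseconds.
NEIGHBOR_NS = 6.2
SAME_CCD_MAX_NS = 19.2
CROSS_CCD_NS = 83.6

# Assumption: 16 cores split evenly over two CCDs, numbered in ring order.
TOTAL_CORES = 16
CORES_PER_CCD = 8


def ccd_of(core):
    # Assumes cores 0-7 sit on CCD 0 and cores 8-15 on CCD 1.
    return core // CORES_PER_CCD


def approx_latency_ns(a, b):
    """Rough core-to-core latency using the article's three figures."""
    for core in (a, b):
        if not 0 <= core < TOTAL_CORES:
            raise ValueError(f"core {core} out of range")
    if a == b:
        raise ValueError("a core does not need to talk to itself")
    if ccd_of(a) != ccd_of(b):
        return CROSS_CCD_NS
    # Distance around the ring inside one CCD (the ring wraps around).
    step = abs(a - b)
    hops = min(step, CORES_PER_CCD - step)
    if hops == 1:
        return NEIGHBOR_NS
    # Any other pair on the same CCD: use the article's upper bound.
    return SAME_CCD_MAX_NS


if __name__ == "__main__":
    for pair in [(0, 1), (0, 5), (3, 12)]:
        print(pair, approx_latency_ns(*pair), "ns")
    print(round(CROSS_CCD_NS / NEIGHBOR_NS, 1), "x slower across CCDs")
```

Even in this simplified model, crossing to the other CCD costs about 13.5 times as much as talking to a neighboring core.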

As you can see, splitting the cores apart hurts performance. And AMD’s design is not even two completely separate CPUs: the memory controller sits in the IOD (I/O die), so both CCDs share the same access to memory. That is not the same as having two fully independent processors.

Power consumption is higher with two or more processors

Another point to consider is that moving data between components costs more energy the farther it has to travel: longer wires have to be charged and discharged, and signals that leave the chip need power-hungry I/O drivers. That is why it is better to keep the cores as close together as possible, ideally on the same chip. Of course, that approach has its own limits, such as the speed of internal communication and the maximum chip size that can be manufactured. So adding a second processor would not just raise the electricity bill: the pair would also consume more than the two chips working separately, because an additional bus would be needed to connect them.

Getting many chips to communicate is difficult

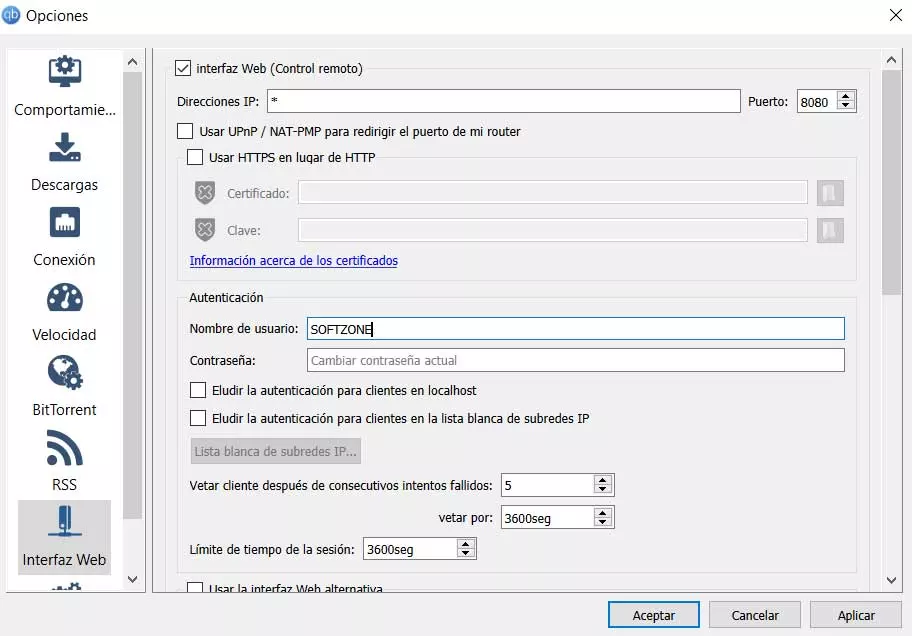

Let’s start from the fact that every CPU has a set of interfaces for communicating with the outside world: on one hand, the one that lets it access RAM and, on the other, the one that connects it to the peripherals. But here we want all the processors to collaborate on a common task. How do we get two processors to work together without conflicts of any kind?

- To start, we must split the RAM into separate spaces, one for each processor.

- The operating system kernel runs on the first processor, but not every process does, so a coherence system between both sides is needed. That is, we must build a data synchronization mechanism between the two processors so that duplicated variables always hold the same value.
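The synchronization requirement in the second point can be pictured with a toy model. This is our own illustration, not how any real chip implements it: `ToyCoherentSystem` is a hypothetical class in which each processor has a private memory space and every write is copied to the other processors (a write-update policy), so duplicated variables never disagree. It also counts the update messages, which hints at why more chips mean more traffic between them.

```python
class ToyCoherentSystem:
    """Toy model: each processor keeps its own copy of shared variables.

    Every write is broadcast to all other copies (write-update), so
    duplicated variables always match. Real hardware protocols such as
    MESI usually invalidate other copies instead of updating them.
    """

    def __init__(self, num_processors):
        # One private memory space (a dict) per processor.
        self.memories = [dict() for _ in range(num_processors)]
        self.messages = 0  # inter-processor updates sent so far

    def write(self, cpu, name, value):
        self.memories[cpu][name] = value
        # Propagate the new value to every other processor's copy.
        for other, memory in enumerate(self.memories):
            if other != cpu:
                memory[name] = value
                self.messages += 1

    def read(self, cpu, name):
        # Reads stay local, so they never cross the link between chips.
        return self.memories[cpu][name]


if __name__ == "__main__":
    system = ToyCoherentSystem(2)
    system.write(0, "counter", 1)
    print(system.read(1, "counter"), system.messages)  # 1 1
```

With four processors, a single write in this model already costs three messages, one per other chip.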

Today’s multicore processors already handle all of this internally. Things get complicated, however, when the cores are split across different chips, each with its own peripheral and memory interfaces. Back when processors had a single core, coordinating two chips, or even four, was relatively easy.

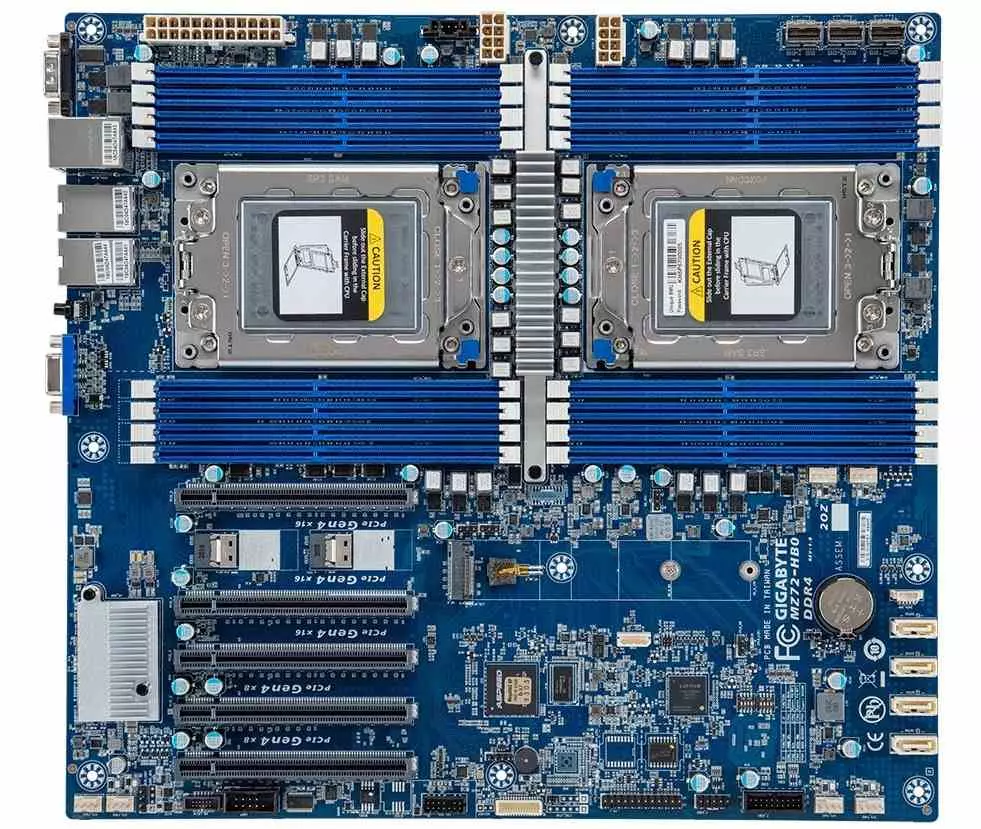

That is precisely why servers rarely have more than four processors. Beyond that point, the latency between cores could wipe out the performance gain entirely, and the communication between chips becomes very complex. If every processor is linked directly to every other one, each chip needs n − 1 links and the system needs n(n − 1)/2 links in total: 4 processors require 6 interconnections, but 8 require 28.
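Assuming a full mesh, where every processor has its own point-to-point link to every other one, the interconnect count grows roughly with the square of the number of chips. The helper names below are hypothetical, and real multi-socket platforms often use fewer links and forward some traffic through an intermediate processor, so treat this as the count for fully direct wiring.

```python
def full_mesh_links(n):
    """Point-to-point links needed to wire n processors directly to each other."""
    # Each processor links to the other n - 1; every link joins two
    # processors, so the n * (n - 1) endpoints are halved.
    return n * (n - 1) // 2


def ports_per_processor(n):
    # Link interfaces each chip needs in a full mesh.
    return n - 1


if __name__ == "__main__":
    for n in (4, 8):
        print(f"{n} processors: {full_mesh_links(n)} links, "
              f"{ports_per_processor(n)} ports per chip")
    # 4 processors: 6 links, 3 ports per chip
    # 8 processors: 28 links, 7 ports per chip
```

Doubling the processor count from four to eight more than quadruples the number of links, which is why large systems tend to drop direct wiring in favor of shared or routed interconnects.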