By announcing a tool to fight child pornography on the iPhone, Apple has set off a chain of events that has landed it in the middle of a fierce controversy over its privacy policy.

Apple is currently at the heart of a controversy over privacy. The company, although better known for its firm stance in favor of protecting personal data, is being criticized by advocacy groups and specialists over the installation of a new tool on its iPhones.

The fear of authoritarian abuses

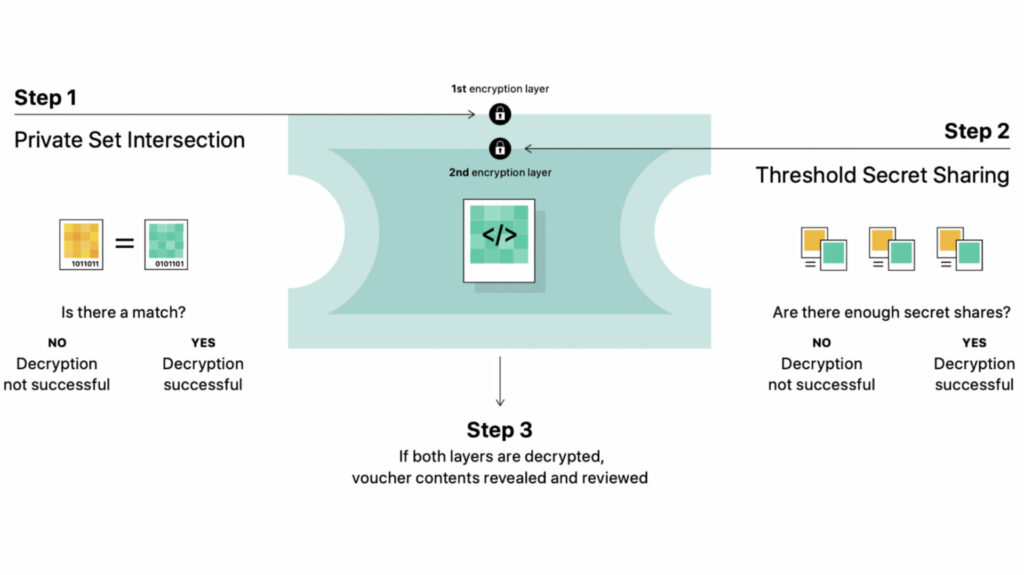

Named NeuralMatch, this system, which will arrive with a future update, aims to identify potential child pornography content shared by iPhone owners. Technically, the system only compares the digital signature of a photo with those contained in a database provided by the NCMEC (National Center for Missing & Exploited Children), a US organization dedicated to child protection.

According to many privacy advocates, however, the mechanism is particularly intrusive. Each photo sent to iCloud will be scanned locally on the phone and assigned a unique (and encrypted) signature, which is supposed to allow potentially illegal content to be identified. The fear is that this tool, designed to fight child exploitation, will be hijacked by authoritarian governments to identify content critical of those in power.
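To make the mechanism concrete, here is a minimal sketch of signature matching against a database. It is an illustration under stated assumptions, not Apple's implementation: the function names and sample data are hypothetical, and SHA-256 stands in for the signature, whereas a system of this kind would need a perceptual hash that survives resizing and recompression.

```python
import hashlib


def signature(photo_bytes: bytes) -> str:
    """Return a stand-in signature for a photo.

    Assumption: SHA-256 is used here only for illustration. It matches
    byte-identical files only, unlike a perceptual hash.
    """
    return hashlib.sha256(photo_bytes).hexdigest()


def matches_database(photo_bytes: bytes, known_signatures: set) -> bool:
    """Check whether the photo's signature appears in the supplied database."""
    return signature(photo_bytes) in known_signatures


# Hypothetical database: in the article's description it is provided by a third party.
known = {signature(b"known-flagged-image")}

print(matches_database(b"known-flagged-image", known))  # True
print(matches_database(b"holiday-photo", known))        # False
```

Note that the matching code knows nothing about what the signatures represent: whoever controls the database decides what gets flagged, which is the core of the critics' concern.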

Criticism from Edward Snowden

“Governments that ban homosexuality may require the tool to be trained to identify LGBTQ+ content. An authoritarian regime may require it to be able to spot popular satirical images or protest flyers,” the EFF warns on its site. The well-known American NGO for the defense of digital rights does not hesitate to describe the solution as a “backdoor,” an allusion to the weaknesses intentionally built into computer systems to allow espionage. “Don’t be fooled, this is a degradation of privacy for all iCloud Photos users, not an improvement,” the NGO writes.

No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.

They turned a trillion dollars of devices into iNarcs—*without asking.* https://t.co/wIMWijIjJk

– Edward Snowden (@Snowden) August 6, 2021

The argument is echoed by the famous whistleblower Edward Snowden, who added in a tweet that “no matter how well-intentioned, Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.” Asked by the Financial Times, Ross Anderson, a security engineering specialist, called the idea “absolutely appalling.”

“The shrill voices of the minority”

For its part, Apple defends itself by explaining that “its method of identifying child pornography is designed with the protection of privacy in mind.” By comparing only the cryptographic signatures of the photos, the company explains that it “learns nothing about images that do not match an entry in the database.” Only iCloud accounts that are flagged multiple times will trigger an alert, which is then followed by human review, the company says.
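The threshold rule Apple describes can be sketched as follows. This is a simplified illustration, not the company's code: the threshold value, the function name and the account names are hypothetical, since the article only says that an account must be flagged "multiple times" before human review.

```python
from collections import Counter

# Hypothetical value: the article does not state the actual threshold.
REVIEW_THRESHOLD = 3


def accounts_for_review(match_events, threshold=REVIEW_THRESHOLD):
    """Return accounts whose number of database matches reaches the threshold.

    `match_events` holds one account identifier per matched photo.
    Accounts below the threshold are not surfaced at all.
    """
    counts = Counter(match_events)
    return sorted(acct for acct, n in counts.items() if n >= threshold)


# Hypothetical match log: one entry per photo that matched the database.
events = ["alice", "bob", "alice", "alice", "carol", "bob"]
print(accounts_for_review(events))  # ['alice']
```

In this sketch a single match, or even two, leads to nothing. Only the account that reaches the threshold is passed on for human review.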

An internal note obtained by the AppleInsider site nevertheless shows that the company is aware of the delicate position it is in. “We know that some people have misgivings and others worry about the implications of such a tool, but we will continue to explain and detail its functionality so that people understand what we have built,” an Apple official writes. In the same message, the NCMEC regrets the outcry and describes the critics as the “shrill voices of the minority.” Those concerned will no doubt appreciate the description.