The fact is that two of the key technologies, Mesh Shaders and Ray Tracing, require changes to the architecture of the integrated graphics processor that have occurred in neither the A15 nor the M2. The same goes for the equivalent of DirectStorage: it needs mechanisms that give direct access to the system's SSD, and so far none have been made public. What is our complaint? The message from Cupertino implies that these technologies are already available and will be accessible with a simple operating system update, when this is not the case.

The reality is that a good part of these technologies have been on PCs for a long time. DirectX 12 Ultimate was officially launched in May 2020, and the latest generation of graphics cards, the NVIDIA RTX 30 and AMD RX 6000 series, already supports them. So Apple is still trailing behind when it comes to computer video games.

Why is the Apple M2 not a gaming chip?

Extraordinary claims require proof of the same caliber. We know it is not enough for us to simply say that the Apple M2 falls short for video games; you need proof. Cupertino, however, has very powerful marketing that sinks in deep, which is why it is important to dot the i's and cross the t's.

Graphics technology for mobile, not for PC

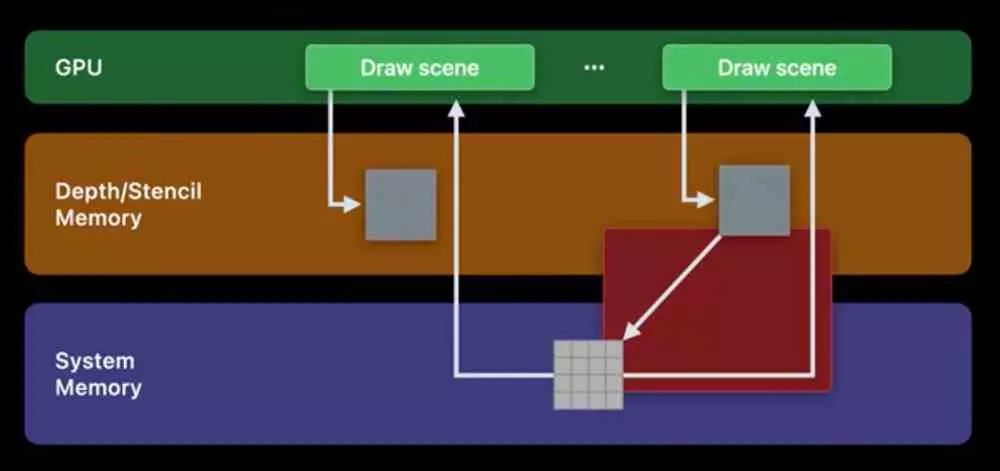

The graphics processor is a Tile Renderer. This type of architecture divides each frame into small blocks that can be rendered using the chip's internal memory, which reduces its dependence on external memory and, specifically, on external memory bandwidth. As a result, it can work with simpler memory configurations.
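To make the idea concrete, here is a minimal sketch of the arithmetic behind tiling. The 32x32 tile size and the 8 bytes per pixel (4 for color, 4 for depth) are illustrative assumptions, not Apple's published figures, and the function names are hypothetical.

```python
import math

def tile_grid(width, height, tile=32):
    """Return (columns, rows) of tiles needed to cover a width x height frame."""
    return math.ceil(width / tile), math.ceil(height / tile)

def tile_bytes(tile=32, bytes_per_pixel=8):
    """On-chip bytes needed for one tile (assumed: 4 B color + 4 B depth per pixel)."""
    return tile * tile * bytes_per_pixel

def frame_bytes(width, height, bytes_per_pixel=8):
    """Bytes needed to hold the same buffers for the whole frame at once."""
    return width * height * bytes_per_pixel

cols, rows = tile_grid(1920, 1080)
print(f"{cols} x {rows} = {cols * rows} tiles")
print(f"per tile: {tile_bytes()} bytes, whole frame: {frame_bytes(1920, 1080)} bytes")
```

Under these assumptions, the working set for one tile is a few kilobytes, while the same buffers for a full 1080p frame run to many megabytes. That gap is what lets a tile renderer keep its intermediate work on chip.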

This type of architecture makes them much more efficient as integrated graphics, since they depend less on memory bandwidth. In an integrated Intel or AMD GPU, the bandwidth of the RAM, which is shared with the CPU, is a bottleneck; in the Apple GPU, in most cases, it is not. As we will see later, though, this is not entirely true.

This makes them ideal for environments limited by space and power consumption. That is why Tile Renderers, although they have achieved nothing on PC, have succeeded in battery-powered devices such as mobile phones and tablets. Let's not forget that the chips in today's Macs come from Apple's technology for iPhones and iPads. After all, the M1 derives from the A14 and the current M2 from the A15. The difference? The M2 has an integrated GPU with twice as many cores as the A15, as stated in the official specifications of both devices.

What disadvantage does this approach have?

Because the texture maps used in games are large and cannot be loaded in their entirety into the GPU's internal memory, they must be stored in external video memory. This completely breaks the advantage of a Tile Renderer, which becomes severely bandwidth-starved during the texturing stage of the 3D pipeline. That is precisely the stage that needs the most computing power, since the graphics chip has to texture millions of pixels.
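A rough calculation shows the scale of the mismatch. The sketch below assumes an uncompressed 4096x4096 texture at 4 bytes per texel and a hypothetical 8 KiB on-chip tile buffer; real games use compressed texture formats and real chips have larger caches, so treat the numbers as illustrative only.

```python
def texture_bytes(width, height, bytes_per_texel=4, mipmaps=True):
    """Uncompressed size of a 2D texture; a full mip chain adds roughly one third."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_texel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, down to 1x1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total

ON_CHIP_TILE_BYTES = 8 * 1024  # hypothetical on-chip tile buffer, not an Apple figure

base = texture_bytes(4096, 4096, mipmaps=False)
full = texture_bytes(4096, 4096)
print(f"base level: {base} bytes, with mip chain: {full} bytes")
print(f"base level is {base // ON_CHIP_TILE_BYTES}x the assumed tile buffer")
```

Even a single texture of this size is thousands of times larger than the assumed tile buffer, so every texture fetch that misses the cache has to go out to external memory.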

This is why the visual quality of the Apple versions of certain games is disappointing. Cutting memory bandwidth also cuts the amount of information the GPU can manipulate and process, resulting in lower-fidelity graphics than we can get on a PC for the same price. Apple cannot use GDDR memory because it would drive up power consumption and would not fit its industrial designs.

That is why Apple talks about energy consumption, which is a trap: at low power, any graphics architecture is much more efficient, although tile renderers do have an advantage here. The problem comes when they scale up and we need performance; then the efficiency curve falls apart. If tiled rendering were the best option, NVIDIA and AMD would have adopted it long ago. The reality? It has been relegated to the mobile device market, and not on a whim.

For the same price we can find a better PC

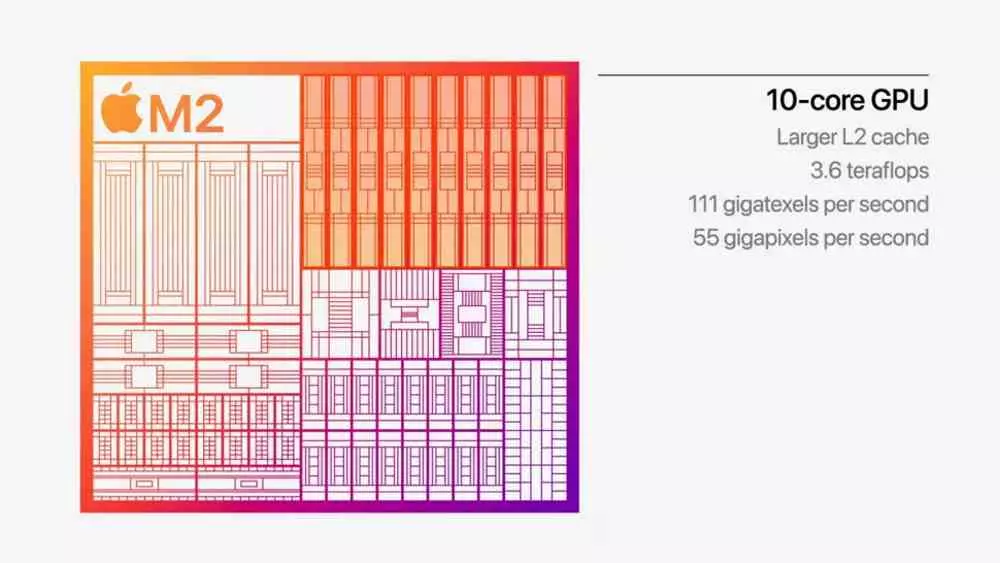

Apple has said very little about the technical specifications of the M2's graphics processor. To begin with, the M2 GPU does not appear to be a substantial architectural change from its predecessor, except that it now has 10 cores instead of 8, although there is also an 8-core configuration. In any case, the technical specifications are as follows:

To put things in perspective, the weakest current-generation dedicated graphics card we can find on the market is AMD's RX 6500 XT, which delivers 5.8 TFLOPS, a texturing rate of 180.2 gigatexels per second and a fill rate of 90 gigapixels per second. In other words, in terms of raw power, even the least powerful dedicated graphics card worthy of the name sits above the M2. And that is without mentioning superior options such as AMD's own RX 6600 or NVIDIA's RTX 3050.
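The gap can be expressed as simple ratios. The RX 6500 XT figures below are the ones quoted above; the M2 figures (3.6 TFLOPS, 111 gigatexels per second, 55 gigapixels per second for the 10-core GPU) are an assumption taken from Apple's launch announcement and are not stated in this article.

```python
# Figures quoted in the text for AMD's RX 6500 XT.
RX_6500_XT = {"tflops": 5.8, "gigatexels_s": 180.2, "gigapixels_s": 90.0}

# Assumption: 10-core M2 GPU figures as announced by Apple, not given in this article.
M2_10_CORE = {"tflops": 3.6, "gigatexels_s": 111.0, "gigapixels_s": 55.0}

def ratios(a, b):
    """Return a[k] / b[k] for every metric, rounded to two decimals."""
    return {k: round(a[k] / b[k], 2) for k in a}

for metric, value in ratios(RX_6500_XT, M2_10_CORE).items():
    print(f"{metric}: RX 6500 XT is {value}x the M2")
```

If those M2 figures hold, the entry-level dedicated card comes out roughly 1.6 times ahead on all three metrics.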

If you are looking for a laptop to play video games, keep one thing very clear: a dedicated graphics card is essential. It is true that an ultra-slim, low-power computer with advanced gaming capabilities would be ideal. Unfortunately, this is impossible to build.

Does this mean that the M2 is a bad chip and so are Apple computers?

Not at all, but contrary to what many are trying to make you believe, we are not dealing with hardware optimized for video games. No one serious is going to build a gaming PC around an integrated GPU, and it is as an integrated GPU that the Cupertino graphics processor gets a good grade. The reason? In raw power it is comparable to the integrated graphics of the Ryzen 7 6800U, a processor we can find in models such as the new 13-inch ultraportable ASUS ZenBook S 13 OLED. Nobody dares to call that computer a gaming PC, and by the same logic we should not say it of any Mac based on the Apple M2.