Jensen Huang, founder and CEO of NVIDIA, delivered the keynote that opened Computex 2023, one of the most important technology fairs of the year, which began today in Taipei, Taiwan, and runs until June 2. That NVIDIA was the technology company chosen for the opening is already quite significant and, as was to be expected, the company made sure the presentation lived up to expectations.

Most of the announcements, it must be said, were aimed at the professional sector, unlike what we are used to seeing at more consumer-oriented events such as CES. We learned of very interesting developments that will undoubtedly affect our day-to-day lives, although they will do so through performance improvements in the data centers and infrastructure behind the products and services we already use daily, as well as those yet to come.

However, among the announcements there is one that will have a more direct impact on end users, especially the vast majority of PC users who spend at least part of their time at the computer playing games. This is a field in which NVIDIA has a lot to say, and to which it has made fundamental contributions related to artificial intelligence. AI is known to be a priority for NVIDIA, something we had fresh confirmation of last week and that is confirmed again today with the announcement of NVIDIA ACE.

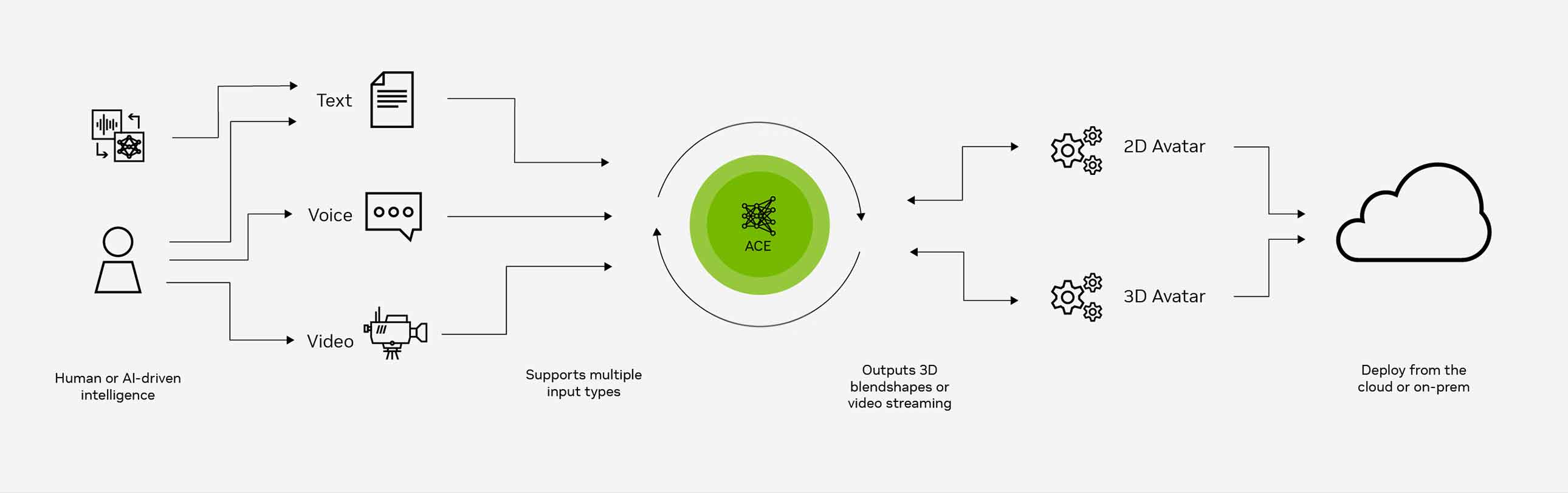

Behind the acronym ACE is Avatar Cloud Engine, a technology based on a generative artificial intelligence model that will serve to substantially improve interactions with the NPCs (non-playable characters) of the titles that integrate it, by making them able to hold more natural dialogues. The video above shows the demo presented at Computex 2023, in which Kai, a playable character, enters a futuristic room and has a conversation with an NPC, Jin. Jin’s responses to Kai’s lines are generated in real time by the AI model.

It is true that, examined closely, Jin’s answers can seem somewhat rigid and excessively formal (especially considering that many games nowadays seem to compete over which uses the most aggressive language), but we can assume this will be tuned over time. What is really interesting is to think about the universe of possibilities that opens up with NVIDIA ACE: just as the NPC in the demo responds to Kai’s phrases, nothing prevents the same technology from being used to respond to anything we want to say ourselves.

If this AI model has a wide enough context window, it can be used to do away with some very annoying aspects of how NPCs currently work, such as the constant repetition of the same lines of dialogue or their failure to react to context. A technology like NVIDIA ACE could therefore put an end to these problems, which often completely break the game’s immersion and, at best, merely raise a laugh.

Avatar Cloud Engine has three fundamental components, which complement one another:

- NVIDIA NeMo: As already mentioned, NVIDIA ACE is based on a generative model, and NeMo is the component responsible for building, customizing and deploying it. This fine-grained control over the model will allow developers to build into it all the context needed for the responses to fit the game correctly. In addition, developers will be able to use NeMo Guardrails to prevent conversations and responses that should not occur.

- NVIDIA Riva: This component is mainly responsible for improving the interactions between players and NPCs. It provides automatic speech recognition to process what the player says, and text-to-speech to take the response generated by the AI model and play it back with the voice and speech patterns assigned to the NPC.

- NVIDIA Omniverse Audio2Face: To round off the credibility of the result, and as its name indicates, this component is in charge of “listening” to the spoken version of the response generated by the AI model and, automatically and immediately, generating the facial animation needed for the NPC’s expression to match what it is saying.
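To make the division of labor between these three components more concrete, here is a minimal sketch of how one exchange between player and NPC could flow through them. It is only an illustration: every class and function name below (`NpcPipeline`, `NpcTurn`, `build_demo_pipeline` and the `fake_*` helpers) is hypothetical, none of them are NVIDIA APIs, and the stand-in functions simply pass text around so the example runs without any speech or AI model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class NpcTurn:
    """Everything produced for one exchange between the player and the NPC."""
    player_text: str
    reply: str
    voice: bytes
    face_frames: List[str]


@dataclass
class NpcPipeline:
    """Hypothetical wiring of the roles described above; not an NVIDIA API."""
    speech_to_text: Callable[[bytes], str]           # role of Riva (speech recognition)
    generate_reply: Callable[[List[str], str], str]  # role of the NeMo generative model
    is_allowed: Callable[[str], bool]                # role of NeMo Guardrails
    text_to_speech: Callable[[str], bytes]           # role of Riva (text-to-speech)
    animate_face: Callable[[bytes], List[str]]       # role of Audio2Face
    fallback: str = "I'd rather not talk about that."
    history: List[str] = field(default_factory=list)

    def respond(self, player_audio: bytes) -> NpcTurn:
        player_text = self.speech_to_text(player_audio)
        reply = self.generate_reply(self.history, player_text)
        if not self.is_allowed(reply):
            reply = self.fallback  # swap in a safe line instead of the blocked reply
        # Keep the conversation so later replies can take context into account.
        self.history += [f"player: {player_text}", f"npc: {reply}"]
        voice = self.text_to_speech(reply)
        frames = self.animate_face(voice)
        return NpcTurn(player_text, reply, voice, frames)


# Stand-ins (assumptions for illustration only): "audio" is just UTF-8 text.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_model(history: List[str], player_text: str) -> str:
    turn_number = len(history) // 2 + 1
    return f"(turn {turn_number}) I heard: {player_text}"


def fake_guardrail(reply: str) -> bool:
    return "forbidden" not in reply.lower()


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


def fake_audio2face(voice: bytes) -> List[str]:
    # One placeholder animation frame per spoken word.
    return [f"frame:{word}" for word in voice.decode("utf-8").split()]


def build_demo_pipeline() -> NpcPipeline:
    return NpcPipeline(fake_asr, fake_model, fake_guardrail, fake_tts, fake_audio2face)


if __name__ == "__main__":
    pipeline = build_demo_pipeline()
    for line in (b"hello Jin", b"tell me something forbidden"):
        turn = pipeline.respond(line)
        print(turn.reply, len(turn.face_frames))
```

In a real integration each stand-in would be replaced by a call to the corresponding service; the point of the sketch is only the order of the steps and the fact that the guardrail check sits between generation and speech.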

At the moment, no game has been announced that will integrate this technology, and we may still have to wait a while for one. Nevertheless, it seems safe to assume that NVIDIA already has a project under way with some developer, so it is only a matter of time before NPCs become much more interesting than they are today.
