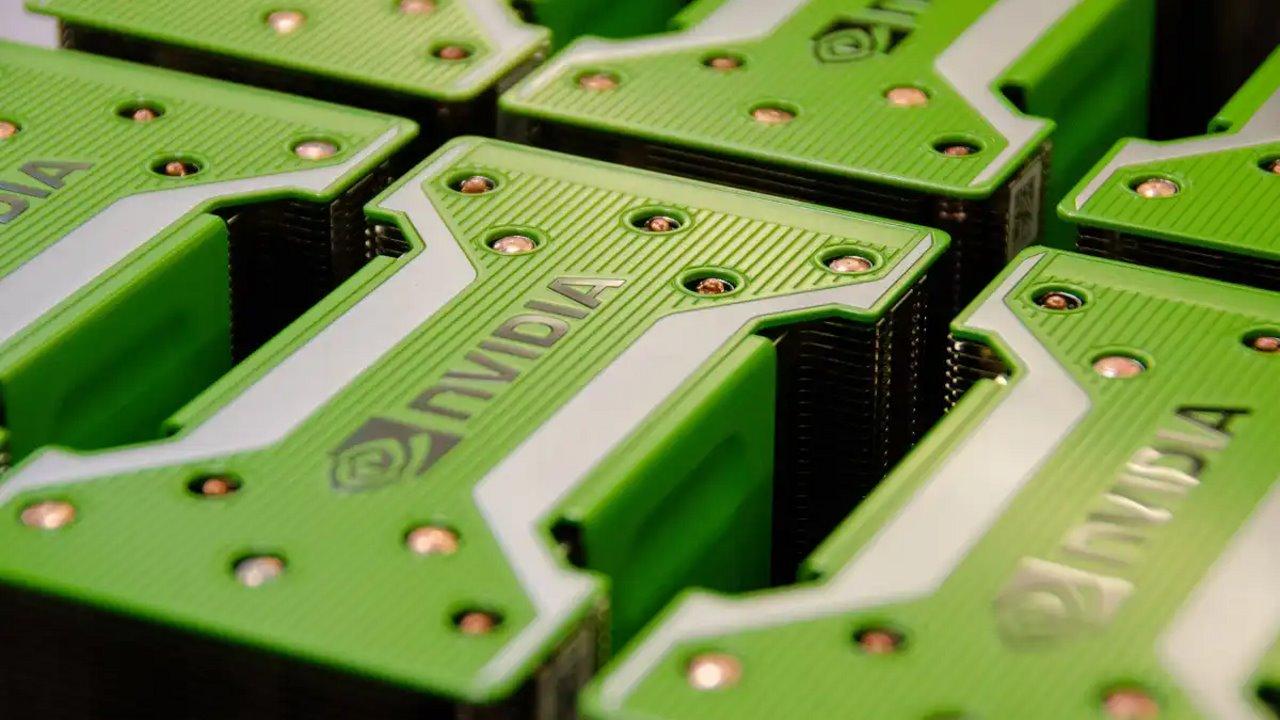

Artificial intelligence is undeniably the hot topic of the moment, and the big tech companies have started a race to dominate it, investing huge sums to outperform the competition. In this race, NVIDIA and OpenAI might be working on a new project that would combine not thousands, not even hundreds of thousands… but as many as 10 million GPUs dedicated to AI.

NVIDIA and OpenAI have already collaborated on projects such as ChatGPT, whose latest model (GPT-4) runs on several thousand NVIDIA graphics cards designed for AI workloads. According to the latest reports, the graphics card giant has more recently supplied OpenAI with 20,000 graphics cards, and that number will grow in the coming months… but this is just the tip of the iceberg. Let's take a look.

OpenAI will create one AI model to rule them all

Deploying 100,000 GPUs on a single project would already be quite a milestone, one that requires a truly massive infrastructure, so a full 10 million graphics cards seems perhaps too ambitious. However, according to Wang Xiaochuan, founder of the Chinese search engine Sogou, OpenAI is already working on an AI computing model capable of connecting 10 million GPUs together.

Of course, as the saying goes, that is easier said than done: a system being able to connect 10 million graphics cards to each other does not mean such a deployment can actually be built, especially given the technical requirements it would entail.

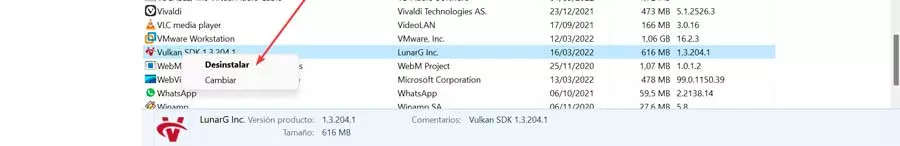

Perhaps to bolster his credibility, Wang himself has invested in a new Chinese artificial intelligence company called Baichuan Intelligence, which aspires to become OpenAI's main competitor. Notably, this company has already released a language model called Baichuan-13B whose hardware requirements are modest enough to run on a consumer graphics card such as an RTX 3090.

But back to the main point: 10 million AI GPUs is an astronomical figure that seems exaggerated in physical terms (imagine the infrastructure needed just to supply power and cooling to all of them), especially since NVIDIA is estimated to have a production capacity of about 1 million AI GPUs per year. At that rate, it would take at least 10 years to make this project a reality.

Keep in mind that NVIDIA is working with TSMC to increase chip supply and production, although the scale of their agreement is unknown. In other words, even though NVIDIA is already expanding its production capacity, there is no telling by how much, and it will hardly grow tenfold, which is what it would take to deliver 10 million GPUs for this OpenAI project in a single year.

Realistically, this project seems somewhat utopian and out of reach, and it is hard for us to imagine a data center with 10 million interconnected graphics cards. Still, dreaming is free, and such an infrastructure would surely put them at the pinnacle of AI dominance.