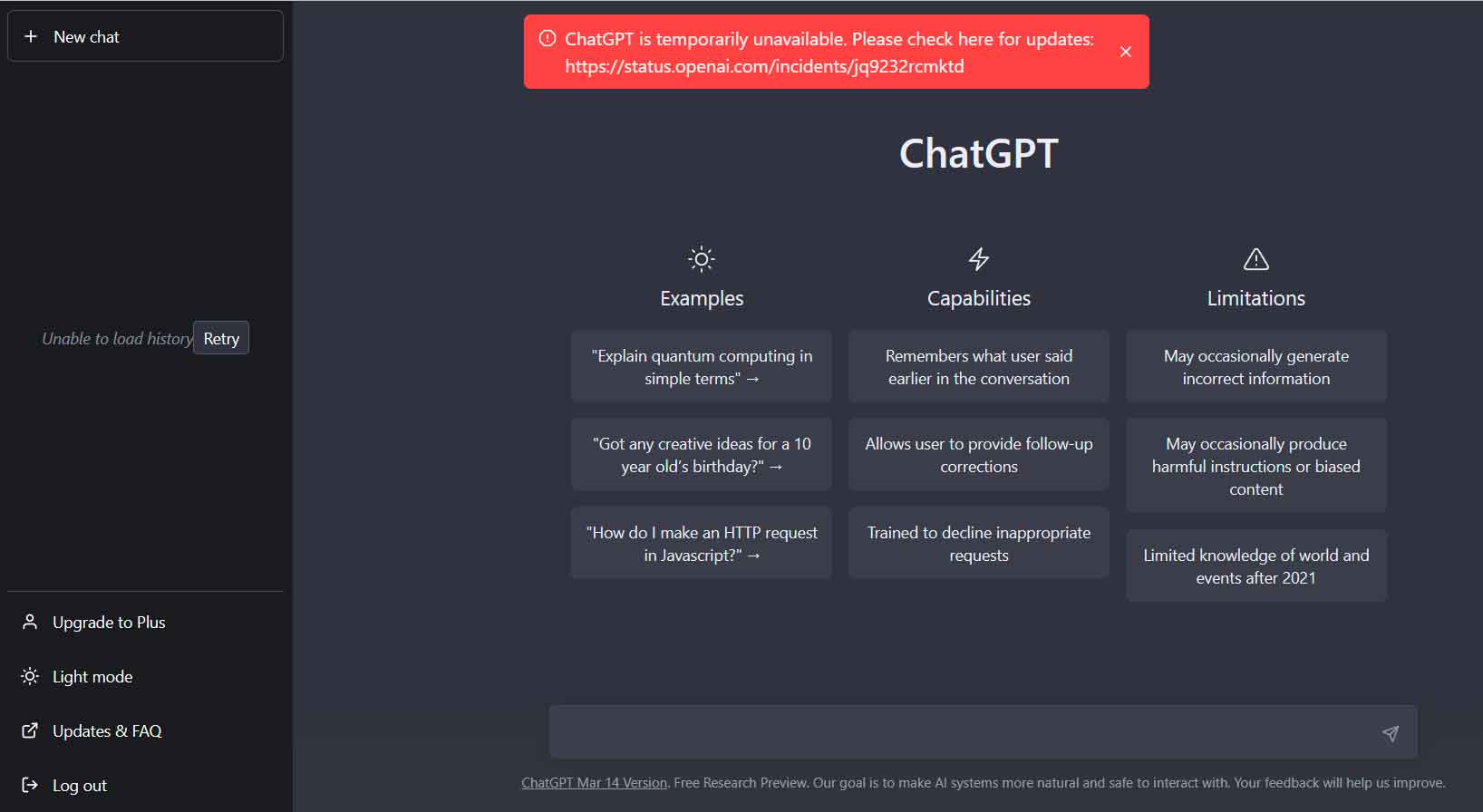

As you may remember from our earlier coverage, ChatGPT experienced a multi-hour outage last Monday. The incident was resolved over the course of the day, although some users did not recover their conversation history until a few hours after it was considered resolved. From the information available on the incident status page, everything pointed to a bug that affected the chatbot’s operation, nothing more.

This outage led me to reflect on what we must take into account when integrating an artificial intelligence model into any facet of our lives: work, business, study, and so on. I have lost count of the number of times I have mentioned how useful these services are and how beneficial they can be in a multitude of cases, but always keeping in mind that we cannot depend on them exclusively, just as we cannot depend exclusively on a smartphone to open the front door, because if we are left without the smartphone, we are left unable to get into the house.

However, over the following days we have learned that what happened on Monday was much more than it seemed at first, since it was not an outage but a voluntary shutdown. And this is not a rumor or a leak: it comes from the official OpenAI blog, which reports on what happened in an entry that begins as follows:

«We took ChatGPT offline earlier this week due to a bug in an open source library that allowed some users to see titles of another active user’s chat history. It is also possible that the first message of a newly created conversation was visible in someone else’s chat history if both users were active at the same time.»

This, by itself, is already a rather worrying privacy problem, but it pales in comparison with what we find when we continue reading:

«Upon further investigation, we also discovered that the same error may have caused the unintentional visibility of payment-related information of 1.2% of ChatGPT Plus subscribers who were active during a specific nine-hour period. In the hours before we took ChatGPT offline on Monday, some users could see another active user’s first and last name, email address, payment address, last four digits (only) of a credit card number, and credit card expiration date. Full credit card numbers were not disclosed at any time.»

From that point on, OpenAI says that the circumstances required for a user’s information to be exposed are extremely specific, and so it plays down the number of users who may have been affected by this problem. It also states that all of them have already been informed, and it shares a message that tries to reassure them, stating that their security will not be compromised by what happened.

The problem is that the mere exposure of personal data already compromises security, because if that data is disseminated and ends up in the wrong hands, it can be used in spear phishing attacks. In practice, ChatGPT users, and especially those on the Plus plan, will have to treat unexpected communications with particular suspicion.

I must admit, and this is a positive point, that OpenAI did report the exact causes of the incident, as well as the measures taken to solve the problem. The cause lies in a problem with Redis-py, a Python client for Redis, an in-memory database that stores key-value data and is used by the OpenAI chatbot.
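
To picture what a key-value store does in this context, here is a minimal sketch in plain Python. It does not use Redis or Redis-py, and it is not OpenAI’s code: the `UserCache` class, the `history_key` helper, the key format and the sample data are all hypothetical, chosen only to illustrate how data cached per user is stored and looked up by key.

```python
from typing import Dict, Optional


class UserCache:
    """Hypothetical in-memory key-value store, standing in for a cache such as Redis."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def set(self, key: str, value: str) -> None:
        # Store (or overwrite) the value under the given key.
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        # Return the stored value, or None if the key is unknown.
        return self._data.get(key)


def history_key(user_id: str) -> str:
    # Assumed key format: each user's cached data lives under its own key.
    return f"chat_titles:{user_id}"


cache = UserCache()
cache.set(history_key("alice"), "Trip planning")
cache.set(history_key("bob"), "Tax questions")

alice_titles = cache.get(history_key("alice"))
bob_titles = cache.get(history_key("bob"))
missing = cache.get(history_key("carol"))  # no entry was stored for this user
```

In a setup like this, every user gets the right data only as long as each lookup uses the right key and each response reaches the user who asked for it. That is why a fault in the client layer between the application and the store can, in principle, turn into a privacy problem.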