There is no doubt that the cost of electricity will rise due to the greed of politicians and their measures, which are easy to announce but are paid for with everyone's money. That is not our topic here, though. Even though we know the electricity bill has risen artificially, what interests us is explaining why graphics cards, processors and RAM consume more and more power. In other words, every component inside our PC.

It is inevitable that new chips raise the bill

If we look at any processor architecture, general or specialized, we will see that more than half of the circuitry, if not more than two-thirds, exists not to process data but to move it between the different parts. Today's chips can perform one or more functions at the same time, but they all boil down to three:

- Process data.

- Transmit data.

- Store data.

Although it may seem counterintuitive to many, processing information has an almost negligible cost, both in the number of transistors inside the processor and in energy. A good part of processor design goes into bringing the information to the execution units so that they can process it. The problem is that, due to the laws of physics, transporting bits is now more expensive than anything else a chip does, and one of the consequences is that chips consume more power and therefore raise the electricity bill.

This is called the Von Neumann wall (more commonly known as the von Neumann bottleneck). It is inherent in all architectures, whether we are talking about a server, a mobile phone or a video game console, and it has become the biggest headache for engineers today, all the more so given the need to reduce the carbon footprint.

Explaining the problem quantitatively

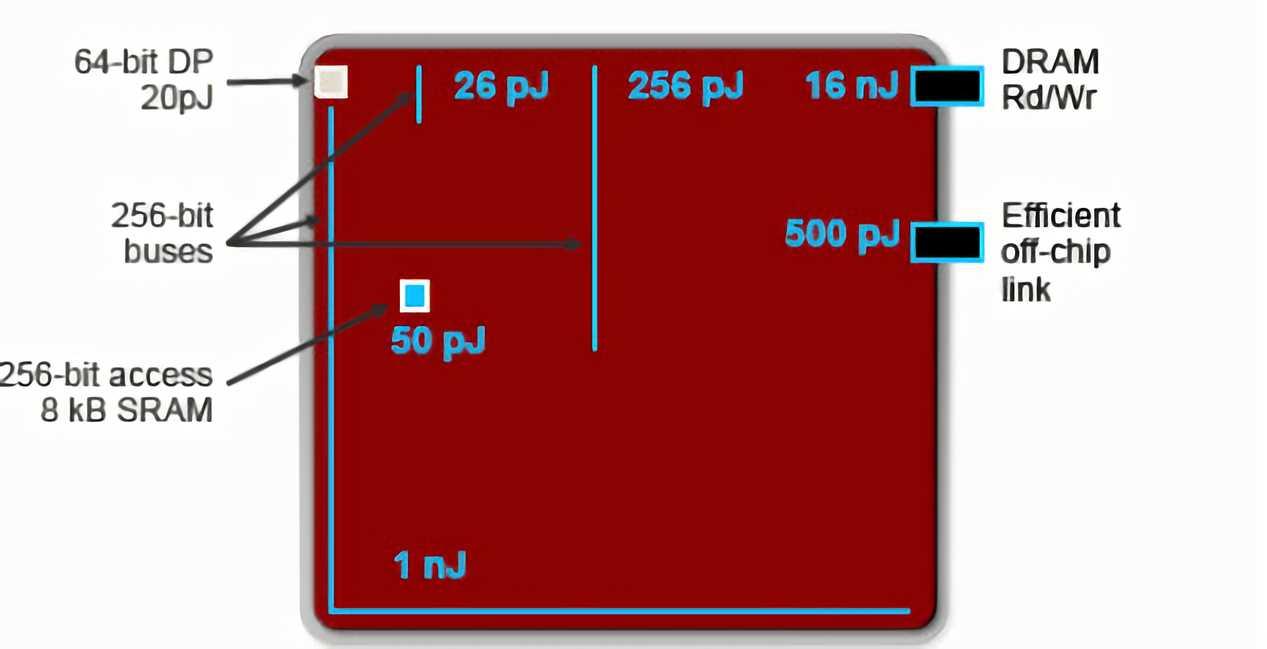

We usually give the power consumption of chips in watts (W), which are joules per second. Bandwidth, in turn, is measured in bytes or bits per second (two units that should not be confused). Since both are rates per second, dividing one by the other gives a simple way to measure a data transfer: how many joules it consumes. We owe the graph above to Bill Dally, NVIDIA's chief scientist and one of the world's foremost experts in computing architectures.

To begin with, one nanojoule (nJ) is 1000 picojoules (pJ). With that in mind, the energy needed to perform the same double-precision (64-bit) floating-point arithmetic operation varies depending on where the data is located:

- If the data is in the registers, the operation costs only 20 pJ, or 0.02 nJ.

- If we have to go to the cache to find it, the cost goes up to 50 pJ, or 0.05 nJ.

- If the data is in RAM, on the other hand, the cost rises to 16 nJ, or 16000 pJ.

In other words, when the data is in RAM, the same operation costs 800 times more energy than when the data is in the registers, and 320 times more than when it is in the cache: close to three orders of magnitude. If we add to this the communication between the internal components of a processor, as well as with the outside, we end up with chips capable of processing large volumes of data that at the same time need a large amount of energy to function.
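The ratios between the three figures listed above, and the way joules per operation turn into watts, can be checked with a short Python sketch. The energy values are the ones quoted in this article; the rate of one billion operations per second is a hypothetical number chosen only for illustration, and the function names are ours.

```python
# Energy per 64-bit floating-point operation, in picojoules,
# depending on where the data lives (figures quoted in the article).
ENERGY_PJ = {"registers": 20, "cache": 50, "ram": 16_000}


def cost_ratio(slower: str, faster: str) -> float:
    """How many times more energy the 'slower' location costs than the 'faster' one."""
    return ENERGY_PJ[slower] / ENERGY_PJ[faster]


def power_watts(location: str, ops_per_second: float) -> float:
    """Watts are joules per second: (joules per operation) * (operations per second)."""
    joules_per_op = ENERGY_PJ[location] * 1e-12  # 1 pJ = 1e-12 J
    return joules_per_op * ops_per_second


print("RAM vs registers:", cost_ratio("ram", "registers"))
print("RAM vs cache:", cost_ratio("ram", "cache"))

# Hypothetical workload: one billion operations per second (an assumption).
OPS_PER_SECOND = 1e9
for location in ENERGY_PJ:
    print(location, "->", power_watts(location, OPS_PER_SECOND), "W")
```

At that assumed rate, operands coming from the registers would cost around 0.02 W, while the same operations fed from RAM would cost around 16 W.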

What solutions will we see in the future?

At the moment they are only laboratory solutions, but they are proven and could change the way we understand PCs. There are two main approaches to the problem.

Near Memory Processing

The first of these is near-memory processing, which consists of moving memory very close to the processor. Unfortunately, we cannot place there the tens of gigabytes of RAM that we will see in PCs in the short term. What we can add is an additional, large cache level that greatly increases capacity, so that the data is more likely to be found in it and consumption goes down.
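Why a larger near level helps can be sketched as a weighted average. The Python example below assumes, purely for illustration, that the near level costs about as much as the cache figure quoted earlier (50 pJ) and that a miss costs the RAM figure (16000 pJ); the hit rates are hypothetical, and the model ignores the cost of the failed lookup on a miss.

```python
def average_energy_pj(hit_rate: float,
                      near_pj: float = 50.0,
                      far_pj: float = 16_000.0) -> float:
    """Average energy per operation when a fraction `hit_rate` of the
    operands is found in the near level and the rest comes from RAM.

    near_pj and far_pj default to the cache and RAM figures quoted in the
    article; treating the new near level as "cache-like" is an assumption.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * near_pj + (1.0 - hit_rate) * far_pj


# Hypothetical hit rates, chosen only to show the trend.
for hit_rate in (0.0, 0.5, 0.9, 0.99):
    print(f"hit rate {hit_rate:.2f}: {average_energy_pj(hit_rate):.1f} pJ per operation")
```

Under these assumptions, the average cost is dominated by the misses, which is why capacity (and therefore hit rate) matters so much more than shaving a few picojoules off the near level itself.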

Curiously, increasing the size of the caches is precisely the strategy NVIDIA has followed with its Lovelace architecture, which increased the L2 cache 16-fold from one generation to the next. However, this is not enough, which leads us to the conclusion that a new level of memory will be necessary, closer to the processor and therefore with lower energy consumption. That is, in a few years we will talk about fast RAM and slow RAM in our PCs, the latter possibly using the CXL interface.

Processing in Memory

The second is what we call PIM (processing in memory). These are not processors as such, but memory chips with internal processing capabilities. That is to say, they are still memories, but certain specific algorithms can run inside them. For example, imagine that we have to make several requests to a database held in RAM to find the email address of a client. With the conventional mechanism, we would need several accesses to high-consumption external memory. With PIM, instead, the RAM searches for the data itself, with very little consumption, since the data does not have to be accessed externally and only the result has to be sent to the processor.

In this way we can greatly reduce the number of transfers between the RAM and the processor and thereby cut energy consumption. The downside is that applications have to be designed for this paradigm. Even so, it is a necessary step if we want to reduce energy consumption and prevent new chips from raising the electricity bill even more.
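The email lookup example can be sketched as a toy Python model that counts how many bytes cross the bus between RAM and processor in each case. This is only an illustration: the record size, the table contents and both function names are hypothetical, no real PIM hardware or API is being modeled, and the small query sent to the memory is ignored.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

RECORD_BYTES = 64  # hypothetical size of one customer record in RAM


@dataclass
class Customer:
    name: str
    email: str


def conventional_lookup(table: List[Customer], name: str) -> Tuple[Optional[str], int]:
    """The processor scans the table, so every record it inspects
    has to travel from RAM to the processor."""
    bytes_moved = 0
    for record in table:
        bytes_moved += RECORD_BYTES
        if record.name == name:
            return record.email, bytes_moved
    return None, bytes_moved


def pim_lookup(table: List[Customer], name: str) -> Tuple[Optional[str], int]:
    """The search runs inside the memory (modeled here by an ordinary loop);
    only the matching email address is sent to the processor."""
    for record in table:
        if record.name == name:
            return record.email, len(record.email.encode("utf-8"))
    return None, 0


# Hypothetical table of 1000 customers.
table = [Customer(f"customer{i}", f"customer{i}@example.com") for i in range(1000)]

email, moved = conventional_lookup(table, "customer999")
print("conventional:", email, moved, "bytes moved")

email, moved = pim_lookup(table, "customer999")
print("PIM:", email, moved, "bytes moved")
```

Since moving bits is the expensive part, the energy saving in this toy model comes entirely from the difference in bytes moved, not from the search being any cheaper to compute.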