Developing a new CPU or GPU architecture is not easy. One might think that you simply take the design of the current generation and improve it to launch a new one, but there is much more to it than that. As we explain below, a gap of two to three years between generations is quite logical and, in fact, could even be considered short.

How is a new CPU or GPU architecture developed?

In terms of time and money, developing a new CPU or GPU architecture is no small feat. It all starts with the design team, a large group of engineers working “on paper” (not literally) to find ways to improve what they already have, both in performance and in other factors such as efficiency or density. But to work with theoretical data on paper, they first need to know the lithography process they are going to use, and for this they must work together with the wafer manufacturer. That is sometimes complicated, especially for AMD and NVIDIA, which do not have their own factories and depend on third parties.

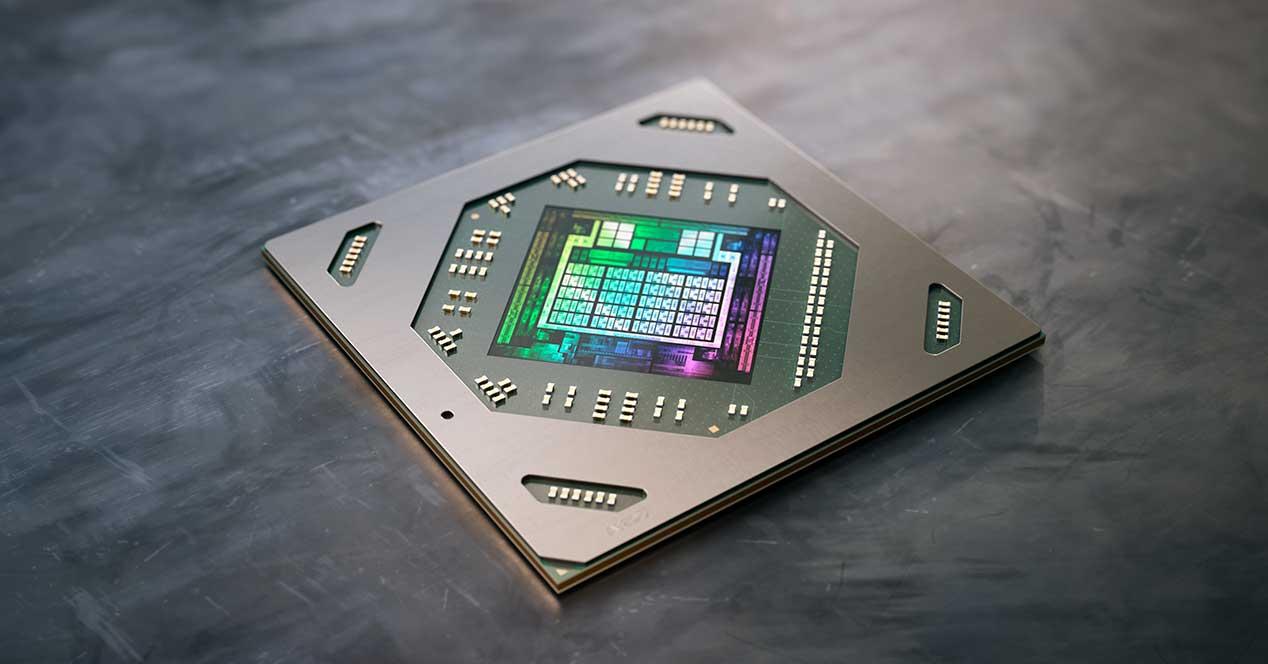

The design process, which we repeat is purely theoretical, can last from a few months to more than a year, and that is assuming the research is successful. Once they have a chip design that they consider functional, they send it to the factory to make the first functional samples of the CPU or GPU. Once they have the first chips, they must work to integrate them into a functional ecosystem. There are several steps between simply having the chip or die and integrating it into a complete CPU or GPU, with its PCB, connections, IHS, etc., and this means another period of several months of work and testing until there is a functional device. If something (or everything) goes wrong, they have to go back to the design stage to find and fix the problem.

Once they have the first functional device, it is time for tests with the so-called engineering samples: just a handful of them are manufactured, and a series of tests and validations begins to ensure that everything is correct, in a process that usually lasts approximately two to four months. This is because they obviously cannot launch a product on the market without making sure it works properly. Meanwhile, work begins on variations of the original chip so that different versions of the product can be launched (that is why, for example, we see different graphics cards based on the same chip but with different variations).

Once they are sure that the chips are fully functional and work as expected, another phase of development begins in which they try to optimize their operation. The hardware is ready, but now it needs firmware that governs how the hardware operates and how software interacts with it. This phase also lasts several months, since after each modification they must run the relevant tests to ensure that everything is fine.

Finally, once everything is tested, measured and guaranteed, the mass production phase of the device begins, a process that usually takes four to six more months until the product is ready to be launched on the market. Obviously, along the way there is also a phase of packaging, marketing, etc., which likewise requires an investment of time and money.
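As a rough sanity check, the phases described above can be added up. The following is only a minimal sketch: the two-to-four-month validation and four-to-six-month mass production figures come from the text, while the bounds chosen for "a few months to more than a year" and for each "several months" phase are assumptions made purely for illustration. It also assumes the phases run strictly one after another, with no overlap and no return to the design stage.

```python
# Phase durations in months as (name, minimum, maximum).
# Figures marked "assumed" are NOT from the article; they are illustrative guesses.
PHASES = [
    ("design", 3, 14),                        # "a few months to more than a year" (assumed bounds)
    ("first samples and integration", 3, 6),  # "several months" (assumed bounds)
    ("engineering-sample validation", 2, 4),  # stated: two to four months
    ("firmware and optimization", 3, 6),      # "several months" (assumed bounds)
    ("mass production", 4, 6),                # stated: four to six months
]


def total_range(phases):
    """Return (min_months, max_months) assuming phases run back to back."""
    shortest = sum(minimum for _, minimum, _ in phases)
    longest = sum(maximum for _, _, maximum in phases)
    return shortest, longest


if __name__ == "__main__":
    shortest, longest = total_range(PHASES)
    print(f"Estimated development time: {shortest} to {longest} months")
```

With these assumed figures the total comes to between 15 and 36 months, and a single trip back to the design stage would push it further.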

As you can imagine, this is a long process that involves a great deal of money and work, so an interval of two to three years between generations is really not much. It shows the mastery with which manufacturers such as Intel, AMD and NVIDIA work to bring products to market with ever better performance, efficiency and overall quality.