When talking about generative artificial intelligence models and chatbots such as ChatGPT, Bing, Claude, Bard and others, we usually focus on basic technical aspects of their specifications and operation, such as the maximum number of interactions per conversation, the amount of data used in their training, their speed or the input methods they accept… that is, those that are quickly measurable and that, of course, have a very direct and significant impact on our interactions with them.

There are, however, other lesser-known but equally important aspects (in some cases even more so), and the context window is one of them. Of course, to understand why the jump mentioned in the headline of this story is truly tremendous, we first need to understand a few technical concepts. But don’t worry: they’re basic and interesting, and we’ll go over them in a moment.

The first fundamental concept is the token. If you have ever used a generative model, you have quite likely seen that its output is measured in tokens, not in words, pixels, musical notes or the other units generally used to measure the type of content it is generating. Tokens are the basic units of information used to represent and process data in generative artificial intelligence models.

Tokens can be words, characters, symbols or pixels, depending on the type of data involved. Put another way, models learn from words, notes and bits, but break them down into tokens. Conversely, they always generate their output as tokens, even though the result they deliver to us is, logically, structured in a way we can read and interpret. For generative text models, it is usually estimated (although the figure varies considerably) that ten tokens correspond to roughly seven or eight words.
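To make the idea of breaking text into tokens and back a little more concrete, here is a deliberately simplified Python sketch. It is a toy under stated assumptions: the regex splitting rule and the names `tokenize`, `build_vocab`, `encode` and `detokenize` are hypothetical, and real text models typically use learned subword tokenizers (such as byte-pair encoding), where a single word can become several tokens. The point is only to show text turning into a sequence of integer IDs and then back into something readable.

```python
import re

# Toy rule (an assumption, not any real model's tokenizer):
# a token is either a run of word characters or a single punctuation mark.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize(text: str) -> list[str]:
    """Break text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign an integer ID to each distinct token, in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map tokens to the integer IDs a model would actually work with."""
    return [vocab[tok] for tok in tokens]


def detokenize(ids: list[int], vocab: dict[str, int]) -> str:
    """Turn IDs back into readable text, attaching punctuation to the previous word."""
    inverse = {i: tok for tok, i in vocab.items()}
    out = ""
    for i in ids:
        tok = inverse[i]
        if out and re.match(r"\w", tok):
            out += " "  # only word tokens get a leading space
        out += tok
    return out


text = "The model reads tokens, not words."
tokens = tokenize(text)
vocab = build_vocab(tokens)
ids = encode(tokens, vocab)
print(tokens)                   # 8 tokens: 6 words plus ',' and '.'
print(ids)                      # [0, 1, 2, 3, 4, 5, 6, 7]
print(detokenize(ids, vocab))   # The model reads tokens, not words.
```

Note that even this toy counts punctuation as tokens, which is one reason token counts and word counts diverge.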

The context window, for its part, refers to the maximum volume of information, measured in tokens, that a model can handle in a work session: a complete conversation, in the case of chatbots, or the data it accepts as input to produce an output based on it. It is important, however, not to confuse the data in the context window, which is specific to each work session, with the data used to train and validate the model, which is the figure usually quoted when talking about these models and whose volume is gigantic.
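One common way a chat application can keep a conversation inside a fixed context window is to drop the oldest messages once the token budget is exceeded. The Python sketch below illustrates that idea under loudly stated assumptions: `count_tokens` is a hypothetical stand-in that counts whitespace-separated words rather than real tokens, and `fit_to_window` is a hypothetical helper, not how Claude, ChatGPT or any specific product actually manages its window.

```python
def count_tokens(text: str) -> int:
    # Hypothetical stand-in for a real tokenizer: one "token" per whitespace-separated word.
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits in the window.

    Walks the conversation from newest to oldest and stops at the first message
    that would overflow the budget, so older context is what gets dropped.
    """
    kept: list[str] = []
    total = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if total + cost > max_tokens:
            break
        kept.append(message)
        total += cost
    kept.reverse()  # restore chronological order
    return kept


conversation = ["one two three", "four five", "six seven eight nine"]  # 3, 2 and 4 "tokens"
print(fit_to_window(conversation, 6))    # ['four five', 'six seven eight nine']
print(fit_to_window(conversation, 100))  # the whole conversation fits
```

A larger window simply raises `max_tokens`, so more of the earlier conversation survives before anything has to be discarded.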

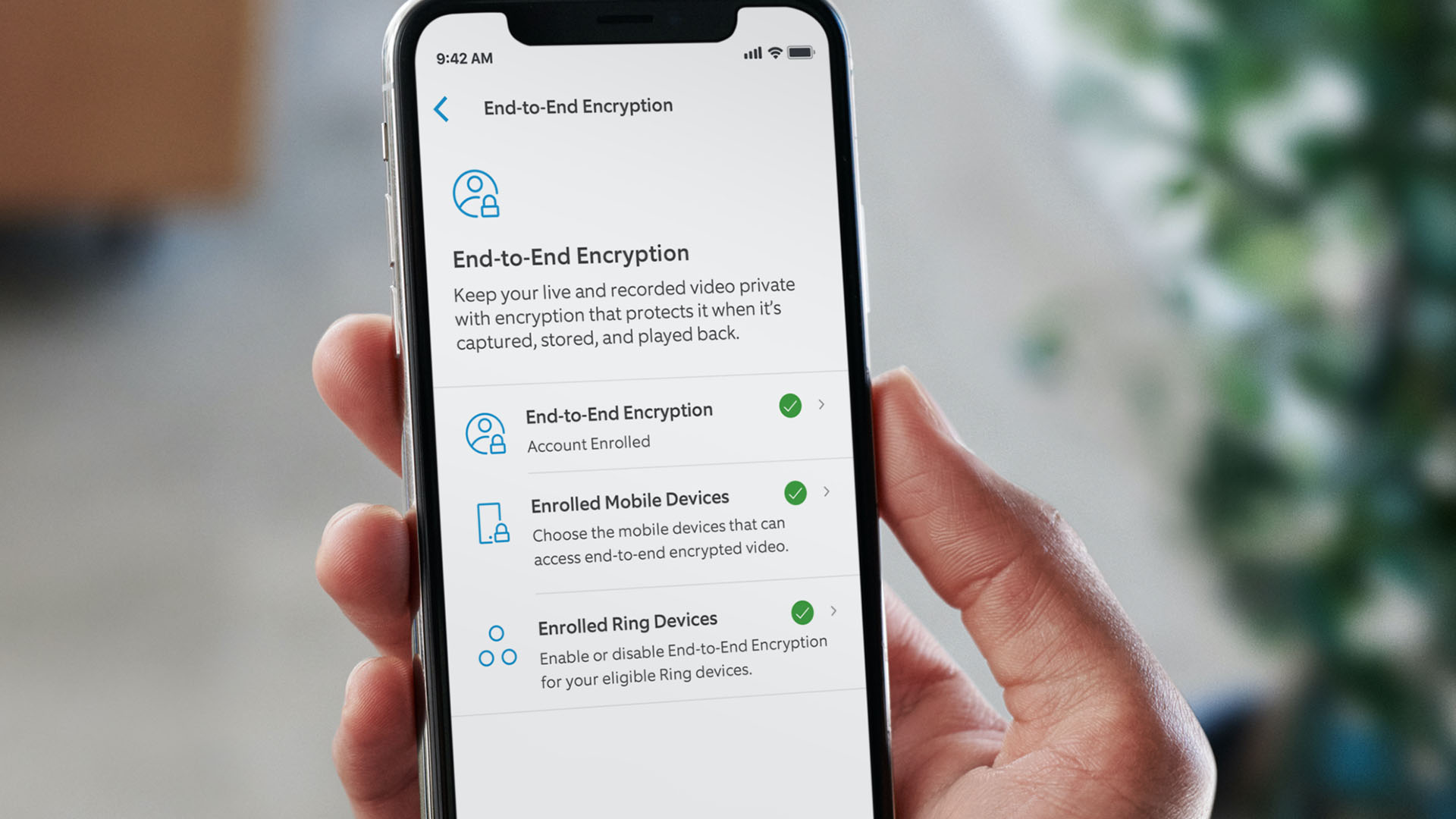

The maximum size of the context window therefore determines how much data we can give a model so that it generates an output based on that data. Another important metric, of course, is the time the model needs to ingest that data. And it is on both fronts that Claude, Anthropic’s generative model, which you can try in chatbot mode on Poe, has decided to make a statement, placing itself far above its competitors.

Specifically, as we can read in a post on its official blog, Anthropic has increased Claude’s context window from 9,000 to 100,000 tokens, which the company equates to around 75,000 words. To give a bit of context (pun intended), the standard GPT-4 model can process 8,000 tokens, while an extended version can process 32,000. ChatGPT, for its part, has a limit of around 4,000 tokens.
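To put these figures side by side, the short Python sketch below converts each context window into an approximate word count. It assumes a fixed ratio of 0.75 words per token, derived from Anthropic’s own equivalence of 100,000 tokens to about 75,000 words (and within the seven-to-eight-words-per-ten-tokens rule of thumb mentioned earlier). The names `WORDS_PER_TOKEN`, `CONTEXT_WINDOWS` and `approx_words` are illustrative, and real ratios vary with language and type of text.

```python
# Assumption: Anthropic's figure of 100,000 tokens ~ 75,000 words, i.e. 0.75 words per token.
WORDS_PER_TOKEN = 75_000 / 100_000

# Context window sizes (in tokens) as quoted in the article.
CONTEXT_WINDOWS = {
    "ChatGPT": 4_000,
    "GPT-4 (standard)": 8_000,
    "Claude (previous)": 9_000,
    "GPT-4 (extended)": 32_000,
    "Claude (new)": 100_000,
}


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough word estimate for a token budget; only as good as the assumed ratio."""
    return round(tokens * words_per_token)


for name, tokens in CONTEXT_WINDOWS.items():
    print(f"{name:<18} {tokens:>7,} tokens ~ {approx_words(tokens):>6,} words")

# Size of Claude's jump relative to its previous window.
print(f"Claude's increase: {100_000 / 9_000:.1f}x")  # 11.1x
```

Under this assumption, ChatGPT’s window works out to about 3,000 words and the new Claude window to about 75,000, a gap of 25 times.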

This increase means, for example, that Claude can ingest an entire novel in a single work session, which would make it possible to use it to review a complete draft. It could also be used to review a large selection of reports in order to generate a new one based on their information. And, for more everyday use, conversations can last much longer before the model starts to get confused by all the data they contain.
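For the reports scenario, a practical question is which documents fit into a single session. The Python sketch below is a hypothetical first-fit approach: it estimates each document’s token cost from its word count (again assuming 0.75 words per token), then adds documents in order, skipping any that would overflow a 100,000-token window. The helper names, the report names and their word counts are made up for illustration, and for simplicity the sketch reserves no room for the model’s own reply.

```python
import math

WORDS_PER_TOKEN = 0.75    # assumption: Anthropic's 100,000 tokens ~ 75,000 words ratio
CONTEXT_WINDOW = 100_000  # tokens, Claude's new window as quoted in the article


def estimate_tokens(word_count: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Round up so the estimate never understates a document's token cost."""
    return math.ceil(word_count / words_per_token)


def pack_documents(word_counts: dict[str, int], window: int = CONTEXT_WINDOW) -> tuple[list[str], int]:
    """First-fit packing: add documents in order, skipping any that would overflow the window."""
    selected: list[str] = []
    used = 0
    for name, words in word_counts.items():
        cost = estimate_tokens(words)
        if used + cost > window:
            continue  # too big for the remaining space; try the next document
        selected.append(name)
        used += cost
    return selected, used


# Hypothetical reports and their word counts.
reports = {"report_a": 30_000, "report_b": 25_000, "report_c": 40_000, "report_d": 10_000}
print(pack_documents(reports))  # (['report_a', 'report_b', 'report_d'], 86668)
```

With a 32,000-token window, the same call would only have room for `report_d`, which illustrates why the jump to 100,000 tokens matters for this kind of task.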