How does DLSS work?

In its first two versions, DLSS can add up to 3 milliseconds to the time needed to create each frame. For DLSS 3 we do not know the figure, but we assume it is lower thanks to the greater power of the RTX 40 series. In any case, DLSS needs the information from the newly rendered frame, so if we want the game to run at a specific frame rate, we must calculate the frame time, for example 16.67 ms for 60 FPS, and subtract from it the time the graphics card takes to apply DLSS.
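The budget calculation described above can be sketched in a few lines. This is only an illustration: the function names are hypothetical, and the 3 ms figure is the worst case quoted in the text, not a measured value.

```python
def frame_time_ms(target_fps: float) -> float:
    """Total time available per frame, in milliseconds."""
    return 1000.0 / target_fps


def render_budget_ms(target_fps: float, dlss_cost_ms: float) -> float:
    """Time left to render the low-resolution frame once the
    upscaling cost has been subtracted from the frame time."""
    budget = frame_time_ms(target_fps) - dlss_cost_ms
    if budget <= 0:
        raise ValueError("upscaling alone exceeds the frame time")
    return budget


# Assumed worst case from the text: 3 ms of DLSS cost at a 60 FPS target.
print(round(frame_time_ms(60), 2))          # 16.67
print(round(render_budget_ms(60, 3.0), 2))  # 13.67
```

In other words, with these assumed numbers the GPU would have roughly 13.67 ms to render the low-resolution frame if the game is to hold 60 FPS.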

Suppose we have a scene that we want to render at 4K. For this we have an unspecified GeForce RTX that reaches 25 frames per second at that resolution, so it renders each frame in 40 ms. We know that the same GPU can reach 50 frames per second at 1080p, or 20 ms per frame. Our hypothetical GeForce RTX takes about 2.5 ms to scale from 1080p to 4K, so if we turn on DLSS to get a 4K image from a 1080p one, each frame with DLSS will take 22.5 ms. With this we have managed to render the scene at about 44 frames per second, well above the 25 frames per second obtained by rendering at native resolution.
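The arithmetic of this hypothetical example can be checked with a short script. All timings are the ones given in the text for the imaginary GeForce RTX; the variable names are chosen here for illustration.

```python
def fps(frame_ms: float) -> float:
    """Convert a frame time in milliseconds to frames per second."""
    return 1000.0 / frame_ms


# Hypothetical timings taken from the example above.
native_4k_ms = 40.0      # rendering directly at 4K
render_1080p_ms = 20.0   # rendering at 1080p
upscale_ms = 2.5         # DLSS cost of scaling 1080p to 4K

dlss_frame_ms = render_1080p_ms + upscale_ms

print(dlss_frame_ms)                            # 22.5
print(round(fps(dlss_frame_ms), 1))             # 44.4
print(fps(native_4k_ms))                        # 25.0
print(round(native_4k_ms / dlss_frame_ms, 2))   # 1.78
```

With these figures DLSS yields roughly 1.78 times the native 4K frame rate.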

What happens if the graphics card does not have enough power?

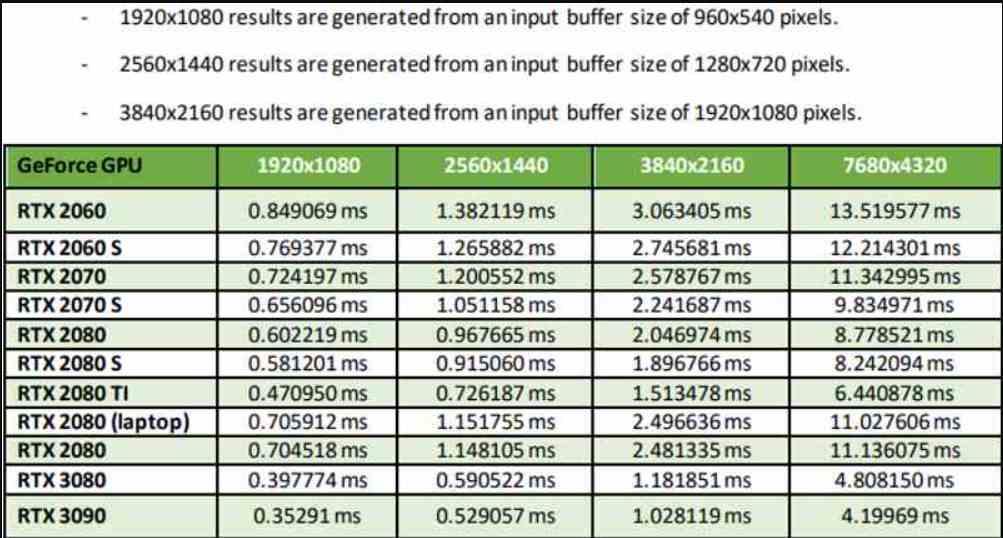

Each NVIDIA RTX model applies each DLSS variant at its own speed, depending on the source resolution and the destination resolution. The table shown here is taken from NVIDIA's own documentation; in it, the output has 4 times as many pixels as the source, which corresponds to the so-called Performance Mode.

As you can see in the table, performance varies not only with the resolution but also with the GPU in use, which should not surprise anyone after what we explained earlier. The fact that an RTX 3090 manages to scale from 1080p to 4K in less than 1 ms in Performance Mode is impressive to say the least; however, it also means that DLSS works better the more powerful the graphics card is.
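The 4x pixel increase that defines Performance Mode is easy to verify for the 1080p to 4K case. The sketch below uses the standard Full HD and 4K UHD resolutions; the helper names are illustrative only.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels in an image of the given size."""
    return width * height


def upscale_factor(source: tuple, target: tuple) -> float:
    """How many times more pixels the target has than the source."""
    return pixel_count(*target) / pixel_count(*source)


FULL_HD = (1920, 1080)
UHD_4K = (3840, 2160)

print(pixel_count(*FULL_HD))            # 2073600
print(pixel_count(*UHD_4K))             # 8294400
print(upscale_factor(FULL_HD, UHD_4K))  # 4.0
```

Each axis doubles, so three out of every four pixels in the final image are produced by DLSS rather than rendered directly.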

DLSS is not a reconstruction, but a prediction

This is important to keep in mind. DLSS is an algorithm that requires training, and therefore trial and error, because even though it generates frames very quickly, it still makes certain errors. And while the algorithm can be trained to avoid producing bad pixels in its prediction, a lack of information is fatal: the lower the resolution of the source image, the lower the quality of the final image.

The other point has to do with the geometry of the scene. All GPUs are designed so that the smallest an object can be on screen is 2×2 pixels. The consequence for DLSS? Any object smaller than that is discarded, and we have to take into account that objects get smaller and smaller with distance. This means, for example, that a 4K image rendered natively will contain additional detail that the same image produced with DLSS will lack.
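To illustrate why distant detail is lost, here is a rough sketch that estimates how many pixels tall an object appears at a given distance. It assumes a simple pinhole camera with a 60 degree vertical field of view and applies the 2 pixel minimum mentioned above; the object size, distance and function names are invented for the example.

```python
import math

MIN_PIXELS = 2.0  # minimum size from the text, per axis


def projected_pixels(object_size_m: float, distance_m: float,
                     screen_height_px: int,
                     vertical_fov_deg: float = 60.0) -> float:
    """Approximate on-screen height of an object, in pixels,
    using a pinhole camera model (an assumption of this sketch)."""
    half_fov = math.radians(vertical_fov_deg) / 2.0
    visible_height_m = 2.0 * distance_m * math.tan(half_fov)
    return object_size_m / visible_height_m * screen_height_px


def survives(object_size_m: float, distance_m: float,
             screen_height_px: int) -> bool:
    """True if the object is large enough on screen to be drawn."""
    return projected_pixels(object_size_m, distance_m,
                            screen_height_px) >= MIN_PIXELS


# A 0.5 m object seen from 300 m away.
print(round(projected_pixels(0.5, 300, 1080), 2))  # 1.56
print(round(projected_pixels(0.5, 300, 2160), 2))  # 3.12
print(survives(0.5, 300, 1080))  # False
print(survives(0.5, 300, 2160))  # True
```

Under these assumptions the object is drawn in a native 4K render but falls below the threshold in the 1080p frame that DLSS starts from, so the upscaler never receives it.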

DLSS 1.0 vs DLSS 2.0, how are they different?

Each version of DLSS builds on the previous one, so DLSS 3 is an evolution of DLSS 2, and DLSS 2 of the original version. The first DLSS was not temporal, in the sense that it did not use motion vectors for image reconstruction. Instead it used information from a single frame, hence its much worse image quality. In fact, NVIDIA's DLSS only became a differentiating factor with respect to the competition from its second version onward.

What is Optical Flow and why is it important for DLSS 2 and 3?

To understand how DLSS 3 works, we first need to know what NVIDIA means by Optical Flow. It has been in use since the RTX 20 series, although it is not a hardware element but rather a set of software libraries, which NVIDIA defines as follows:

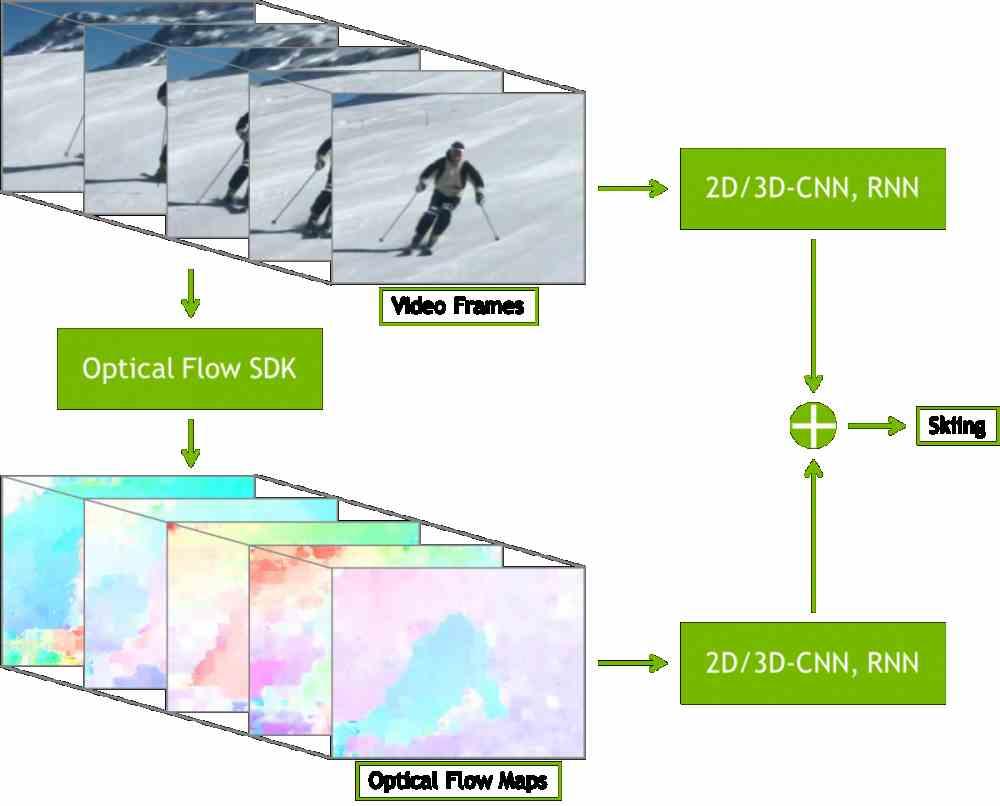

The NVIDIA Optical Flow SDK exposes the latest hardware capabilities of the Turing, Ampere, and Ada architectures dedicated to computing pixel motion between images. The hardware uses sophisticated algorithms to create high-quality vectors, which are variations from frame to frame and allow you to track the movement of objects.

This is called frame interpolation, and the green brand has been using it in various applications. It consists of assigning an identifier to each object in the image, or to each pixel depending on the level of precision, creating what we call an ID buffer. This also makes it possible to know where each object is in each frame and to predict its movement.

It generates the data as a chain of matrices, or tensors, a data format optimized for processing on the Tensor Cores of NVIDIA GPUs. Each value corresponds to a pixel of the image, and each matrix to a frame or to one of its color subcomponents. Data structures of this type are used in AI and, by their nature, require specialized units to process.
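As a rough picture of that layout, the sketch below builds a tiny tensor out of nested Python lists, indexed as frame, color channel, row and column. The ordering of the dimensions is an assumption made for illustration; real frameworks store tensors as contiguous arrays and may order the axes differently.

```python
def make_frame_tensor(frames: int, channels: int,
                      height: int, width: int, fill: float = 0.0):
    """Build a nested-list tensor indexed [frame][channel][row][column]."""
    return [[[[fill for _ in range(width)]
              for _ in range(height)]
             for _ in range(channels)]
            for _ in range(frames)]


def shape(tensor) -> tuple:
    """Return the size of each dimension of a nested-list tensor."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


# Two frames, three color channels (R, G, B), 4 rows of 8 pixels.
tensor = make_frame_tensor(2, 3, 4, 8)
tensor[1][0][2][5] = 1.0  # frame 1, red channel, row 2, column 5

print(shape(tensor))       # (2, 3, 4, 8)
print(tensor[1][0][2][5])  # 1.0
print(tensor[0][0][2][5])  # 0.0
```

Each innermost matrix is one color subcomponent of one frame, which matches the description above.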

What are its applications?

The first and clearest use is the creation of interpolated frames, which are intermediate frames placed between two existing ones.

The most famous use, commercially speaking, is the creation of motion vectors for algorithms such as Temporal Anti-Aliasing, DLSS 2 and 3, and FSR 2.0, since they use information from previous frames to reconstruct the image with greater precision.
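Both uses can be illustrated with a toy example. The sketch below is not NVIDIA's algorithm: it estimates a single global motion vector by brute-force matching (real optical flow produces a vector per pixel or per block), assumes a uniform background, and then moves the previous frame halfway along that vector to build an intermediate frame. All function names are hypothetical.

```python
def shift(frame, dx, dy, background=0):
    """Return a copy of frame moved dx pixels right and dy pixels down."""
    h, w = len(frame), len(frame[0])
    out = [[background] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = frame[y][x]
    return out


def sad(a, b):
    """Sum of absolute differences between two frames of equal size."""
    return sum(abs(pa - pb)
               for row_a, row_b in zip(a, b)
               for pa, pb in zip(row_a, row_b))


def estimate_motion(prev, curr, max_shift=3):
    """Find the (dx, dy) shift of prev that best matches curr."""
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            cost = sad(shift(prev, dx, dy), curr)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2]


def interpolate(prev, curr, max_shift=3):
    """Build an intermediate frame by moving prev half of the way."""
    dx, dy = estimate_motion(prev, curr, max_shift)
    return shift(prev, int(dx / 2), int(dy / 2))


prev = [[0, 0, 0, 0, 0, 0],
        [0, 9, 9, 0, 0, 0],
        [0, 9, 9, 0, 0, 0],
        [0, 0, 0, 0, 0, 0]]
curr = shift(prev, 2, 0)  # the bright square moves 2 pixels right

print(estimate_motion(prev, curr))  # (2, 0)
print(interpolate(prev, curr)[1])   # [0, 0, 9, 9, 0, 0]
```

The estimated vector (2, 0) is the kind of data a temporal algorithm consumes, and the halfway frame is the simplest possible interpolated frame.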

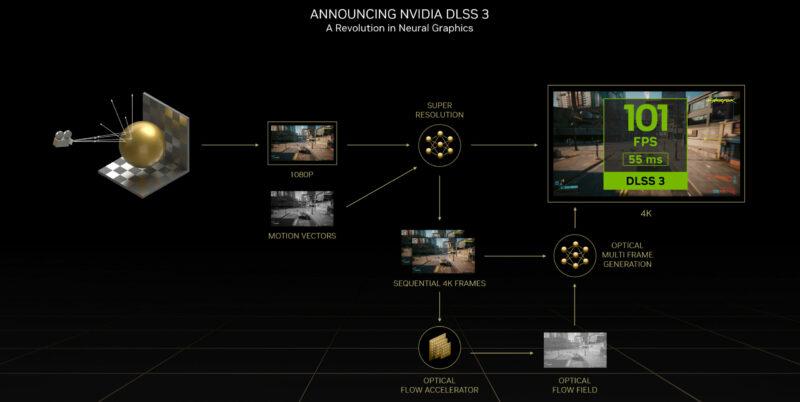

The Optical Flow Accelerator

Frame interpolation and motion vector creation can be done by algorithms running on the GPU itself. However, after years of experience in autonomous driving, and especially in computer vision, NVIDIA has been able to apply that knowledge to games.

We understand computer vision as the ability to identify and delimit objects in an image; that is, it is not about generating objects. The Optical Flow Accelerator is a piece of hardware inside the RTX 40 that automatically observes the objects on the screen, identifies them, and calculates their motion vectors and trajectory from several previous frames.

This means that in DLSS 3, where it is used, the part of the code related to temporality has been completely removed. It is also a move by NVIDIA to prevent competing algorithms, such as AMD’s FSR 2.0, from continuing to use the DLSS 2.0 libraries for their benefit. The downside is that games that use it can only enable it on NVIDIA RTX 40 cards with the Ada architecture.

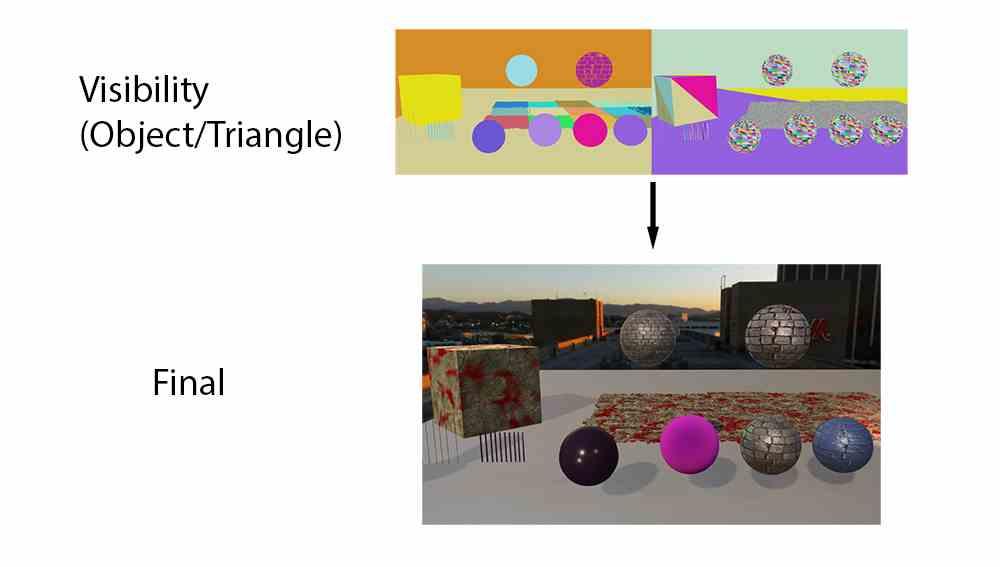

Visibility buffer

This is one of the key elements in the latest versions of Unity and Unreal Engine, the two most widely used engines for creating video games, and it is what links DLSS 3 to ray tracing, two elements that NVIDIA itself has related to each other. What the Optical Flow Accelerator does is generate it automatically, without the participation of any external element.

Frame interpolation in games

In a movie, because all of its frames already exist and are recorded, frame interpolation is easy. In a video game, each frame is new, and a great deal of computing power is needed to carry out the entire identification process quickly enough to be useful in real time without affecting gameplay.

However, there is usually a delay between what the GPU generates in the graphics card's VRAM and what is sent to our screen. What ends up happening? Often the first frame has not yet been sent to the screen when the GPU has already finished the second. This happens a lot in games that run at a high frame rate, that is, games that have to resolve each frame in a few milliseconds.

If the succession of images is very fast, our brain does not pay attention to the details, so a series of intermediate ghost frames can be inserted. NVIDIA has taken good advantage of this with DLSS 3, which uses this capability to generate new frames. Mind you, the game engine, that is, the rate at which the CPU generates the display list for each frame, does not run at the rate at which frames are displayed, since many of them have been generated automatically through interpolation.
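The gap between the engine's rate and the displayed rate can be pictured with a small sketch. It assumes one generated frame is inserted between every pair of rendered frames, which is an assumption of this example rather than something stated above, and it uses labels instead of real images.

```python
def interleave_generated(rendered):
    """Insert one generated frame between each pair of rendered frames.

    'rendered' is a list of frame labels produced by the game engine;
    generated frames are labeled with the pair they sit between.
    """
    shown = []
    for before, after in zip(rendered, rendered[1:]):
        shown.append(before)
        shown.append(f"gen({before},{after})")
    if rendered:
        shown.append(rendered[-1])
    return shown


rendered = ["R0", "R1", "R2", "R3"]
shown = interleave_generated(rendered)

print(shown)
# ['R0', 'gen(R0,R1)', 'R1', 'gen(R1,R2)', 'R2', 'gen(R2,R3)', 'R3']
print(len(rendered), len(shown))  # 4 7
```

The engine only simulated four frames, yet seven reach the screen, so the displayed frame rate approaches double the rate at which the game logic actually runs.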

What games support DLSS 3?

At the moment there are more than 35 titles, although some of the entries are not games but graphics engines, such as Unity and Unreal Engine. The current list of compatible titles is as follows:

- A Plague Tale: Requiem

- Atomic Heart

- Black Myth: Wukong

- Bright Memory: Infinite

- Chernobylite

- Conqueror’s Blade

- Cyberpunk 2077

- Dakar Desert Rally

- Deliver Us Mars

- Destroy All Humans! 2 – Reprobed

- Dying Light 2 Stay Human

- F1 22

- F.I.S.T.: Forged In Shadow Torch

- Frostbite Engine

- Hitman 3

- Hogwarts Legacy

- Icarus

- Jurassic World Evolution 2

- Justice

- Loopmancer

- Marauders

- Microsoft Flight Simulator

- Midnight Ghost Hunt

- Mount & Blade II: Bannerlord

- Naraka: Bladepoint

- NVIDIA Omniverse

- NVIDIA Racer RTX

- Perish

- Portal with RTX

- Ripout

- S.T.A.L.K.E.R. 2: Heart of Chornobyl

- Scathe

- Sword and Fairy 7

- Synced

- The Lord of the Rings: Gollum

- The Witcher 3: Wild Hunt

- Throne and Liberty

- Tower of Fantasy

- Unity

- Unreal Engine 4 & 5

- Warhammer 40,000: Darktide

We will add titles to the list of DLSS 3 compatible games as they are announced by their developers and by NVIDIA itself.