It’s been a few weeks since Apple introduced Apple Vision Pro, its revolutionary augmented/mixed reality headset which, in full isolation mode, can also be thought of as a virtual reality headset. If we stick to the company’s own definition, though, it is much more than that: Apple calls it the world’s first spatial computer. The label makes sense, since unlike many other headsets it does not need to be connected to another device to work.

Apple showed us some very interesting aspects of the device. But, as always in these cases, many questions about it remain. And, as is also common, rumors, leaks and other sources of information will answer them before the device reaches the market. According to the presentation, that will happen early next year, initially only in the USA.

A very interesting source of information about Apple Vision Pro will be its SDK, the software development kit for the headset. Cupertino has already published it so that developers who want to can start building apps specifically for the headset and adapt the apps they already offer on other Apple devices. Tools like these reveal a great deal about the devices they are designed for.

Now that the Apple Vision Pro SDK is available to interested developers, these are some of the most interesting details we have found:

- Travel mode: We already got a hint of this during the presentation, and the SDK has now confirmed it. Apple’s spatial computer will have a travel mode, which users will need to turn on when riding in a vehicle. Its main purpose is to let the system compensate for the effects of the vehicle’s motion on the Apple Vision Pro sensors. The SDK also indicates that some functions will be limited while this mode is active.

- Spatial limitation: Apple has decided that when the headset is used in VR mode, fully isolated from the surroundings, a three-by-three-meter area will be set up around the user. When the user approaches the edge of that area, the headset will start to show the outside world. This is not stated explicitly, but the purpose of the limitation is presumably to prevent accidents.

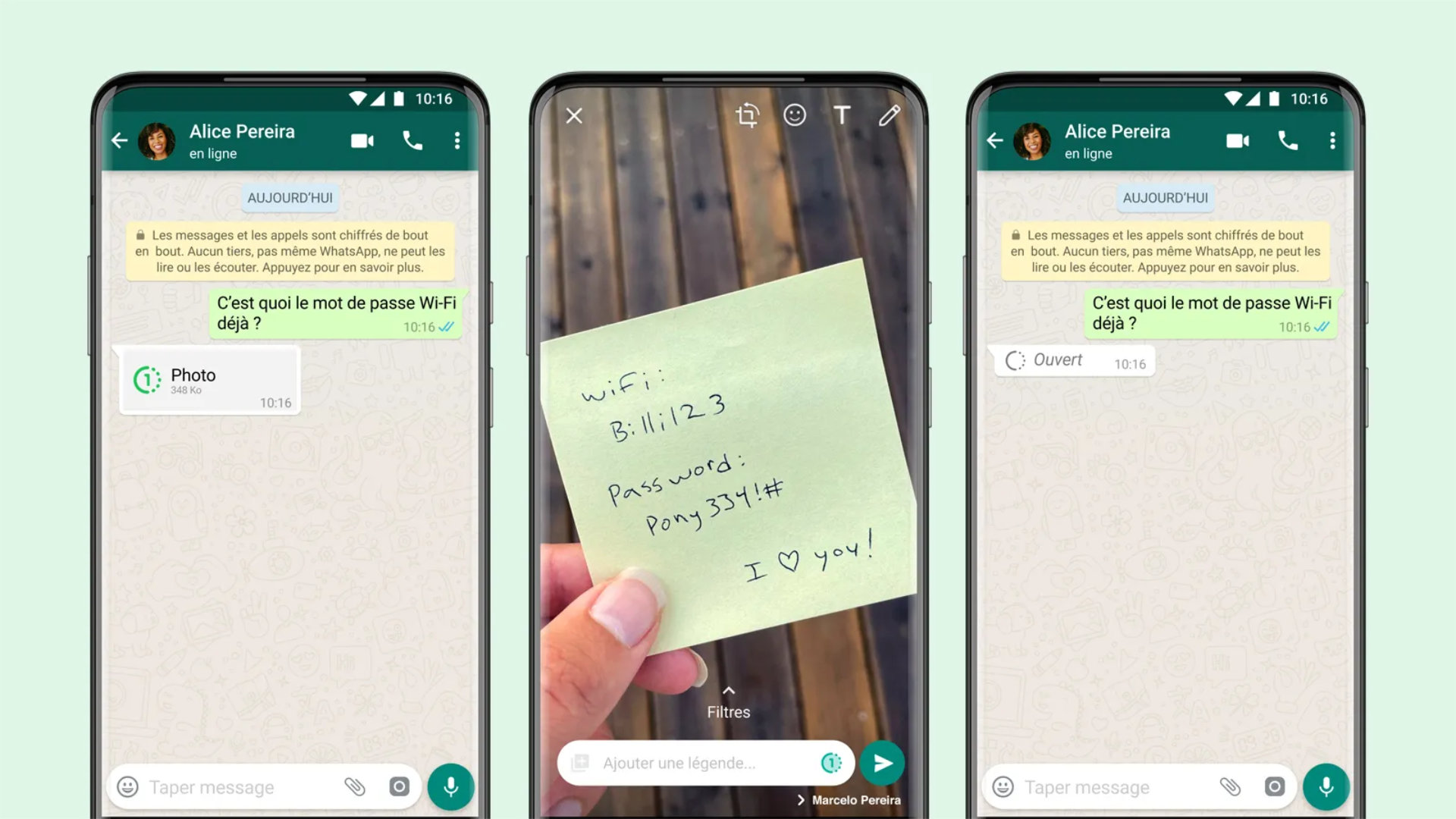

- Visual search: Using its external cameras and sensors, Apple Vision Pro can scan its surroundings and help us identify objects and read text in them. This enables features such as live translation, copying text from the real world into a document, and opening printed links, among others.