When designing a processor, be it a CPU or a more specialized one such as a GPU, a key part is the memory controller, which is designed according to the specifications published by JEDEC. However, these specifications define ranges rather than fixed values. For graphics cards, this means that parameters such as the capacity of each memory chip and the bandwidth are not settled during the design phase. This information will not be known until the memory vendor contacts NVIDIA or AMD during final card assembly.

It is impossible to predict technical specifications years in advance

Hence, it is not possible to know quantitatively what the generational leap of a graphics card will be while it is still in the design phase. If someone tells you, for example, that the RTX 5090 is going to have 48 GB of GDDR7, ignore it, since the capacity of each chip has not yet been decided. The same goes for claims that it will be 2.6 times more powerful. Such speculation ignores the fact that memory chips are usually delivered only a few months before the graphics card launches.

1 GB GDDR6 should never have existed

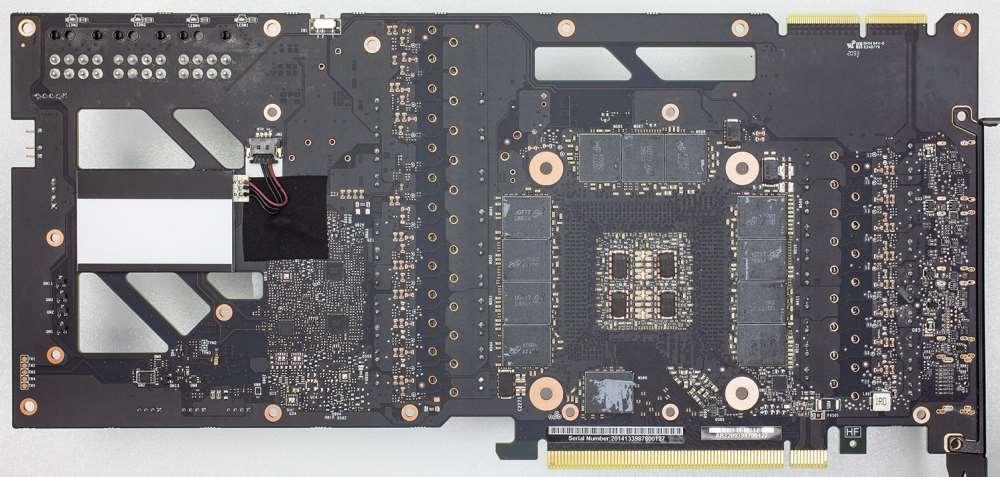

And here we come to the most important part of the story: the VRAM conspiracy. To understand it, we have to go back to 2013, when SONY decided to launch the PS4 with 8 GB of GDDR5 memory instead of 4 GB. The problem was that no chips larger than 0.5 GB existed, and the number of chips was limited by the bus. The best solution? Use clamshell mode, in which two memory chips, one on the front and one on the back of the board, share the same interface. Bandwidth does not increase, but the amount of addressable memory does. The drawback? The cost of having to fit twice as many memory chips.
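The capacity arithmetic behind clamshell mode can be sketched in a few lines of Python. This is a simplified model, not a description of SONY's actual board design: it assumes each chip normally exposes a 32-bit interface, a 256-bit bus, and an illustrative per-pin data rate of 5.5 Gbps. The names `MemoryConfig` and `CHANNEL_WIDTH_BITS` are hypothetical.

```python
from dataclasses import dataclass

# Assumption: each GDDR5/GDDR6 chip normally exposes a 32-bit interface.
CHANNEL_WIDTH_BITS = 32


@dataclass(frozen=True)
class MemoryConfig:
    bus_width_bits: int          # total width of the memory bus
    chip_density_gb: float       # capacity of one memory chip, in GB
    clamshell: bool = False      # two chips (front and back) share one channel
    data_rate_gbps: float = 5.5  # illustrative per-pin data rate (assumption)

    @property
    def channels(self) -> int:
        return self.bus_width_bits // CHANNEL_WIDTH_BITS

    @property
    def chip_count(self) -> int:
        # In clamshell mode each chip runs at half width (x16), so every
        # 32-bit channel carries two chips instead of one.
        return self.channels * (2 if self.clamshell else 1)

    @property
    def capacity_gb(self) -> float:
        return self.chip_count * self.chip_density_gb

    @property
    def bandwidth_gbs(self) -> float:
        # Bandwidth depends only on bus width and data rate; adding chips
        # in clamshell mode does not change it.
        return self.bus_width_bits * self.data_rate_gbps / 8


configs = {
    "0.5 GB chips, normal": MemoryConfig(256, 0.5),
    "0.5 GB chips, clamshell": MemoryConfig(256, 0.5, clamshell=True),
    "1 GB chips, normal": MemoryConfig(256, 1.0),
}

for name, cfg in configs.items():
    print(f"{name}: {cfg.chip_count} chips, {cfg.capacity_gb:g} GB, {cfg.bandwidth_gbs:g} GB/s")
```

Under these assumptions, clamshell mode doubles capacity from 4 GB to 8 GB by doubling the chip count from 8 to 16, while bandwidth stays at 176 GB/s. With 1 GB chips, the same 8 GB fits in only 8 chips without clamshell mode.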

In 2016, slimmer console revisions such as the PS4 Slim appear: 1 GB chips halve the number of memory chips, clamshell mode is no longer necessary and costs drop. The big problem? It comes in 2018 when, instead of making the leap to 2 GB memory chips, which would have been the natural step, we still have to settle for 1 GB. Worse still, Micron, NVIDIA's partner for GDDR6X in the RTX 30 series, unilaterally decides to stay with a density of 1 GB.

Think about it: four years with the same memory density per chip is not normal. One could argue: “well, GDDR6X was exclusive to NVIDIA, so they may have agreed on that capacity.” This is where things get murkier.

What is the VRAM conspiracy?

The console contract is extremely lucrative, even more so for a system whose predecessor sold over 100 million units, which guarantees a steady flow of money. SONY's condition for manufacturing GDDR6 memory for the PS5? 2 GB of capacity per chip, which left the inventory completely empty for NVIDIA. However, one of NVIDIA's strategies in recent generations has been to seek out exclusive manufacturing nodes and technologies to guarantee full availability. For example, it scrapped TSMC's 7 nm node at the last minute in favor of Samsung so it would not have to compete for TSMC's manufacturing capacity, and it went with GDDR6X for the same reason.

And here we reach an interesting point: this memory was designed to work with the GA102 chip, and at first not with the GA104 used in the RTX 3070 and RTX 3060 Ti. However, NVIDIA knew that faster video memory would give it a boost in benchmarks, and in the process it ended up sacrificing the 16 GB versions as well as the 20 GB version of the RTX 3080. That is one part of the story; the other is that its partner decided not to make 2 GB chips of this GDDR6 variant during the last generation.
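The capacities at stake follow from simple arithmetic: total VRAM is the number of chips on the bus times the capacity of each chip. The sketch below is a simplified model that assumes one 32-bit channel per chip and uses the bus widths the RTX 30 cards shipped with. `vram_gb` is a hypothetical helper, and the model ignores everything except chip count and density.

```python
# Assumption: one 32-bit channel per GDDR6/GDDR6X chip in normal mode.
CHANNEL_WIDTH_BITS = 32


def vram_gb(bus_width_bits: int, chip_density_gb: int, clamshell: bool = False) -> int:
    """Total VRAM = number of chips on the bus x capacity per chip."""
    chips = bus_width_bits // CHANNEL_WIDTH_BITS
    if clamshell:
        chips *= 2  # two half-width (x16) chips share each 32-bit channel
    return chips * chip_density_gb


# (card, memory bus width in bits)
cards = [("RTX 3060", 192), ("RTX 3060 Ti", 256), ("RTX 3070", 256), ("RTX 3080", 320)]

for name, bus in cards:
    print(f"{name}: {vram_gb(bus, 1)} GB with 1 GB chips, {vram_gb(bus, 2)} GB with 2 GB chips")

# The 384-bit RTX 3090 reached 24 GB with 1 GB chips only by using clamshell mode.
print(f"RTX 3090: {vram_gb(384, 1, clamshell=True)} GB with 1 GB chips in clamshell mode")
```

Under these assumptions, 1 GB chips cap the 256-bit RTX 3070 and RTX 3060 Ti at 8 GB and the 320-bit RTX 3080 at 10 GB, while 2 GB chips would yield 16 GB and 20 GB. The narrower 192-bit RTX 3060 reaches 12 GB precisely because it uses 2 GB GDDR6 chips.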

The objective? To create planned obsolescence so that users would jump to the next generation when the time came, as has indeed happened. The question here is: if Micron had made 2 GB GDDR6X chips available, would NVIDIA have used them? We believe so, since it makes no sense for the lower-midrange to have more VRAM than the upper-midrange.

What have been the consequences of these decisions?

For the PC gamers out there: if you have an 8 GB RTX 3070, or even worse, an RTX 3060 Ti, you are much worse off than RTX 3060 users with 12 GB because of the lack of VRAM. The consequences? Much lower frame rates in certain parts of games, textures that pop in abruptly and, in some cases, even having to lower the overall quality settings, which wastes much of the GPU's capability. It is a real shame.

That is not the only problem: if we want to use the video encoding capabilities, which have been improved in the latest driver revisions, we are going to be limited by memory. Honestly, one would expect NVIDIA not to want to hand part of its market to its main rival over a limitation such as video memory. Although planned obsolescence is necessary in every industry to keep consumption going, some decisions are so absurd that they amount to self-sabotage.

Conclusion: yes, there has been a VRAM conspiracy

To put it simply, NVIDIA has no interest in games running better on its competitor's cards than on its own, and even less so over something it cannot control and whose solution means higher costs and, therefore, much lower margins. Hence, we think there has been a VRAM conspiracy in which, basically, the availability of 2 GB GDDR6X chips was limited for an entire generation to create blatant planned obsolescence.

This is a shame, as the move does real damage to the PC gaming hardware market, but it makes no difference to the memory makers, since they also sell their chips for consoles. What's more, and we think we can all agree on this, 1 GB GDDR6 chips should never have existed under any scenario, not even almost five years ago.