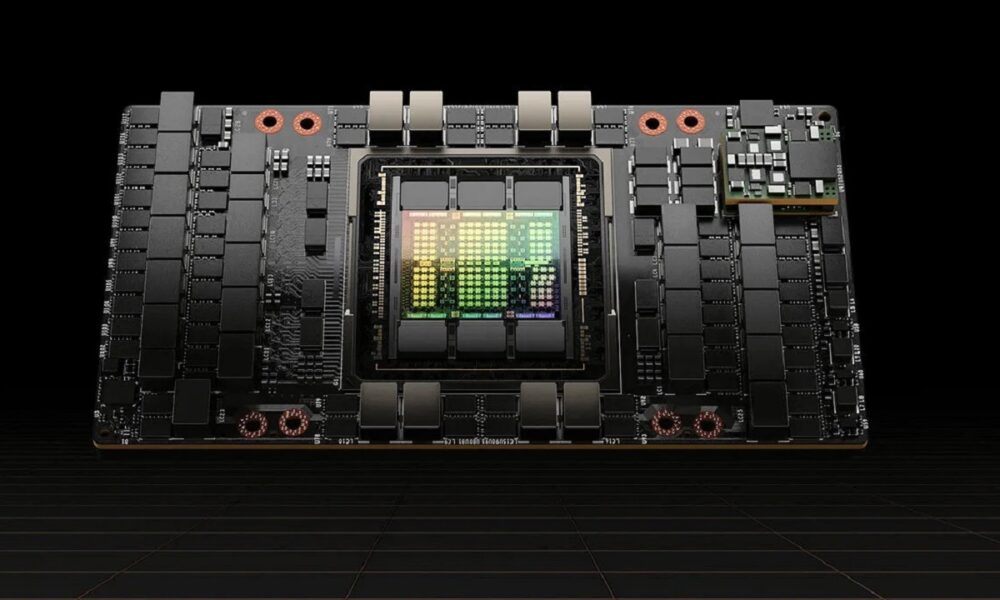

Six months have passed since NVIDIA presented Hopper, the next-generation architecture that the green giant uses to power its graphics accelerators. As we reported at the time, Hopper triples the raw performance of the previous generation while keeping power consumption well balanced, which translates into high efficiency (performance per watt).

In that presentation we were able to confirm that the SXM version of the NVIDIA H100 was configured with 80 GB of HBM3 memory, and that its specifications were simply impressive: this GPU has 16,896 shaders (CUDA cores), 528 tensor cores, a 5,120-bit memory bus and a total of 80 billion transistors.

NVIDIA also developed a PCIe version that kept the key specifications of the SXM model but cut the TDP from 700 watts to 350 watts. To achieve this substantial reduction, the green team had to trim the number of CUDA cores, which dropped to 14,592 shaders, and the number of tensor cores, which dropped to 456. The rest of the specifications were unchanged, including the 80 GB of memory, although it was HBM2E rather than HBM3.

We have now learned that NVIDIA is preparing a new version of the H100 PCIe with a whopping 120 GB of HBM2E memory. It uses the same memory type as the 80 GB PCIe version, but NVIDIA apparently will keep the shader count of the SXM version (16,896 shaders). If this is confirmed, the performance gap between this 120 GB model and the 80 GB model would be quite large, since the two would differ not only in memory capacity but also in shader count.
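The figures quoted above make these trade-offs easy to quantify. The short Python sketch that follows is only a back-of-the-envelope check: it uses the specifications as reported in this article, the dictionary names are illustrative, and the 120 GB entry assumes the rumored SXM shader count, which NVIDIA has not confirmed.

```python
# Specifications as quoted in this article; the 120 GB figures are a rumor, not confirmed.
H100_SXM = {"shaders": 16_896, "tensor_cores": 528, "memory_gb": 80, "tdp_w": 700}
H100_PCIE_80GB = {"shaders": 14_592, "tensor_cores": 456, "memory_gb": 80, "tdp_w": 350}
# Hypothetical entry: assumes the rumored card keeps the SXM shader count.
H100_PCIE_120GB = {"shaders": 16_896, "memory_gb": 120}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new, rounded to one decimal place."""
    return round((new - old) / old * 100, 1)


# SXM -> PCIe 80 GB: what was cut to halve the TDP.
shader_cut = pct_change(H100_SXM["shaders"], H100_PCIE_80GB["shaders"])
tensor_cut = pct_change(H100_SXM["tensor_cores"], H100_PCIE_80GB["tensor_cores"])
tdp_cut = pct_change(H100_SXM["tdp_w"], H100_PCIE_80GB["tdp_w"])

# PCIe 80 GB -> rumored PCIe 120 GB: what would be gained.
shader_gain = pct_change(H100_PCIE_80GB["shaders"], H100_PCIE_120GB["shaders"])
memory_gain = pct_change(H100_PCIE_80GB["memory_gb"], H100_PCIE_120GB["memory_gb"])

if __name__ == "__main__":
    print(f"SXM -> PCIe 80 GB: shaders {shader_cut}%, tensor cores {tensor_cut}%, TDP {tdp_cut}%")
    print(f"PCIe 80 GB -> 120 GB (rumored): shaders +{shader_gain}%, memory +{memory_gain}%")
```

Running it shows that the PCIe model gives up about 13.6% of both its shaders and its tensor cores in exchange for half the TDP, while the rumored 120 GB card would offer roughly 15.8% more shaders and 50% more memory than the 80 GB PCIe version.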

We have no information about the TDP of this version, but everything seems to indicate that it will be set at 450 watts, an intermediate level between the 700-watt NVIDIA H100 SXM and the 350-watt PCIe version. The launch date has not been disclosed, but the card will most likely arrive at the end of this year.