One of the key technologies is noise elimination (denoising) algorithms. The NVIDIA OptiX Denoiser is one such algorithm designed to solve this problem, but first we need some background.

Why is the OptiX Denoiser necessary?

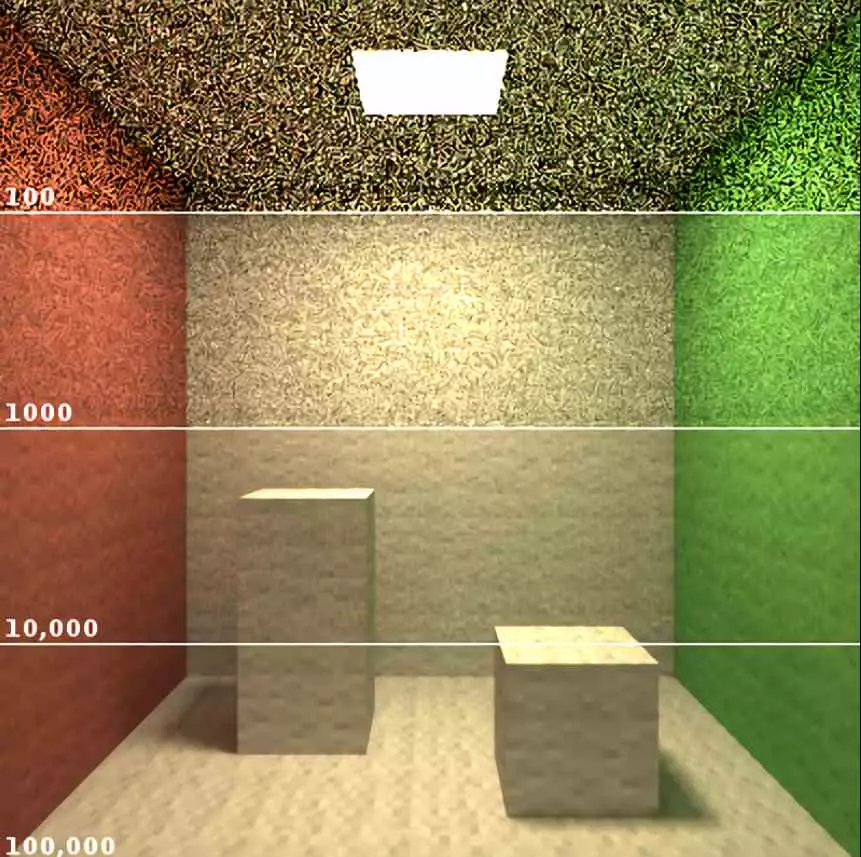

To understand the problem of noise, consider an example. Imagine there is a huge fronton wall in front of you that you have to paint. You are told that you must do it by hitting paint-filled tennis balls against the wall with a racket. How long will it take to paint the entire wall? Most likely a very long time. Ray Tracing has the same problem: although at first glance the algorithm casts one ray per pixel, which then bounces several times through the scene, in practice the result looks like the image above.

This means that the system needs to cast a large number of rays per pixel to obtain a sharper, less noisy image, but every additional ray demands more computing power. As a result, getting a clean version of the image becomes a titanic effort for the GPU, or whatever hardware is rendering the scene at that moment.
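Why extra rays buy so little can be seen with a toy Monte Carlo model: the noise (standard deviation) of a pixel estimate built from N samples per pixel falls only as 1/√N, so halving the noise takes four times as many rays. The sketch below assumes a made-up pixel in which each ray reaches the light with probability 0.3; `render_pixel` and `pixel_noise` are hypothetical helpers, not part of any real renderer.

```python
import random
import statistics

def render_pixel(samples: int, rng: random.Random) -> float:
    """Estimate one pixel's brightness by averaging `samples` random rays.

    Hypothetical toy model: each ray returns 1.0 (it reached the light) with
    probability 0.3 and 0.0 otherwise, so the true pixel value is 0.3.
    """
    hits = sum(1.0 if rng.random() < 0.3 else 0.0 for _ in range(samples))
    return hits / samples

def pixel_noise(samples: int, trials: int = 2000, seed: int = 1) -> float:
    """Noise = standard deviation of the pixel estimate over many renders."""
    rng = random.Random(seed)
    estimates = [render_pixel(samples, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)

for spp in (1, 4, 16, 64, 256):
    print(f"{spp:4d} samples per pixel -> noise {pixel_noise(spp):.4f}")
```

Each fourfold increase in samples roughly halves the printed noise, which is why brute-forcing a clean image is so expensive.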

For audiovisual studios that create extremely complex scenes with Ray Tracing, this can add up to a great deal of time, and for fully computer-generated films it can take weeks to render the entire film in a data center. And, as the saying goes, time is money.

What is a Denoiser or Noise Removal Algorithm?

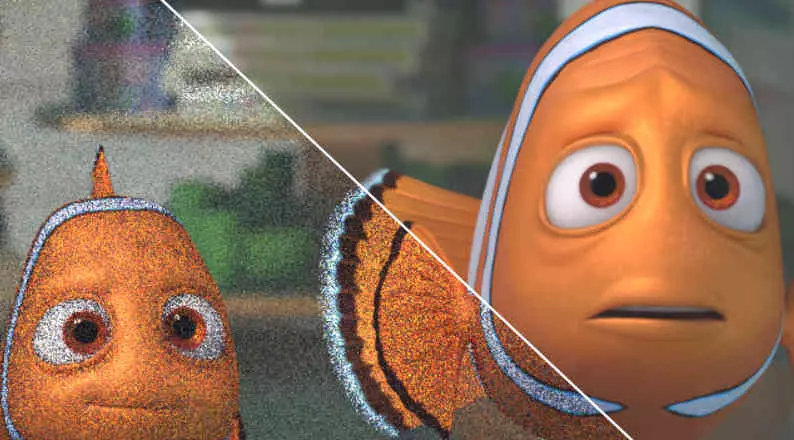

To save money and offline rendering time on their films, Disney engineers (Disney, let us remember, owns Pixar) developed a noise reduction algorithm based on a neural network, and therefore on Deep Learning. The algorithm can generate an image with the quality of 1024 samples from very few samples, 32 on average. Keep in mind that the number of samples equals the number of rays cast per pixel, so this is a huge cost saving: it lets them render scenes up to 32 times faster.
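As a quick check of those figures, assuming render time grows linearly with the number of samples per pixel (spp) and ignoring the cost of running the denoiser itself:

```latex
\text{speed-up} \approx \frac{t(1024\ \text{spp})}{t(32\ \text{spp})} = \frac{1024}{32} = 32
```

In practice the denoiser's own inference time reduces this figure somewhat, which is why it is an upper bound ("up to 32 times").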

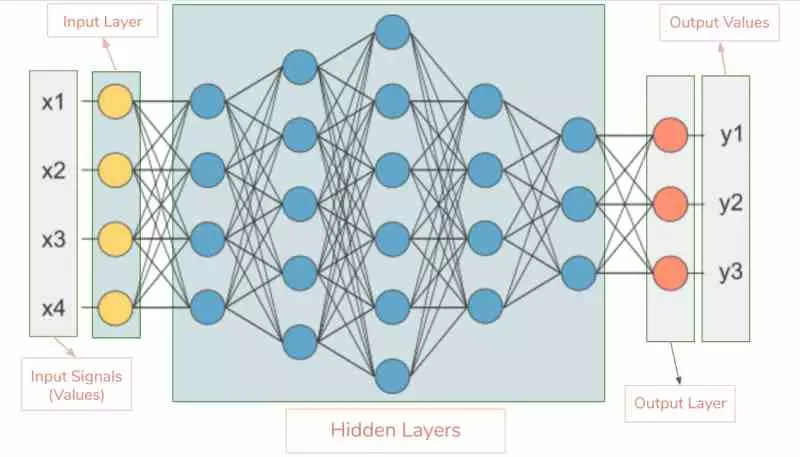

Deep learning algorithms are often used either to classify things or to predict values, and a noise reduction algorithm belongs to the second type. How does it work? From a set of training data, the neural network builds a mathematical function that predicts the correct value of the noisy pixels, whose values cannot be trusted, in a scene with a large amount of noise.

The whole process requires training, so the neural network must know where it is wrong. That is why it has to be trained with a large amount of data, which allows it to find a pattern that it represents as a mathematical function. At the same time, the same image is rendered at full quality and compared with the image predicted by the neural network, which learns from its errors and adjusts the mathematical function to produce increasingly accurate results.
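The training loop described above can be sketched with a deliberately tiny stand-in for the neural network: a single linear 3-tap filter over one row of pixels, trained by gradient descent on the mean squared error against a reference render. Production denoisers use deep networks and many extra inputs; the helper names, the synthetic sine-wave "image" and the noise level below are all hypothetical.

```python
import math
import random

def make_pair(n, noise, rng):
    """Hypothetical training pair: a smooth reference row of pixels (standing in for
    the high-sample render) and a noisy copy (standing in for the low-sample render)."""
    reference = [0.5 + 0.3 * math.sin(i / 8) for i in range(n)]
    noisy = [r + rng.gauss(0.0, noise) for r in reference]
    return reference, noisy

def neighbourhood(noisy, i):
    """The 3 pixels around position i, clamping at the image edges."""
    last = len(noisy) - 1
    return (noisy[max(i - 1, 0)], noisy[i], noisy[min(i + 1, last)])

def predict(weights, noisy, i):
    """The 'network': a single linear 3-tap filter predicting the clean pixel i."""
    return sum(w * x for w, x in zip(weights, neighbourhood(noisy, i)))

def mse(weights, reference, noisy):
    """Mean squared error between the prediction and the reference image."""
    n = len(noisy)
    return sum((predict(weights, noisy, i) - reference[i]) ** 2 for i in range(n)) / n

def train(reference, noisy, epochs=200, lr=0.5):
    """Gradient descent on the MSE: compare with the reference, adjust the weights."""
    weights = [0.0, 1.0, 0.0]  # start as the identity filter: no denoising at all
    n = len(noisy)
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for i in range(n):
            taps = neighbourhood(noisy, i)
            error = predict(weights, noisy, i) - reference[i]  # the network's mistake
            for k in range(3):
                grad[k] += 2.0 * error * taps[k] / n
        weights = [w - lr * g for w, g in zip(weights, grad)]  # learn from the error
    return weights

rng = random.Random(42)
reference, noisy = make_pair(200, 0.2, rng)
weights = train(reference, noisy)
print("MSE before training:", round(mse([0.0, 1.0, 0.0], reference, noisy), 4))
print("MSE after training: ", round(mse(weights, reference, noisy), 4))
```

The learned weights end up close to an even average of the three neighbours, which is the best a linear 3-tap filter can do on independent noise; a deep network learns far richer, edge-aware functions in the same way.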

Little by little, the neural network's output gets ever closer to the reference images. The end goal is that, given a noisy image rendered with Ray Tracing, the neural network can produce a clean image with no noise of any kind. This has a lower computational cost than rendering the scene with hundreds or even thousands of samples per pixel.

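What is NVIDIA OptiX technology?

NVIDIA OptiX is NVIDIA's ray tracing engine, built on CUDA, which divides the ray tracing process into several stages:

- Ray generation

- Material shading

- Object intersection

- Scene traversal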

The last two stages can be handled by the dedicated intersection units known as RT Cores, or by plain code in the form of Compute Shaders. In fact, in this case the entire rendering engine is programmed with CUDA, which allows the creation of offline rendering engines that use different variants of Ray Tracing.
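To make the four stages concrete, here is a toy CPU ray tracer that keeps each one in its own function. It is not OptiX code: OptiX programs are written in CUDA and its real entry points differ. The function names, the single-sphere scene and the simple Lambertian shading are assumptions made only for illustration, and the linear loop in `traverse` stands in for the acceleration-structure (BVH) traversal that RT Cores perform in hardware.

```python
import math
from dataclasses import dataclass

@dataclass
class Sphere:
    center: tuple
    radius: float
    albedo: float

LIGHT_DIR = tuple(c / math.sqrt(3.0) for c in (1.0, 1.0, 1.0))  # assumed light direction

def ray_generation(x, y, width, height):
    """Ray generation: a pinhole camera at the origin looking down -z."""
    u = (x + 0.5) / width * 2.0 - 1.0
    v = 1.0 - (y + 0.5) / height * 2.0
    norm = math.sqrt(u * u + v * v + 1.0)
    return (0.0, 0.0, 0.0), (u / norm, v / norm, -1.0 / norm)

def intersect_sphere(origin, direction, sphere):
    """Object intersection: nearest hit distance t in front of the ray, or None."""
    oc = tuple(o - c for o, c in zip(origin, sphere.center))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - sphere.radius ** 2
    disc = b * b - c  # direction is unit length, so the quadratic's a = 1
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-6 else None

def traverse(origin, direction, scene):
    """Scene traversal: test every object and keep the closest hit.
    (Real engines walk a BVH instead; that is what RT Cores accelerate.)"""
    closest = None
    for sphere in scene:
        t = intersect_sphere(origin, direction, sphere)
        if t is not None and (closest is None or t < closest[0]):
            closest = (t, sphere)
    return closest

def shade(origin, direction, hit):
    """Material shading: Lambertian diffuse lighting, black background on a miss."""
    if hit is None:
        return 0.0
    t, sphere = hit
    point = tuple(o + t * d for o, d in zip(origin, direction))
    normal = tuple((p - c) / sphere.radius for p, c in zip(point, sphere.center))
    return sphere.albedo * max(0.0, sum(n * l for n, l in zip(normal, LIGHT_DIR)))

def render(width, height, scene):
    """Run the four stages for every pixel and return rows of brightness values."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            origin, direction = ray_generation(x, y, width, height)
            row.append(shade(origin, direction, traverse(origin, direction, scene)))
        image.append(row)
    return image

scene = [Sphere(center=(0.0, 0.0, -3.0), radius=1.0, albedo=0.8)]
for row in render(8, 8, scene):
    print(" ".join("#" if v > 0.3 else "+" if v > 0.0 else "." for v in row))
```

With one ray per pixel and no randomness this toy image is noise-free; noise appears once shading fires random bounce rays, as in the path tracing discussed earlier.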

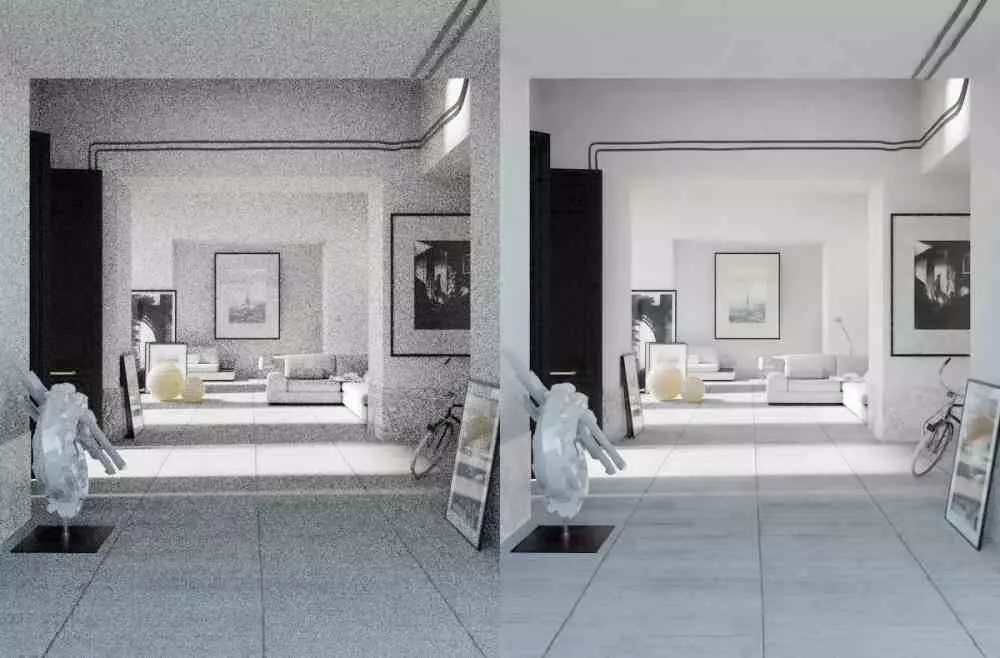

Given NVIDIA's foray into the world of artificial intelligence, it is not surprising that it has implemented technologies such as the NVIDIA OptiX AI Denoiser, which is based on the same principle we discussed earlier with the example of Pixar's noise elimination algorithm.

The NVIDIA OptiX Denoiser

Now that we have all the necessary pieces, we can deduce what the OptiX AI Denoiser is. NVIDIA began deploying it with its Volta architecture, the first to include what NVIDIA calls Tensor Cores. These units are key to accelerating deep learning algorithms, and noise elimination algorithms, known as denoisers, are one such case.

The current version has not been designed to process a single frame but a sequence of them, since it uses information from previous frames to make the noise removal function more accurate. Keep in mind that the more information the AI has to work with, the easier it is to predict the values it has to guess and the more accurate those predictions are.
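Why previous frames help can be illustrated with the simplest temporal technique, an exponential moving average that blends each new noisy frame into a running history. This is not the OptiX algorithm, which feeds previous-frame information into a trained network, and a real renderer would also need motion vectors to handle moving content. It is a sketch assuming a static scene and camera, with hypothetical names and a synthetic gradient "image".

```python
import random

def accumulate(frames, alpha=0.2):
    """Blend each new frame into a running history (exponential moving average).
    Assumes a static scene and camera, so pixels line up from frame to frame."""
    history = list(frames[0])
    outputs = [list(history)]
    for frame in frames[1:]:
        history = [alpha * new + (1.0 - alpha) * old for new, old in zip(frame, history)]
        outputs.append(list(history))
    return outputs

def mse(a, b):
    """Mean squared error between two rows of pixels."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(7)
truth = [0.2 + 0.6 * (i / 99) for i in range(100)]  # hypothetical clean image row
frames = [[t + rng.gauss(0.0, 0.2) for t in truth] for _ in range(30)]
outputs = accumulate(frames)
print("single frame MSE:", round(mse(frames[-1], truth), 4))
print("accumulated MSE: ", round(mse(outputs[-1], truth), 4))
```

With `alpha = 0.2` the per-pixel noise variance settles at about α/(2 - α), roughly 11% of a single frame's, at the cost of lagging behind changes; a larger alpha reacts faster but removes less noise.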

Finally, even though rendering is much faster than it was a few years ago, the reality is that a denoising algorithm is still not fast enough to bring scenes rendered with pure Ray Tracing to real time; for that, we still have several years to wait.