Why, after so many years and so many advances in PC hardware, is Virtual Reality still a niche? In recent years we have seen NVIDIA and AMD gradually distance themselves from the medium, to the point of tiptoeing around it and not even mentioning it in their marketing. Is there a clear intent to sabotage VR, or is it a matter of technical limitations?

The high technical requirements of Virtual Reality

Virtual reality follows completely different rules from those for generating graphics on a conventional screen, mainly because two images are generated simultaneously, one for each eye. Although both are presented on the same LCD panel, they are actually two separate display lists. That is to say, VR without optimizations forces the CPU to work twice as hard per frame as in a conventional situation, but that is not the only element to take into account.

For immersion, latency must be as low as possible, and by this we mean the entire process, from the moment the player performs an action until its consequence reaches their eyes. That includes not only the time the CPU and the GPU take to generate a frame, but also the time beforehand to interpret the player's actions and the time afterwards to send the image to the screen. This is called motion-to-photon latency, and if it is above 20 milliseconds it can cause dizziness and nausea. The ideal? 5 milliseconds for augmented reality applications and 10 milliseconds for virtual reality.
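To make the idea of an end-to-end budget concrete, here is a minimal sketch that adds up the stages between the player's movement and the image on the panel and compares the total with the 20 ms and 10 ms figures above. The stage names and their timings are assumptions chosen for illustration, not measurements of any real headset.

```python
# Thresholds taken from the text above.
MOTION_SICKNESS_LIMIT_MS = 20.0
VR_TARGET_MS = 10.0


def motion_to_photon_ms(stages: dict[str, float]) -> float:
    """Sum every stage between the player's movement and the displayed image."""
    return sum(stages.values())


# Hypothetical per-stage timings in milliseconds (illustrative values only).
example_stages = {
    "input_sampling": 2.0,  # reading and interpreting the tracking sensors
    "cpu_frame": 4.0,       # game logic and command submission
    "gpu_frame": 8.0,       # rendering both eyes
    "scanout": 5.0,         # sending the finished image to the panel
}

total = motion_to_photon_ms(example_stages)
print(f"motion-to-photon latency: {total:.1f} ms")
print("under the 20 ms limit:", total <= MOTION_SICKNESS_LIMIT_MS)
print("meets the 10 ms VR target:", total <= VR_TARGET_MS)
```

With these made-up numbers the pipeline stays just under the comfort limit while missing the ideal target, which shows that every stage counts, not only the GPU.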

The other problem is that, since the screen is so close to our eyes, the pixel density and therefore the resolution must be very high if we do not want to see a grainy image or the gaps between pixels. This further increases the technical requirements in terms of power.

Why do we say that VR is being sabotaged?

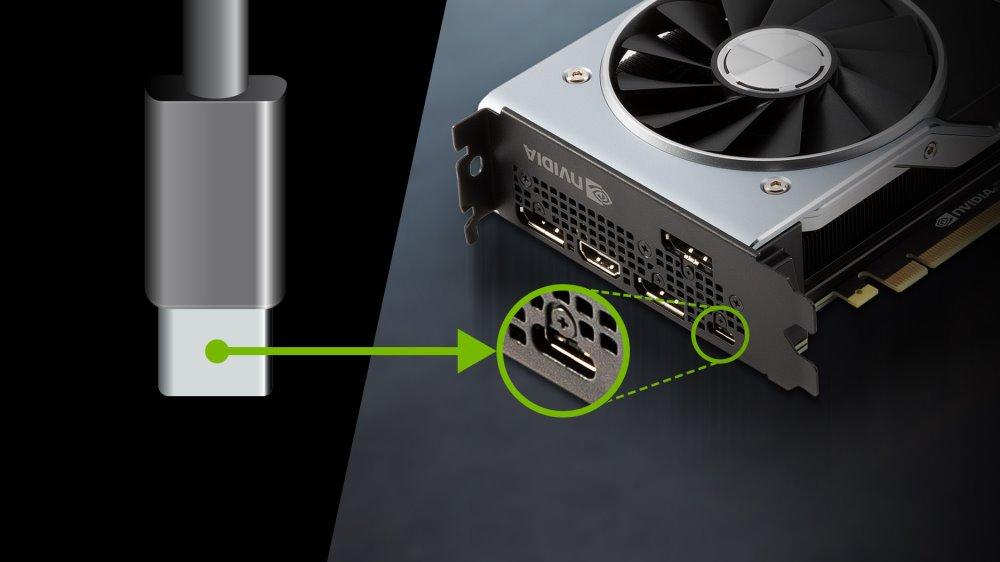

The first reason has to do with USB-C connectivity. One of the peculiarities of the PS5 is that its front USB-C port is wired so that it can receive the signal from the display controller integrated into its main chip, which means that the PSVR 2 has a direct connection to the GPU. On PC, things are more complicated.

- On laptops, manufacturers have only recently begun to make sure that video outputs other than the one driving the main screen have the same latency.

- On the desktop it is more complicated, since the image buffer has to be passed through PCI Express to RAM, copied there, and from there sent to the USB-C output of the motherboard. This adds several milliseconds of latency, which is far from ideal.

- The best solution? If the CPU has a built-in USB controller, typically via a PCIe to USB 3.1 or higher bridge, the data can be sent directly. The problem is that Intel never puts a USB controller in its CPUs, while AMD does.

The way around it? Forcing the graphics card and the CPU to run flat out when generating the graphics, to compensate for the added latency.

DLSS and FSR are part of the VR sabotage

Now, many of you will think that the solution could come from NVIDIA's and AMD's resolution scaling techniques, which allow more frames to be generated. The problem is that they add a fixed latency to do their work, which can be counterproductive. We must bear in mind that the speed at which frames are generated is one thing and motion-to-photon latency is another.

This is something many of you will experience when using FSR3 or DLSS3 and the frame interpolation both include. Take a game running at 60 FPS interpolated to 120 FPS and another running natively at 120 FPS and, believe us, you will notice that the response time feels much higher in the first case. This breaks immersion in virtual reality, and its inclusion and promotion can be seen, in a certain way, as a sabotage of VR.
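The 60 versus 120 FPS comparison can be put into rough numbers with a simplified model. It assumes that input is sampled when rendering of a frame starts, that a native frame is shown as soon as it is finished, and that with interpolation each real frame is held back for part of a base frame interval so that the generated in-between frame can be shown first. The function names, the `hold_fraction` of 0.5 and the `generation_cost_ms` parameter are assumptions for illustration and do not describe how FSR3 or DLSS3 work internally.

```python
def frame_time_ms(fps: float) -> float:
    """Time between two rendered frames at a given frame rate."""
    return 1000.0 / fps


def native_latency_ms(fps: float) -> float:
    """Assumed model: the frame is displayed as soon as it has been rendered."""
    return frame_time_ms(fps)


def interpolated_latency_ms(
    base_fps: float,
    hold_fraction: float = 0.5,       # assumed share of a base interval the real frame waits
    generation_cost_ms: float = 0.0,  # assumed fixed cost of building the in-between frame
) -> float:
    """Assumed model: render time, plus the hold-back, plus the generation cost."""
    base = frame_time_ms(base_fps)
    return base + hold_fraction * base + generation_cost_ms


native_120 = native_latency_ms(120)
interpolated_60_to_120 = interpolated_latency_ms(60)

print(f"native 120 FPS:            {native_120:.2f} ms")
print(f"60 FPS interpolated to 120: {interpolated_60_to_120:.2f} ms")
```

Under these assumptions both cases put 120 images per second on screen, yet the interpolated one reacts to input about three times more slowly, and even more slowly than plain 60 FPS.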

The solution that got lost along the way

In the midst of the failed boom in 3D screens, a little over a decade ago, in the era of DirectX 10, Crytek, the creator of the popular Crysis, launched a solution that converted one normal image into two stereo images at very little cost. What it really did was spare the processor from calculating two frames: the geometry only had to be calculated once rather than once per eye.

The technique used DX10 Geometry Shaders to generate a modified version of the geometry, displaced according to the camera, to obtain the two frames. This did not save the cost of shading the pixels, the most expensive part of generating a 3D image, but it did help a little and sped things up. The problem? From DX 11 onward Geometry Shaders fell into disuse, and so far neither NVIDIA nor AMD has implemented this mechanism in the raster unit of their GPUs.
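The idea of processing the geometry once and emitting it twice, displaced for each eye, can be sketched in a few lines. This is plain Python rather than shader code, it uses a simple pinhole projection with parallel eyes, and the function name, the 0.064 eye separation and the focal length are assumptions for illustration; it is not Crytek's implementation.

```python
def project_stereo(vertex, eye_separation=0.064, focal_length=1.0):
    """Project one view-space vertex for both eyes.

    vertex is (x, y, z) relative to the point midway between the eyes,
    with z > 0 in front of the viewer. The vertex is transformed once
    and only the horizontal offset differs per eye.
    """
    x, y, z = vertex
    half = eye_separation / 2.0
    # The left eye sits at -half on the x axis, so the vertex is +half away from it.
    left = (focal_length * (x + half) / z, focal_length * y / z)
    # The right eye sits at +half, so the vertex is -half away from it.
    right = (focal_length * (x - half) / z, focal_length * y / z)
    return left, right


near_left, near_right = project_stereo((0.0, 0.0, 1.0))
far_left, far_right = project_stereo((0.0, 0.0, 10.0))

# Horizontal disparity shrinks with distance, which is what creates the depth cue.
print("near disparity:", near_left[0] - near_right[0])
print("far disparity: ", far_left[0] - far_right[0])
```

Only the projection is duplicated here; as the text notes, the pixels of both images still have to be shaded separately.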

The real reason, video memory

It is not that there is a conscious sabotage of VR; rather, virtual reality requires not only a very high refresh rate but also an extremely high resolution. Other features such as Ray Tracing, despite their lack of popularity among users, can at least be sold on their visual appeal, whereas VR is a much harder sell. The point is that:

- Increasing the resolution has an impact on the bandwidth of the video memory.

- Raising the frame rate does the same.

What happens? The biggest limitation graphics cards have today is the speed of the video memory, and that is the real reason solutions such as FSR and DLSS exist. In any case, as we have already said, they are counterproductive for virtual reality.
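How resolution and frame rate multiply into memory traffic can be shown with a rough calculation. The sketch below only counts writing the final color buffer once per frame at 4 bytes per pixel; a real renderer reads and writes memory many more times per pixel (textures, depth, intermediate buffers), so the absolute numbers are far too low and only the scaling is meaningful. The function name and the headset figures (2000x2000 per eye at 120 Hz) are hypothetical.

```python
def framebuffer_traffic_gb_s(width, height, fps, bytes_per_pixel=4, passes=1):
    """Rough traffic in GB/s: pixels x bytes per pixel x passes x frames per second."""
    return width * height * bytes_per_pixel * passes * fps / 1e9


# A conventional 1080p screen at 60 FPS.
flat_1080p60 = framebuffer_traffic_gb_s(1920, 1080, 60)

# Hypothetical headset: two 2000x2000 eye images at 120 FPS.
vr_example = framebuffer_traffic_gb_s(2 * 2000, 2000, 120)

print(f"1080p at 60 FPS:        {flat_1080p60:.3f} GB/s")
print(f"VR example at 120 FPS:  {vr_example:.3f} GB/s")
print(f"ratio: {vr_example / flat_1080p60:.1f}x")
```

Because traffic is the product of both factors, doubling the frame rate on top of roughly four times the pixels multiplies the load on video memory rather than adding to it.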