A series of tests revealed that nine out of 10 leading makers of real-time facial recognition software failed against deepfake attacks, according to a report by Sensity AI, a startup focused on identity security.

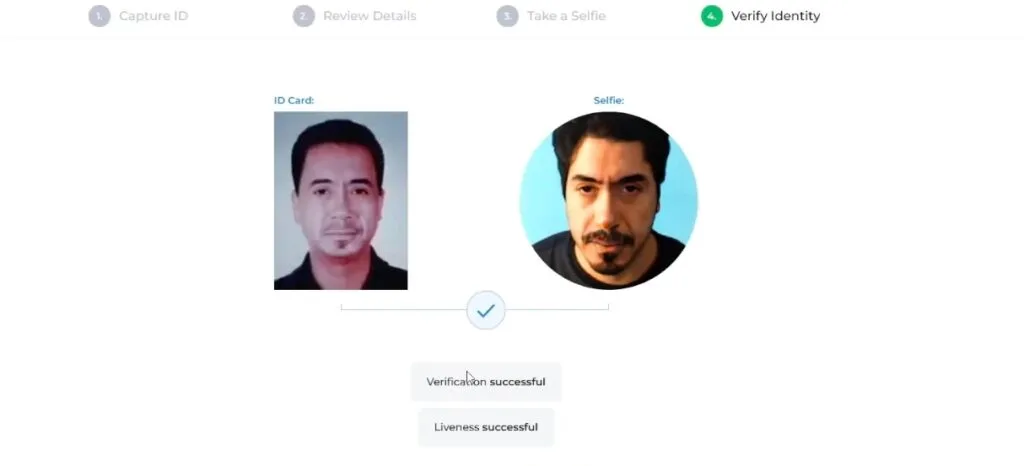

In the tests, the engineers scanned a person’s photo from an identity document and mapped that likeness onto another person’s face. The attacks targeted the software’s “liveness detection”, which attempts to authenticate identities in real time using images or video streams from cameras, as in the facial recognition used to unlock cell phones.

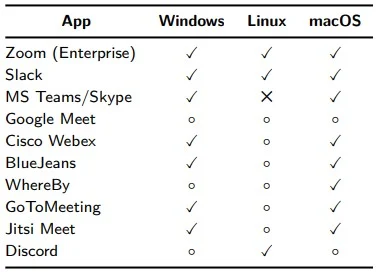

Face swap applied to the identification photo. A single photo of the target is enough to use their face. Image: Reproduction/Sensity AI

According to Sensity, nine of the 10 companies tested failed; the names of the manufacturers susceptible to live deepfake attacks have not been published for legal reasons. “We told them ‘it looks like you are vulnerable to this type of attack’ and they said ‘we don’t care,’” Francesco Cavalli, head of operations at Sensity, told The Verge. “We decided to publish it because we think that, at a corporate level and in general, the public should be aware of these threats.”

“We tested 10 solutions and found that nine of them were extremely vulnerable to deepfake attacks,” said Sensity Chief Operating Officer Francesco Cavalli.

For banks and government authorities, relying on liveness tests is risky, especially if these institutions use automated biometric authentication. Although attacks are not always easy to carry out, “sometimes all they [the attackers] need are cheap special phones available on the market for a few hundred dollars, capable of hijacking the mobile camera and injecting pre-made deepfake models, as happened in a gigantic scheme against the Chinese tax system in 2021.”

“I can create an account; I can move illegal money to digital bank accounts from encrypted wallets,” says Cavalli, giving examples. “Or maybe I can apply for a mortgage because today online lending companies are competing with each other to issue loans as quickly as possible.”

The report also warns that most companies that rely on KYC (Know Your Customer, the process of identifying and verifying a customer’s identity) are unaware that, despite their efforts to prevent online fraud with advanced technologies, their identity verification is not well designed: techniques such as face matching and liveness detection (both active and passive) still cannot provide enough security. As the tests show, deepfakes can easily spoof KYC systems as the technology evolves.

Face Matching and Liveness can be easily tricked by deepfakes. Image: Reproduction/Sensity AI

Deepfake: the risks that come with artificial intelligence

Deepfakes, AI-generated videos in which a person’s face in an existing video is replaced with another person’s likeness, increased by 330% between October 2019 and June 2020, according to startup Deeptrace.

The results of Sensity AI’s test series are not the first to show that deepfakes pose a danger to facial recognition systems. Identity spoofing not only threatens security systems, but can also influence public opinion during an election or implicate a person in a crime.

“Imagine what you can do with fake accounts created with these techniques. And nobody is able to detect them,” warned Cavalli.

With information from The Verge, The Register and VentureBeat