A significant step that hints at the future of autonomous programming… for better and for worse.

DeepMind has unveiled the progress of AlphaCode, an AI-based system designed to learn to write programs on its own from problem descriptions written by humans. Apparently, it is doing remarkably well: it is reportedly about as good as the average human programmer.

To understand why this announcement is interesting, we must first look at the organization behind it. DeepMind, a subsidiary of Google's parent company Alphabet, is one of the leading names in artificial intelligence research. That is no exaggeration: in just a few years, these true wizards have built an extremely solid reputation, and problems of immense complexity tend to fall wherever they turn their attention.

So far, their systems have defeated professional StarCraft II players and beat Lee Sedol, one of the greatest Go players of all time, a defeat that weighed on his decision to retire. And it's not just about games, far from it. DeepMind has also made sensational scientific contributions, particularly in the medical and pharmaceutical fields. Very recently, for example, we told you about AlphaFold, a revolutionary system that has already begun to redefine molecular biology.

Human creativity, the missing piece of the puzzle

Unlike AlphaFold, however, AlphaCode has nothing to do with molecular origami. Its field is programming. And it is not content to be a simple autocompletion system like Visual Studio's IntelliSense, or an AI-based suggestion tool like GitHub Copilot, which is built on OpenAI's Codex. We are talking about a system that takes in the description of a problem and then produces a program to solve it.

This is an inherently complex activity, and it is even harder for an artificial intelligence. An AI has to work without human intuition, a real handicap, since intuition lets developers take many shortcuts without even realizing it.

Developing an AI capable of taking on this task is therefore extremely difficult. Teaching it basic syntax and logic is not enough; it must also learn to correctly interpret every element of the context. And that's another story entirely. "This is very difficult to do, as it requires both strong programming skills and the ability to creatively problem solve," explains Petr Mitrichev, a software engineer at Google.

At the level of the average programmer

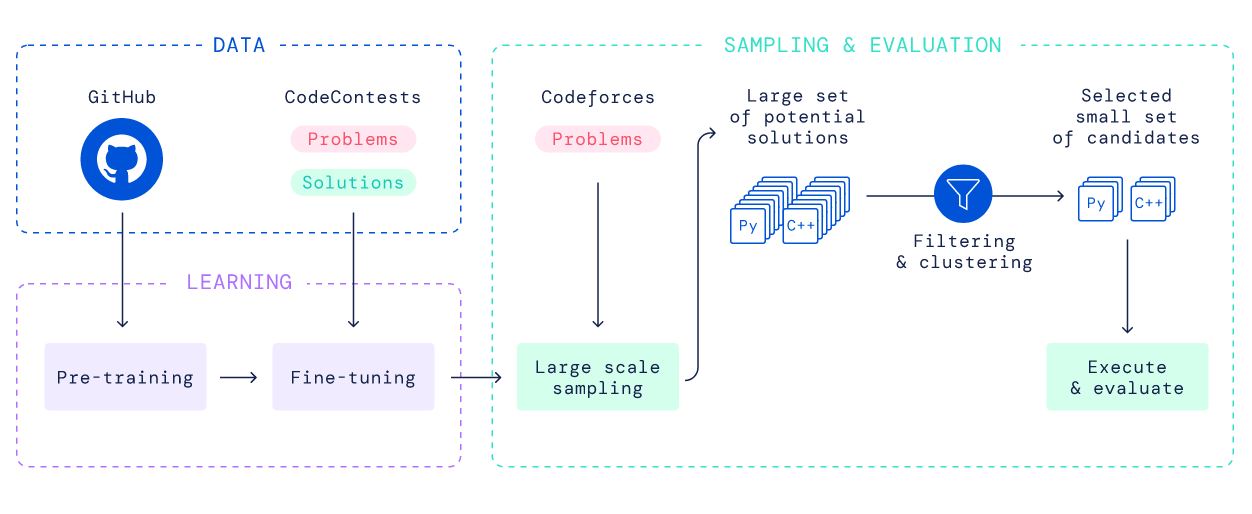

But nothing seems to discourage the DeepMind teams, who have already shown a fondness for seemingly insurmountable problems. In the end, they got their way with a system trained on Codeforces problems.

Codeforces is a competitive programming platform on which programmers of all stripes can test their ability to solve a wide variety of problems. The results are compiled into a leaderboard that rewards the fastest and cleverest developers. And it's not just an educational tool: companies, especially American ones, often use this type of platform to assess candidates during recruitment. Moreover, many of its problems are unique, so the AI could not "cheat" by reusing a generic solution. This makes it an extremely relevant test environment for this kind of work.

For several weeks, AlphaCode cut its teeth on a set of problems carefully selected by the engineers. To check its progress, they then tested it on a "massive amount" of problems in Python and C++, each requiring very different approaches and reasoning. Satisfied with the system's performance, DeepMind threw its creation straight into the deep end by entering it in ten Codeforces competitions, where it had to compete against flesh-and-blood humans.

And against all odds, AlphaCode did well: on average, it ranked within the top 54% of participants, which puts it roughly on par with the average human competitor. The one real blemish: approximately 40% of the solutions it produced contained vulnerabilities that a hacker could have exploited. But that is a different and even more complex problem, which DeepMind will tackle later.

From “simple” programming to autonomous development

"I can safely say that AlphaCode exceeded all my expectations," says Mike Mirzayanov, the founder of Codeforces. "I was skeptical because even in a simple competitive problem, you not only have to implement the algorithm, but also invent it from scratch — and that's what's very difficult," he explains.

Of course, AlphaCode is still far from dominating its field the way its siblings AlphaGo and AlphaStar, to name just two, dominate theirs. But this work nevertheless represents a significant, even spectacular, advance in the ability of AI-based systems to solve human problems. And this last point matters a great deal: it is an essential step toward exploiting the full potential of this technology.

If they keep progressing at this pace, systems like this one could help developers produce clever, elegant solutions to problems that are tedious or even beyond the reach of the human brain. Eventually, this could pave the way for fully automated programming systems, with developers acting as both pilots and guarantors.

The dawn of a new paradigm

This approach promises to multiply the capabilities, efficiency and productivity of a host of systems across the industry. But that's not all: AlphaCode also has real potential for research, particularly in artificial intelligence, and this is arguably the most important implication of this work. Once mature, AlphaCode's exploratory approach could become an endless source of inspiration for human developers. One could even imagine it eventually producing a new, even more accomplished version of itself. This would kick off a cycle of perpetual, automated innovation within a human-controlled environment.

Of course, this also raises the specter of certain dystopian scenarios: in the hands of a hacker, an AI of this type could, for example, be used to plant malicious code, such as backdoors, in a targeted system. But that should not obscure the incredible potential of these systems. Given DeepMind's scientific pedigree, it will be well worth following the next stages of this work, which could one day redefine our relationship with technology.

The research paper is available here.