So developing for Stadia meant building games to a fixed specification, just as on a video game console. Rather than allowing for increasingly powerful and complex games, new servers simply let the service take on more players, through something as simple as renewing the hardware in the nodes. That hardware would become increasingly cheaper for Google, while the costs for users would not go down; instead they would be hidden behind a wide library of games that would initially serve to attract the public to the platform.

The commitment to Cloud Gaming

Making a video game console goes against the DNA of a service-focused Google, so the approach its strategists put on the table was the following: create a very cheap starter kit with a controller and a decoder to connect to each user’s TV. The idea was to sell those two pieces at a high profit margin, but above all to have a group of users pay for the entry-level hardware, users who, because of time-zone differences, would not all be using the servers at once.

However, running games from Google’s side required a powerful infrastructure, and AMD saw this as a great business opportunity. The initial proposal was to use ARM, but the graphics cards from Lisa Su’s company have their IMC designed for use with an x86 processor. NVIDIA was not chosen because it already had its own service, GeForce Now. The problem? Custom hardware would take at least three years to be ready. So for the first deployment of the architecture, instead of developing its own hardware, Google opted for parts already available on the market.

The AMD Opportunity

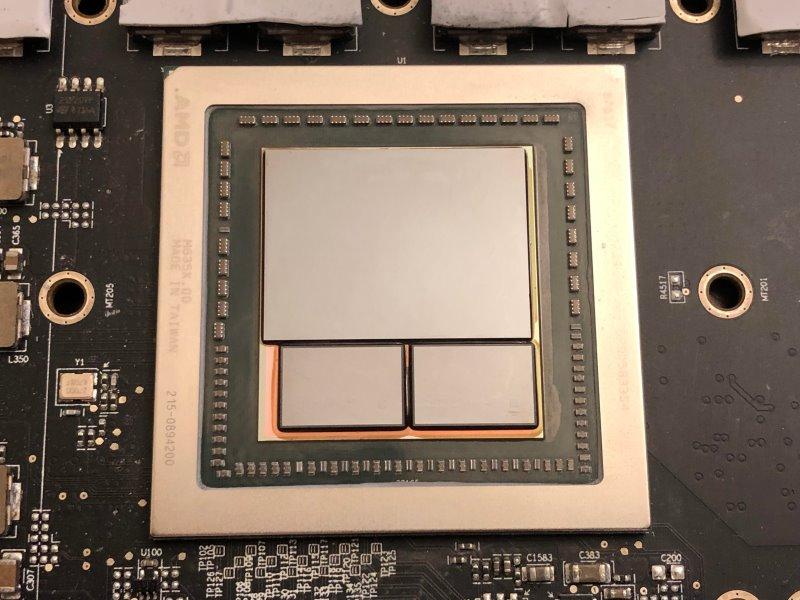

To set the scene, we have to remember that AMD had suffered a huge setback with its RX Vega graphics cards. They had been crushed by NVIDIA’s Pascal architecture, sold as the GTX 1000 series, in every respect, but especially in a crucial one: performance per area. In the world of PC hardware, price is determined by performance, and the margins on the RX Vega were very thin, since it used what we call a 2.5D IC configuration, in which the GPU, the HBM2 memory and the interposer they are mounted on form a single package.

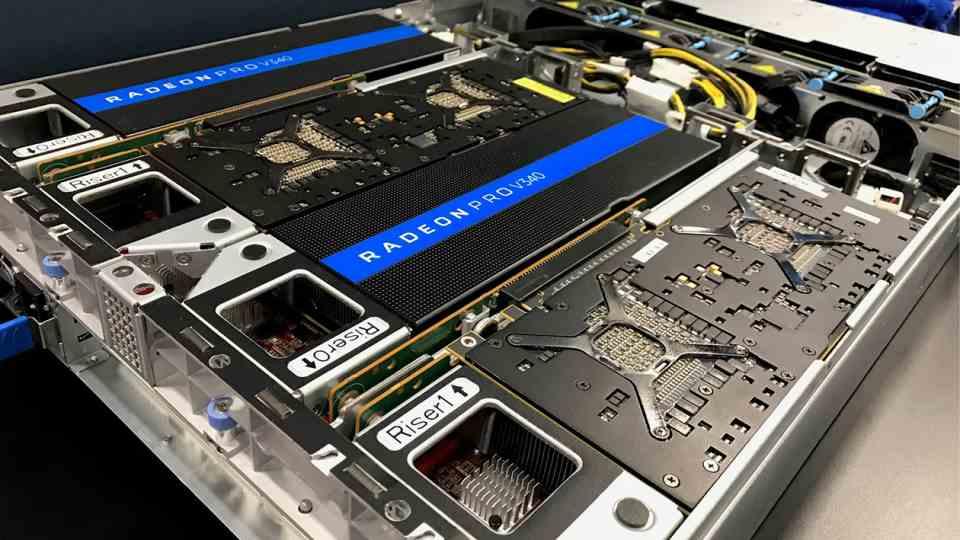

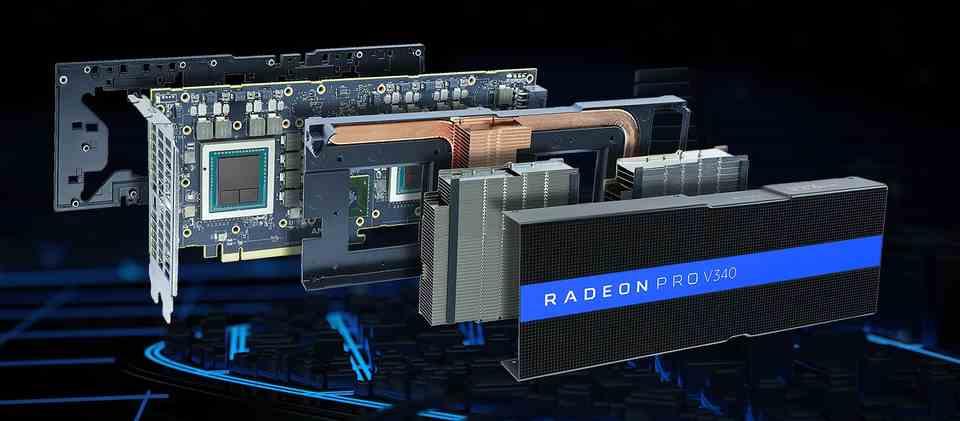

The solution for clearing the inventory came on two fronts. The first was massive sales to cryptocurrency mining farms. The second was the creation of a new graphics card called the Radeon Pro V340, a dual card that combined two RX Vega 56 GPUs with their memory. It was presented at the time as the heart of the Google Project Stream servers, the project that would eventually become Stadia.

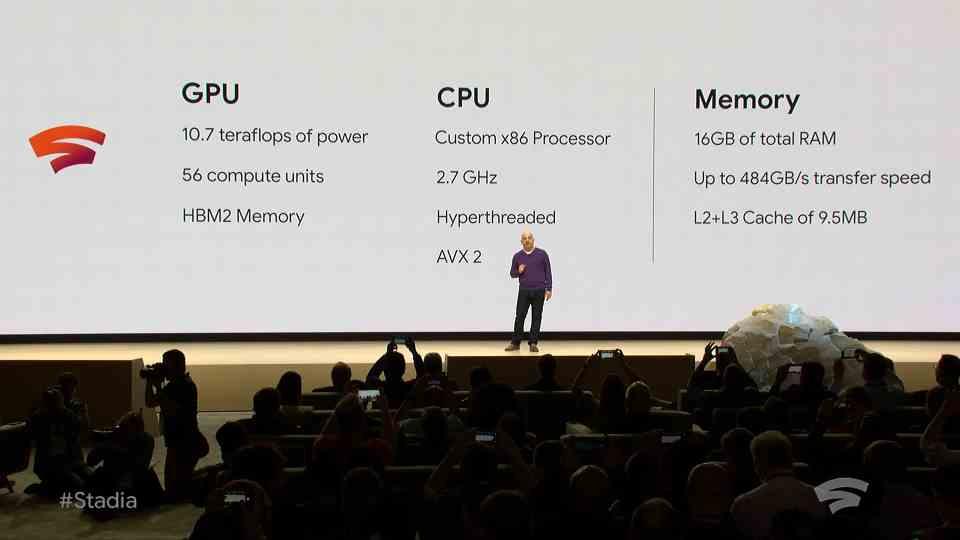

Stadia’s hardware was a PC

Google never showed the inside of the Stadia servers, only a few promotional images along with some specifications. However, months before the launch of Stadia, AMD revealed internal images of the servers, which held four Radeon Pro V340 cards. Since those cards are dual, that amounts to a total of 8 GPUs per server. Each GPU had enough power to deliver cloud gaming at 4K, but the graphics could also be virtualized so that up to four Stadia virtual machines shared one GPU. Since 4K has four times as many pixels as Full HD, two profiles were created. In the first, you paid nothing beyond the game itself; the second was the subscription, which gave you a full graphics chip and access to content at higher resolution.
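To make the numbers in this section easier to follow, here is a minimal sketch of the per-server arithmetic. The card, GPU and virtual machine counts come from the text above; the exact resolutions (3840×2160 for 4K and 1920×1080 for Full HD) are our assumption, and the variable names are purely illustrative.

```python
# Figures quoted in the article.
CARDS_PER_SERVER = 4   # Radeon Pro V340 cards per server
GPUS_PER_CARD = 2      # each V340 is a dual-GPU card
MAX_VMS_PER_GPU = 4    # Stadia virtual machines that can share one GPU

# Assumed resolutions (not stated in the article).
UHD_4K = (3840, 2160)
FULL_HD = (1920, 1080)


def pixels(resolution):
    """Return the number of pixels in a (width, height) resolution."""
    width, height = resolution
    return width * height


gpus_per_server = CARDS_PER_SERVER * GPUS_PER_CARD
max_sessions_per_server = gpus_per_server * MAX_VMS_PER_GPU
pixel_ratio = pixels(UHD_4K) / pixels(FULL_HD)

print(f"GPUs per server: {gpus_per_server}")
print(f"Maximum shared sessions per server: {max_sessions_per_server}")
print(f"4K to Full HD pixel ratio: {pixel_ratio:.0f}")
```

Under these assumptions, a fully shared server would host 32 Full HD sessions instead of 8 sessions with a whole GPU each, which matches the fourfold difference in pixel count between the two profiles.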

The parts of a console are designed to stay in continuous manufacture for five years, whereas on PC some components disappear from the market after three or four years. The problem is not that Google built the Stadia servers from computer parts, but that some of those parts already had an expiration date. What’s more, the compilation of the shaders in the games was tied to a GPU that was about to be discontinued. In any case, Google always held out to developers the prospect of a Stadia Gen 2, with console-style hardware in terms of cost and the same performance.

Consider that the PS5 offers far more power than Stadia at a much lower cost, because it uses fewer and cheaper components, such as GDDR6 memory instead of the expensive HBM2.

Stadia’s problem at the start was its infrastructure

Considering that a user has access to at least one of the GPUs in a server, we need to know how many of these servers were deployed in the first phase of Stadia to work out the minimum number of users the service could support. That number is 60,000 concurrent users, with a maximum of 240,000, since Stadia supports four virtual machines per GPU, although in that case each one gets only a quarter of the available graphics power. That is a very low figure for a service that hopes to be successful and profitable. The Stadia team did not have the time to set up the servers, did not have enough capital, and had to go with PC parts that quickly became outdated.
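The capacity figures above can be reproduced with a short calculation. This is only a sketch: the 60,000 and 240,000 figures and the four virtual machines per GPU come from the article, while the implied server count is our own derivation, assuming every server carried the 8 GPUs described earlier.

```python
# Figures quoted in the article.
MIN_CONCURRENT_USERS = 60_000  # one full GPU per user
MAX_VMS_PER_GPU = 4            # virtual machines that can share one GPU
GPUS_PER_SERVER = 8            # four dual-GPU cards per server

# One user per GPU means the GPU count equals the minimum user count.
gpus_deployed = MIN_CONCURRENT_USERS

# With every GPU split four ways, capacity quadruples...
max_concurrent_users = gpus_deployed * MAX_VMS_PER_GPU

# ...but each user is left with a quarter of a GPU.
gpu_share_when_full = 1 / MAX_VMS_PER_GPU

# Derived, not stated in the article: servers implied by the GPU count.
implied_servers = gpus_deployed // GPUS_PER_SERVER

print(f"GPUs deployed: {gpus_deployed:,}")
print(f"Maximum concurrent users: {max_concurrent_users:,}")
print(f"GPU share per user at maximum load: {gpu_share_when_full:.0%}")
print(f"Implied servers in the first phase: {implied_servers:,}")
```

If the assumption of 8 GPUs per server holds, the first phase would correspond to roughly 7,500 servers, a figure the article itself does not give.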

This was not a problem for the processor, RAM and storage, but it was for the graphics card, because Google had opted for an architecture whose days were numbered and that AMD itself had already decided to retire. That forced them to consider Stadia Gen 2, a project that never saw the light of day because of the low number of users, and that was to be based on a single chip for processor and graphics in order to reduce costs. However, it never went beyond documentation on the table and a promise to developers.

The big publishers were not entirely convinced

A service needs games that are not ephemeral. It cannot launch short, narrative-heavy games, because once players finish them they stop paying the monthly fee. Unfortunately, video games are not like movies and television, where the time and cost of producing a new series are much lower. So with Stadia, Google planned to create two games-as-a-service titles that would take years to appear. What do you do in the meantime? Count on the large independent publishers, that is, “The Industry”, to support your project.

And what do companies like Ubisoft, Activision Blizzard, Electronic Arts and others do? Converting games already released on the market is highly lucrative; however, that does not differentiate you from the competition. You need your own Super Mario so that people buy your box, sign up for your service or buy your product. Unfortunately, Google had neither that, nor the patience, nor the time.

Of all of them, the only one that officially joined was Ubisoft, although behind the scenes at Google they were rubbing their hands. The reason? Stadia had the applause of the industry, but the publishers preferred to wait until there was a larger infrastructure behind it, one that could support more users. Independent publishers were not interested in an infrastructure of fewer than 1 million concurrent players. As we said before, the initial infrastructure was designed to support a maximum of 240,000 simultaneous users.
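To get a feel for the gap between what publishers wanted and what Google had deployed, here is a rough estimate. The 1 million and 240,000 figures are from the article; the 8 GPUs per server and four virtual machines per GPU are taken from the server description earlier, and the `servers_needed` helper is a hypothetical name used only for this illustration.

```python
import math

TARGET_CONCURRENT_USERS = 1_000_000  # threshold the publishers wanted
INITIAL_MAX_USERS = 240_000          # maximum of the initial deployment


def servers_needed(target_users, gpus_per_server=8, vms_per_gpu=1):
    """Servers required so that target_users can play at the same time."""
    sessions_per_server = gpus_per_server * vms_per_gpu
    return math.ceil(target_users / sessions_per_server)


# Every user on a full GPU (the subscription profile).
servers_full_gpu = servers_needed(TARGET_CONCURRENT_USERS)

# Every GPU shared by four virtual machines (the free profile).
servers_shared_gpu = servers_needed(TARGET_CONCURRENT_USERS, vms_per_gpu=4)

# How many times larger the target is than the initial maximum.
shortfall_factor = TARGET_CONCURRENT_USERS / INITIAL_MAX_USERS

print(f"Servers for 1M users, full GPU each: {servers_full_gpu:,}")
print(f"Servers for 1M users, shared GPUs: {servers_shared_gpu:,}")
print(f"Target vs initial maximum: {shortfall_factor:.2f}x")
```

Even in the most favorable case, with every GPU split four ways, the deployment would have needed to grow more than fourfold to reach the publishers' threshold.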

The recipe for disaster

Stadia’s big problem was clear from the beginning: a total lack of infrastructure, and of games that made a difference to an audience that saw no reason to abandon other platforms. As if that weren’t enough, flat-rate subscription services such as Amazon Luna appeared. Luna has intelligently focused on a single market instead of trying to cover them all, runs on hardware that was not being discontinued, and is a PC not only in hardware but also in software.

Google should never have conceived Stadia as a console in the cloud. Each and every game had to have its code converted, a problem that neither GeForce Now nor Luna shares. Cloud-based consoles have the problem of limited performance and poorer quality, but they have the advantage of a catalog of games. Stadia, on the other hand, had hardly any catalog, and even less that set it apart. In any case, it shows that Stadia was dead from the beginning, since it never gained enough subscribers to justify expanding its infrastructure or to motivate a second generation. Rest in peace, Stadia; you are going to be the textbook example of how things should not be done.