One of the constraints hardware designers face is power consumption: the goal is always to reach a given level of performance within a specific power budget. The problem? Users always look for the graphics card that delivers the highest frame rates in their favorite games, along with better image quality. As a result, the amount of power consumed has to rise from time to time.

Why will your graphics card raise your electricity bill?

Of all the components inside your computer, the graphics card has the greatest impact on the electricity bill. A CPU that draws around 200 W is considered excessive, yet the same figure is considered normal for a graphics card. Some models already come close to 400 W, and soon we will see the 12VHPWR (12+4-pin) connector, which could allow consumption to double and, with it, double the cost of keeping the graphics card running.
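To put those wattages in perspective, here is a minimal sketch of what they mean on a bill. The usage pattern (3 hours per day at full load) and the price (0.30 per kWh, in whatever your currency is) are assumptions chosen for illustration, not figures from this article, so substitute your own.

```python
def yearly_cost(watts, hours_per_day, price_per_kwh):
    """Return the yearly electricity cost of a device drawing `watts`."""
    kwh_per_year = watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh

# Assumed values, for illustration only.
HOURS_PER_DAY = 3
PRICE_PER_KWH = 0.30

for watts in (200, 400):
    cost = yearly_cost(watts, HOURS_PER_DAY, PRICE_PER_KWH)
    print(f"{watts} W -> {cost:.2f} per year")
```

Because the cost scales linearly with the power draw, a card that consumes twice as much costs twice as much to run for the same hours of play.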

The GPU will increase its power consumption

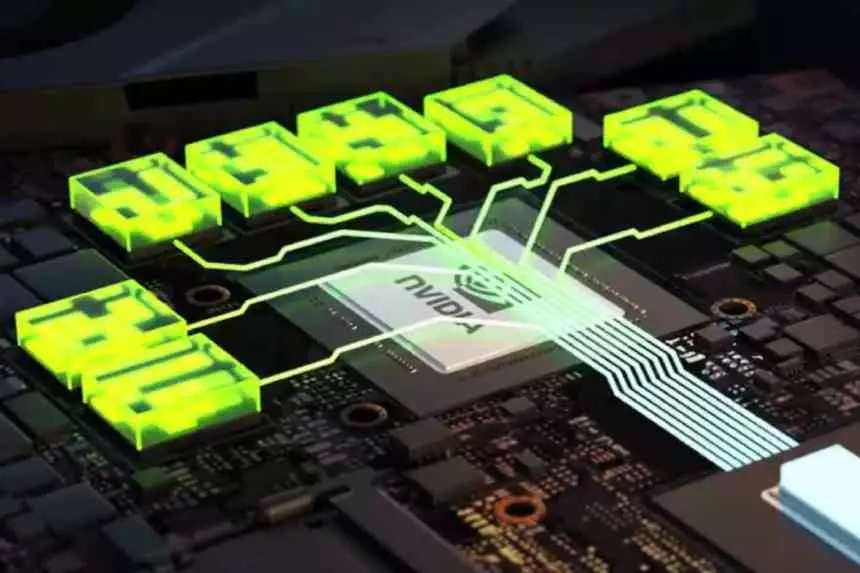

The main component of any graphics card is its GPU, which, unlike a CPU, is made up today of dozens of cores, and in some cases hundreds. In any case, we must start from the idea that generating graphics is a highly parallel task. For example, rendering a Full HD image means dealing with about 2 million pixels simultaneously, and at 4K resolution that figure rises to about 8 million.
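The pixel counts follow directly from the resolutions. A quick check, assuming the usual 1920×1080 and 3840×2160 dimensions for Full HD and 4K, with 60 FPS taken as an illustrative frame rate:

```python
# Assumed frame dimensions for each resolution name.
RESOLUTIONS = {"Full HD": (1920, 1080), "4K": (3840, 2160)}
FPS = 60  # illustrative target frame rate

def pixel_count(width, height):
    """Number of pixels in a single frame."""
    return width * height

for name, (width, height) in RESOLUTIONS.items():
    pixels = pixel_count(width, height)
    print(f"{name}: {pixels:,} pixels per frame, "
          f"{pixels * FPS:,} pixels per second at {FPS} FPS")
```

Going from Full HD to 4K multiplies the work per frame by exactly four, which is why resolution weighs so heavily on the number of cores a GPU needs.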

Because each pixel, or any other graphics primitive used to compose the scene, is independent of the rest, a design with a large number of cores becomes important, within a fixed power budget of course. So from one generation to the next, engineers at Intel, AMD, and NVIDIA have to rack their brains to fit more cores into the same budget and reach higher clock speeds. Until now this was possible thanks to the gains from each new manufacturing node. However, the node has become the limiting factor, and reaching certain performance levels now requires increasing the power consumption of graphics cards.

To understand it better, think of a neighborhood that is renovated every so often and whose number of houses keeps growing little by little, all supplied by the same power plant. A point will come when the plant has to be upgraded in order to provide electricity to all of them.

VRAM also plays an important role

Apart from the graphics chip, the card carries a series of smaller chips that are connected to the GPU and serve as memory for data and instructions. This type of memory differs from the one used as system RAM in that it prioritizes the amount of data it can transmit per second over access latency. This is one of the problems when it comes to power consumption, and it is going to lead to the adoption of large cache memories inside the graphics chip in order to cut the energy cost of data access.

The problem is that the volume of information a graphics card has to handle is so large that it is impossible to fit that much memory inside the chip, so external memory is still needed. The goal for the future, though, is to increase the size of the last-level caches until almost 100% of instructions can be served at a cost of less than 2 picojoules per transmitted bit. How much does VRAM consume? Currently about 7 picojoules per transmitted bit, but that could rise to 10 in the future.

Since power, measured in watts, is the number of joules consumed per second, the problem is very clear: the energy cost per bit multiplied by the number of bits moved per second gives the power that memory traffic draws. In addition, as the graphical complexity of games increases, and with it the number of cores, so does the required bandwidth.
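Since watts are joules per second, the power drawn by memory traffic can be estimated as energy per bit times bits moved per second. The sketch below uses the 2, 7 and 10 pJ/bit figures from the text; the 1 TB/s bandwidth is an assumed round number for illustration, not a figure for any specific card.

```python
def memory_power_watts(picojoules_per_bit, bandwidth_bytes_per_s):
    """Power drawn by data transfers: (J/bit) * (bits/s) = J/s = W."""
    joules_per_bit = picojoules_per_bit * 1e-12
    bits_per_second = bandwidth_bytes_per_s * 8
    return joules_per_bit * bits_per_second

BANDWIDTH = 1e12  # assumed: 1 TB/s of sustained traffic

cases = (
    ("on-chip cache target", 2),
    ("VRAM today", 7),
    ("VRAM, possible future", 10),
)
for label, pj_per_bit in cases:
    watts = memory_power_watts(pj_per_bit, BANDWIDTH)
    print(f"{label}: {watts:.0f} W at 1 TB/s")
```

Under these assumptions, the same traffic costs 16 W when served at the cache target, 56 W from today's VRAM, and 80 W at 10 pJ/bit, and every increase in bandwidth scales those numbers proportionally.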

Your electricity bill will not go up that much with your future graphics card

If you are worried about your graphics card raising your electricity bill, let us put your mind at ease. Only the top-of-the-range models, designed to run games at 4K with ray tracing enabled or at frame rates in the hundreds, will consume more than current graphics cards and will therefore use the new 12+4-pin connector. The remaining tiers will stay within current consumption levels, which range from 75 W to 375 W.

So if your screen has a resolution of 1080p or even 1440p and you want to play at 60 FPS, you do not have to worry about your graphics card's consumption increasing, since you will not need to buy one that draws 450 W or more. Rather, it is the enthusiasts, those who want maximum performance at any price, who will have to worry most about rising electricity bills.